Abstract

A growing number of digital objects are designated for

long term preservation - a time scale during which technologies, formats and

communities are very likely to change. Specialized approaches, models and technologies are

needed to guarantee the long-term understandability of the preserved data.

Maintaining the authenticity (trustworthiness) and provenance (history of creation, ownership,

accesses and changes) of the preserved objects for the long term is of great

importance, since users must be confident that the objects in the changed

environment are authentic. We

present a novel model for managing authenticity in long term digital

preservation systems and a supporting archival storage component. The model and

archival storage build on OAIS, the leading standard in the area of long-term

digital preservation. The

preservation aware storage

layer handles provenance data, and documents the relevant events. It collocates

provenance data (and other metadata) together with the preserved data in a

secure environment, thus enhancing the chances of their co-survival. Handling authenticity and

provenance at the storage

layer reduces both threats to authenticity and computation times. This work

addresses core issues in long-term digital preservation in a novel and

practical manner. We present an example of managing authenticity of data

objects during data transformation at the storage component.[1]

1. Introduction

Long Term Digital

Preservation (LTDP) is the set of processes, strategies and tools used to store

and access digital data for long periods of time during which technologies,

formats, hardware, software and technical communities are very

likely to change. The LTDP problem includes aspects of bit preservation and

logical preservation. Bit preservation is the ability to restore the bits of a

data object in the presence of storage media degradation, hardware obsolescence

and/or catastrophes. Logical preservation entails preserving the intellectual

content of the data in the face of future technological and knowledge changes.

Logical preservation is still an open research area that presents a great challenge as it needs to enable future interpretation of the preserved data by consumers that may use technologies unknown today and hold a

different knowledge base from that of the data

producers.

Our work leverages

the Open Archival Information System (OAIS), an ISO standard for LTDP that

provides a general framework for the preservation of digital assets [1]. OAIS

specifies concepts, strategies and functions, and provides a high-level,

flexible reference model (information and functional) for LTDP.

According to the

OAIS information model, each data object requires Representation Information

(RepInfo). The RepInfo is a set of objects used to interpret the data object.

Each RepInfo may have RepInfo of its own, creating a RepInfo network. It

ends at the knowledge base of the designated community that uses the data.
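The recursion in a RepInfo network can be illustrated with a minimal sketch. The class and function names below (`RepInfo`, `unresolved_repinfo`) and the sample entries are assumptions made for illustration; they are not defined by OAIS. The sketch walks the network and stops wherever the designated community's knowledge base already covers an item.

```python
from dataclasses import dataclass, field


@dataclass
class RepInfo:
    """Hypothetical RepInfo node: a name plus the RepInfo needed to interpret it."""
    name: str
    requires: list["RepInfo"] = field(default_factory=list)


def unresolved_repinfo(root: RepInfo, community_knowledge: set[str]) -> list[str]:
    """Return the names of RepInfo items that must be preserved because the
    designated community does not already understand them."""
    needed: list[str] = []
    seen: set[str] = set()
    stack = [root]
    while stack:
        node = stack.pop()
        # The network ends at the community's knowledge base.
        if node.name in seen or node.name in community_knowledge:
            continue
        seen.add(node.name)
        needed.append(node.name)
        stack.extend(node.requires)
    return needed


# Illustrative network: a record schema is interpreted via XML, which relies on ASCII.
ascii_info = RepInfo("ASCII")
xml_info = RepInfo("XML", [ascii_info])
schema_info = RepInfo("record schema", [xml_info])
```

With a community that already understands ASCII, `unresolved_repinfo(schema_info, {"ASCII"})` lists only the schema and the XML description.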

The structure

preserved in the archival storage is called the Archival Information Package

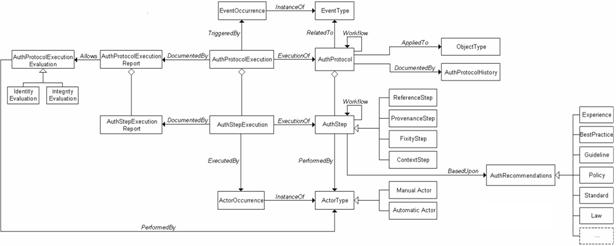

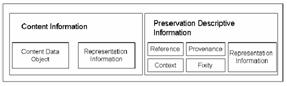

(AIP), depicted in figure 1.

Figure 1 - AIP structure in OAIS [1]

Its content

information section includes the data object and its RepInfo. In addition, the

AIP contains the Preservation Description Information (PDI) section,

which includes several types of metadata: provenance guarantees the

documentation of the life cycle of the AIP, fixity guarantees its integrity,

context documents its relation to its environment and

reference keeps a

set of identifiers for the AIP.
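The AIP layout described above can be sketched as a small data structure. The field names and types are assumptions chosen for illustration and do not reproduce any normative OAIS or PDS schema; `missing_pdi_sections` is a hypothetical helper.

```python
from dataclasses import dataclass, field


@dataclass
class PDI:
    """Preservation Description Information, with its four kinds of metadata."""
    provenance: list[str] = field(default_factory=list)    # life-cycle events
    fixity: dict[str, str] = field(default_factory=dict)   # algorithm -> digest
    context: dict[str, str] = field(default_factory=dict)  # relation -> related object
    reference: list[str] = field(default_factory=list)     # identifiers of the AIP


@dataclass
class ContentInformation:
    data_object: bytes
    rep_info: list[str]  # names of the RepInfo needed to interpret the data


@dataclass
class AIP:
    content: ContentInformation
    pdi: PDI


def missing_pdi_sections(aip: AIP) -> list[str]:
    """List the PDI sections that are still empty for this AIP."""
    sections = {
        "provenance": aip.pdi.provenance,
        "fixity": aip.pdi.fixity,
        "context": aip.pdi.context,
        "reference": aip.pdi.reference,
    }
    return [name for name, value in sections.items() if not value]
```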

An important part

of an LTDP system is the ability to manage the authenticity of a data object.

Authenticity refers to the reliability of the data in a broad sense, tracking

the control over the custody of the preserved information. To validate the authenticity of

a preserved data object, provenance is needed, i.e., the documented history of

creation, ownership, accesses, and changes that have occurred over time for a

given data object. A means is also needed to guarantee that the data is whole and

uncorrupted (integrity). The maintenance of the original bit stream is not

always necessary or possible; the goal is completeness of the intellectual form

(meaning). For example, two Word documents may be considered to deliver the

same meaning if they are composed of the same character sequence yet use different

fonts (hence different bit streams).
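The distinction between bit-level identity and identity of intellectual content can be made concrete with a small sketch. The two "documents" below are hypothetical stand-ins (a font name plus a character sequence), and the serialization is an assumption made only to obtain two different bit streams; no real word-processor format is modelled.

```python
import hashlib


def bit_fixity(data: bytes) -> str:
    """Digest of the raw bit stream."""
    return hashlib.sha256(data).hexdigest()


def content_fixity(text: str) -> str:
    """Digest of the character sequence only, ignoring presentation."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def serialize(doc: dict) -> bytes:
    """Toy serialization: the font is stored alongside the text."""
    return f"{doc['font']}\n{doc['text']}".encode("utf-8")


doc_a = {"font": "Times", "text": "Preserve the meaning."}
doc_b = {"font": "Arial", "text": "Preserve the meaning."}

same_bits = bit_fixity(serialize(doc_a)) == bit_fixity(serialize(doc_b))
same_content = content_fixity(doc_a["text"]) == content_fixity(doc_b["text"])
```

Here `same_bits` is false while `same_content` is true, which is the situation the Word example describes.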

Defining and

assessing authenticity is a complex task, which includes defining roles,

policies, components, and protocols for the custodial function. To enable

future assessment, a pre-requirement is the preservation of the authenticity

documentation that includes the provenance data.

To address

authenticity and provenance in the context of LTDP we have developed a novel

model that aims to ensure the identity and integrity of a digital object. Since authenticity is not a binary attribute,

our model enables evaluating the degree of authenticity. A key aspect of our approach is identifying

the set of attributes that are relevant to evaluating authenticity. Another key

aspect is a conceptual model to describe the dynamic profile of

authenticity. The process of protecting

and assessing the authenticity of a digital object is driven by an authenticity

protocol applied to a set of digital objects. The authenticity protocol is composed of authenticity

steps, each of which is independently executable. The authenticity steps

are organized in a workflow, which defines the order of their execution. Different types of authenticity steps

reference the different elements of the PDI in the AIP as defined by OAIS. A

report on the evaluation results can be used (by a human or other actor) to

evaluate the data authenticity. The overall model ties together these aspects

along with additional components to provide an approach to managing and

assessing authenticity for data subject to long term digital preservation.

Architecting and

implementing a sound, consistent, and efficient LTDP system that supports

authenticity and provenance is a challenge.

Preservation DataStores (PDS) is our OAIS-based preservation aware

storage [3,4] designed to serve as the storage component of a digital

preservation system. It has built-in support for bit and logical preservation.

PDS is aware of the OAIS-based preservation objects that it stores and can

offload functions traditionally performed by applications. These functions

include handling metadata, calculating and validating fixity, documenting

provenance events, managing the RepInfo of the PDI, and validating referential

integrity. PDS supports loading and execution of storlets, which are

execution modules for performing data intensive functions such as data

transformations and fixity calculations close to the data. Another important

feature is the physical co-location of data and metadata, which ensures that

metadata is not lost if raw data survives. Related AIPs are also co-located on

the same media. These features allow PDS to support both bit and logical

preservation, including authenticity management.

Our Preservation DataStores realizes the authenticity model by moving

knowledge of provenance and other related metadata to the storage system. In particular, PDS tracks events related to

ensuring the identity and integrity of the data through direct implementation of

the OAIS concepts of fixity (integrity) and provenance. Further, PDS can trigger the automatic

execution of the authenticity protocol (events the storage is aware of), and it

supports the manual execution for external events (e.g., the change of ownership

of an object). Finally, PDS ties internal

changes that impact authenticity, e.g., format transformations executed via

storlets, to the authenticity model, automatically making the relevant updates

to the OAIS PDI. Supporting the authenticity model at the storage layer results

in an optimized, robust and secure preservation environment, which can provide

a stronger ability to assess the authenticity of a data object.
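The coupling between a transformation and the PDI can be sketched as follows. This is not the PDS storlet interface, which is not shown in this paper; the dictionary layout, the function names, and the choice of SHA-256 are all assumptions made for illustration. The point is only that the transformation, the new fixity value, and the provenance event are produced together.

```python
import hashlib
from datetime import datetime, timezone


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def transform_aip(aip: dict, transform, description: str) -> dict:
    """Apply `transform` to the data and return a new AIP dict whose PDI
    records the event and carries the recomputed fixity."""
    old_data = aip["data"]
    new_data = transform(old_data)
    event = {
        "type": "transformation",
        "description": description,
        "time": datetime.now(timezone.utc).isoformat(),
        "fixity_before": sha256_hex(old_data),
        "fixity_after": sha256_hex(new_data),
    }
    pdi = aip["pdi"]
    return {
        "data": new_data,
        "pdi": {
            **pdi,
            "fixity": {"sha256": event["fixity_after"]},
            # The earlier provenance is kept; the new event is appended.
            "provenance": pdi["provenance"] + [event],
        },
    }


def fixity_is_valid(aip: dict) -> bool:
    """Check the stored digest against the current data."""
    return sha256_hex(aip["data"]) == aip["pdi"]["fixity"]["sha256"]


original = {
    "data": b"hello",
    "pdi": {"fixity": {"sha256": sha256_hex(b"hello")}, "provenance": []},
}
# bytes.upper stands in for a real format migration.
migrated = transform_aip(original, bytes.upper, "hypothetical format migration")
```

Because the input dictionary is not modified, the pre-transformation state remains available for comparison.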

To summarize, our

work has two main contributions:

- A novel model for managing authenticity in long term digital preservation systems

- An implementation of a preservation-aware storage system that integrates the concept of long term provenance.

The model is being implemented as part of the

CASPAR OAIS framework. CASPAR [2] (Cultural Artistic and Scientific knowledge

for Preservation, Access and Retrieval) is an FP7 EU project that aims to

demonstrate the validity of the OAIS model with different data sets.

The rest of this

paper is structured as follows. Section

2 presents related work. Section 3

presents our model and section 4 our implementation. We conclude in section 5.

2. Related Work

Among the papers most relevant to

our focus on authenticity and provenance we mention the provenance-aware

storage system (PASS) [5] and a later work by the same group on data modeling

for provenance [6]. The former [5] describes a technology that tracks the

provenance of data at the file system level, and does not employ an auxiliary

database for provenance management. The idea of offloading storage related

activities to the storage level is similar to our PDS work. However, PDS also supports documenting provenance events external to

the storage, as well as provenance events that are logical in nature.

In the

later work [6], the authors argue that due to the common ancestry relations of

provenance data, these data naturally form a directed graph. Hence, provenance

data and query models should address this structure in a natural manner. A

semi-structured data model with a special query language (PQL, which extends

Lorel) was used, taken from the object oriented database community. Currently, PDS does not support querying the provenance contents.

Provenance was addressed in

the context of scientific workflows [7]. The authors suggest that a workflow

should systematically and automatically record provenance information for later

use. The proposed solution provides a data capturing mechanism, a data model,

and an infrastructure for ingest, access and query. The authors distinguish

between prospective and retrospective provenance: the former captures the computational

task, the steps (algorithm) needed to (re)create the data, whereas the latter

captures the actual steps executed. According to [7], provenance is not limited

to people who handle the data, but may also be attributed to processes as well

as recipes for data regeneration. In PDS we implement this view.

With regard to the more general storage aspects of digital preservation,

previous works [8,9,10] address authentication as well as security issues. Some works [8] explore the needs

of long-term storage and present a reliability model and associated strategies

and architectures. Most of the previous works focus on bit preservation

(maintaining bit integrity) and less on logical preservation (preserving the

meaning or understandability of the data). The focus of PDS is on logical

preservation.

The e-depot digital archiving

system of the National Library of the Netherlands (KB) [11] is built on the

Digital Information Archiving System (DIAS). Similar to our PDS work, the

e-depot library conforms to the OAIS standard, and addresses both bit and

logical preservation. Unlike DIAS, which stores some

provenance-related metadata separately from the data, PDS co-locates the data

and metadata. This improves the chances of data/metadata co-survival, assuming

that the survival of one without the other is useless.

3. Managing Authenticity

3.1 Key Concept

Authenticity is a fundamental

issue for the long-term preservation of digital objects: the relevance of

authenticity as a preliminary and central requirement has been thoroughly

investigated by many international projects, some focused on long-term

preservation of authentic digital records

in the e-government environment, and in scientific and cultural domains[2]

[12]; some devoted to the identification of criteria and responsibilities for

the development of trusted digital repositories [13].

Defining and assessing

authenticity are complex tasks and imply a number of theoretical and

operational/technical activities. These include a clear definition of roles

involved, coherent development of recommendations and policies for building

trusted repositories, and precise identification of each component of the

custodial function. Thus it is crucial to define the key conceptual elements

that provide the foundation for such a complex framework: we need to define

how, and on what basis authenticity has to be managed in the digital

preservation processes in order to ensure the trustworthiness of digital objects.

One of the founding concepts

for the development of a theory on authenticity is that in most cases digital

objects cannot be preserved without any change in the bit stream, and we have

to modify the original object to have the ability to reproduce it in the future.

Unfortunately, this runs counter to the assumption that preserving authenticity

implies retaining the identity and integrity of a digital object, i.e., free

from tampering or corruption. It is a sort of paradox, where preservation

entails change, while authenticity needs fixity.

Authenticity cannot be

recognised as given – once and forever – within a digital environment. This

point implies that a clear distinction should be made between the authenticity

of a preserved resource – not necessarily the original one ingested in the

repository – and the procedure of validating

that resource; the latter is a part of a more general process aimed at assuring

that an information object will be kept as

an original one i.e., reliable, trustworthy, and sound.

The authenticity

of digital resources is threatened whenever they are exchanged between users,

systems or applications, or any time technological obsolescence requires

updating or replacing the hardware or software used to store, process, or

communicate them. Therefore, the preserver’s inference of the authenticity of digital resources must be

supported by evidence provided in association with the resources through their documentation,

by tracing the history of the various migrations and treatments

that have occurred over time. Evidence is also needed to prove that the

digital resources have been maintained using technologies and administrative

procedures that either guarantee their continuing identity and integrity

or at least minimize risks of change from the time the resources were first

set aside to the point at which they are subsequently accessed.

In conclusion, authenticity is never limited

to the resource itself, but is rather extended

to the information/document/record system, and thus to the concept of reliability: authenticity is concerned

with ongoing control over information/document/record creation process and

custody. The verification of the authenticity of a resource is related to the

reliability of the system/resource, and this reliability is crucially based

upon complete documentation of both the creation process and the chain of

preservation.

3.2 Integrity and Identity

Authenticity is established by assessing the integrity and identity of the resource.

Integrity

The integrity of a resource refers to its wholeness. A resource has integrity when it is complete and

uncorrupted in all its essential respects. The verification process should

analyse and ascertain that the essential characteristics of an object are

consistent with the inevitable changes brought about by technological obsolescence. The maintenance of

the bit flow is not always necessary or possible, but the original ability to

convey meaning (e.g., maintenance of colours in a map, columns in a spreadsheet

etc.) must be preserved. In other words, the physical integrity of a resource

(i.e., the original bit stream) can be compromised, but the content structure

and the essential components must remain the same. So, the critical issue with

reference to integrity is to identify the relevant characteristics of a

resource. This means understanding the nature of the resource, analysing its

features, and evaluating their role so as to establish what kinds of changes are

allowed without loss of integrity.

Identity

The identity of a resource is

understood in a very broad sense, not only as its unique designation and/or

identification. Identity refers to the whole of the characteristics of a resource that uniquely

identify it and distinguish it from any other resource. In addition to its internal conceptual structure, it

refers to its general context (e.g., legal, technological). From this point of view, identity is strongly

related to PDI: Context, Provenance, Fixity, and Reference Information as

defined in OAIS help to understand the environment of a resource. This

information has to be gathered, maintained, and interpreted altogether – as

much as possible – as a set of relationships defining the resource itself: a

resource is not an isolated entity with defined borders and autonomous life, it

is not just a single object; a resource is an object in the context, it is both the object itself and the relationships

that provide complete meaning to it. As a matter of fact, these relationships

change over time, so we need not only to understand them and make them explicit

but also to document them to have a complete history of the resource: any gap in

this history loses part of the identity of the resource, with consequences

for its authenticity.

3.3 Authenticity Management Tools

Authenticity management tools

have to monitor and manage protocols

and procedures across the custody chain to deliver the benefits of authenticity

into the information system, from creation to preservation.

In general, authenticity cannot be evaluated by means

of a boolean flag telling us whether a resource is authentic or not. The

evaluation should instead assess the degree

of authenticity: certainty about authenticity is a goal, and cases of full certainty are

edge cases. Mechanisms and tools for

managing authenticity have to be designed keeping in mind possible alterations,

corruption, lack of significant data and so on, and we need tools, mechanisms

and weights to understand their

relevance and their impact on authenticity. The consequence is that ensuring

authenticity means providing a proper set of attributes related to content and

context, and verifying/checking (possibly against metrics) the completeness or

the alteration of this set.
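One possible reading of "degree of authenticity" is a weighted share of the attributes that could be verified. The scoring rule below is an assumption introduced purely for illustration; the model does not prescribe this formula, and the attribute names and weights are hypothetical.

```python
def authenticity_degree(weights: dict[str, float], verified: dict[str, bool]) -> float:
    """Weighted fraction of attributes that were verified.

    `weights` gives the relevance of each attribute; attributes absent from
    `verified` count as unverified (missing evidence)."""
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("at least one attribute must carry a positive weight")
    achieved = sum(w for name, w in weights.items() if verified.get(name, False))
    return achieved / total


# Hypothetical attribute set for one object type.
weights = {"author": 3, "creation_date": 1, "fixity": 4, "custody_chain": 2}
verified = {"author": True, "fixity": True, "custody_chain": False}

degree = authenticity_degree(weights, verified)  # 7 of 10 weight units verified
```

A result strictly between 0 and 1 reflects the expected common case, with full certainty as the edge case.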

Authenticity management tools

have to identify mechanisms for ensuring the maintenance and verification of

the authenticity in terms of identity

and integrity of the digital objects

by providing content and contextual information during the whole preservation

process. The most critical issues are the right attribution of authorship, the identification of provenance in the life cycle of digital

resources, the assurance of content

integrity of the digital components and their relevant contextual relationships, and the

provision of mechanisms to allow future users to verify the authenticity of the

preserved objects or at least to provide the capability of evaluating their

reliability in terms of authenticity presumption. Any event producing a change of the object has to be described

and documented at every stage in the life cycle to have, at any time, a sort of

authenticity card for any object in

the repository: the crucial point is to clearly state that the identity of an

object resides not only in its internal structure and content but also – and

maybe mostly – in its complex system of relationships, so that a change of the

object refers not only to a change of the bits of the object but also to

something around it and that anyway contributes to its identity i.e., to its

authenticity.

According to the above

requirements, defining a strategy for managing authenticity means:

- Identifying a set of attributes that capture the information relevant to authenticity, as it can be collected along the life cycle of objects belonging to different domains

- Developing a conceptual model to describe the dynamic profile of authenticity, i.e., to describe it as a process aimed at gathering, protecting, and/or evaluating information mainly about identity and integrity.

3.4 Elements of the Conceptual Model

Authenticity Protocol (AP)

The protection and assessment

of the authenticity of digital objects is a process. To manage this process, we

need to define the procedures to follow.

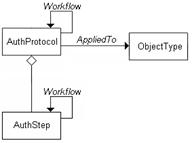

We call one of these

procedures an Authenticity Protocol (AP). An AP is a set of interrelated

steps; each called Authenticity Step (AS). An AP is applied to an Object Type, i.e., to a class of objects

with uniform features for the application of an AP. Any AP may be recursively

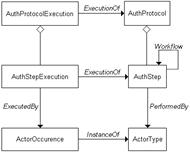

used to design other APs, as expressed by the general Workflow relation. See figure 2.

Figure 2 - Elements of the model

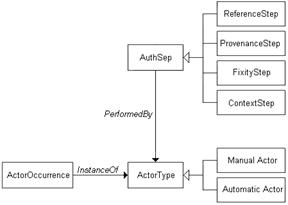

Authenticity Step (AS)

Every AS models a part of an

AP that can be executed independently as a whole, and constitutes a significant

phase of the AP from the authenticity assessment point of view. The

relationships amongst the steps of an AP establish the order in which the steps

must be executed in the context of an execution of the protocol. To model these

relationships, we can use any workflow model, denoted as Workflow. An AS

is performed by an Actor Type, a class of either human or non-human

agents instantiated through the Actor

Occurrence class. The Actor Type

is a generalization of Automatic Actor and Manual Actor (hardware/software and human).

There can be

several types of ASs. Following OAIS, we distinguish Steps based on the kind of PDI involved in the AS. Consequently, we

have four types of steps: Reference Step,

Provenance Step, Fixity Step, and Context Step.

Any kind of analysis performed on the object can be traced to either one of

these steps or a proper combination of them. See figure 3.

Figure 3 - Authenticity Step
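The relation between an AP, its steps, and the Workflow can be sketched in code. Since the model allows any workflow model, the sketch assumes the simplest one, a precedence graph, and orders the steps with the standard-library `graphlib.TopologicalSorter`. All class, field, and step names are hypothetical.

```python
from dataclasses import dataclass, field
from enum import Enum
from graphlib import TopologicalSorter


class StepType(Enum):
    """The four step types, one per kind of PDI."""
    REFERENCE = "reference"
    PROVENANCE = "provenance"
    FIXITY = "fixity"
    CONTEXT = "context"


@dataclass(frozen=True)
class AuthenticityStep:
    name: str
    step_type: StepType
    actor_type: str  # "automatic" or "manual"


@dataclass
class AuthenticityProtocol:
    object_type: str
    steps: dict[str, AuthenticityStep]
    # Workflow: step name -> names of the steps that must run before it.
    workflow: dict[str, set[str]] = field(default_factory=dict)

    def execution_order(self) -> list[str]:
        graph = {name: self.workflow.get(name, set()) for name in self.steps}
        return list(TopologicalSorter(graph).static_order())


protocol = AuthenticityProtocol(
    object_type="scanned map",
    steps={
        "check_id": AuthenticityStep("check_id", StepType.REFERENCE, "automatic"),
        "verify_checksum": AuthenticityStep("verify_checksum", StepType.FIXITY, "automatic"),
        "review_custody": AuthenticityStep("review_custody", StepType.PROVENANCE, "manual"),
    },
    workflow={
        "verify_checksum": {"check_id"},
        "review_custody": {"verify_checksum"},
    },
)
```

For this linear workflow, `protocol.execution_order()` yields the three steps in the order they were declared; a cyclic workflow would raise `graphlib.CycleError`.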

Since an AS involves an

analysis and evaluation, we need at least information about:

- Good practices, methodologies and any kind of regulations that must be followed or can help in the analysis and evaluation

- Possibly the criteria that must be satisfied in the evaluation.

Authenticity Protocol

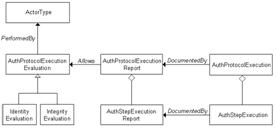

Execution (APE)

APs are executed by an actor

on objects that belong to a specific typology. The execution of an AP is

modelled as an Authenticity Protocol Execution (APE). See figure 4.

Figure 4 - Authenticity Protocol Execution

An APE is related to an AP via

the ExecutionOf association and

consists of a number of Authenticity Step

Executions (ASEs). Every ASE, in turn, is related to the AS via an

association analogous to the ExecutionOf

association, and contains the information about the execution, including:

- the actor who did the execution

- the information which was used

- the time, place, and context of execution.

Every ASE is executed by an Actor Occurrence, i.e., an instantiation

of the Actor Type.

Authenticity Report

Different types of ASEs have

different structures and the outcomes of the executions must be documented to

gather information related to specific aspects of the object, e.g., title,

extent, dates, and transformations. An Authenticity

Step Execution Report simply documents that the step has been done – via

the Documented By relation – and

collects all the values associated with the data elements analysed in a

specific ASE. The report provides a complete set of information upon which an

entitled actor (human or application) can build a judgment, an Authenticity Protocol Execution Evaluation,

which states an evaluation regarding the authenticity of the resource,

referring to both its identity and integrity profile. See figure 5.

Figure 5 - Authenticity Protocol Execution Evaluation
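The chain from step executions to reports to an evaluation can be sketched as follows. The record layout and the deliberately simple `evaluate` summary are assumptions for illustration; in the model the evaluation is a judgment made by an entitled actor, which a summary like this could only support, not replace.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class StepExecutionReport:
    """Documents one Authenticity Step Execution (ASE)."""
    step_name: str
    actor: str                 # the Actor Occurrence that ran the step
    executed_at: datetime
    values: dict[str, str]     # data elements analysed in this step
    passed: bool


@dataclass
class ProtocolExecution:
    """One Authenticity Protocol Execution (APE) with its step reports."""
    protocol_name: str
    reports: list[StepExecutionReport] = field(default_factory=list)

    def record(self, step_name: str, actor: str,
               values: dict[str, str], passed: bool) -> StepExecutionReport:
        report = StepExecutionReport(
            step_name, actor, datetime.now(timezone.utc), dict(values), passed
        )
        self.reports.append(report)
        return report


def evaluate(execution: ProtocolExecution) -> dict:
    """Summarise the reports so that an actor can build a judgment on them."""
    failed = [r.step_name for r in execution.reports if not r.passed]
    return {"steps_run": len(execution.reports), "failed_steps": failed}


ape = ProtocolExecution("map-ingest-protocol")
ape.record("verify_checksum", "fixity-service", {"sha256": "match"}, True)
ape.record("review_custody", "archivist-17", {"owner": "unknown"}, False)
summary = evaluate(ape)
```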

Event

Authenticity should be

monitored continuously so that any time a resource is somehow changed or a

relationship is modified, an Authenticity

Protocol can be activated and executed in order to verify the permanence of

the resource’s relevant features that guarantee its authenticity.

Any event impacting on a

resource – and specifically on a certain type of a resource – should trigger

the execution of an adequate protocol: the Authenticity

Protocol Execution is triggered by

an Event Occurrence, i.e., the

instantiation of an Event Type that

identifies any act and/or fact related to a specific Authenticity Protocol.
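The triggering relation between an Event Occurrence and an Authenticity Protocol Execution can be sketched as a simple registry. The class name, the event type strings, and the protocol callables are hypothetical; the sketch only shows that each event type is bound to the protocols it should activate.

```python
class ProtocolTriggers:
    """Maps an Event Type to the protocols that must run when it occurs."""

    def __init__(self):
        self._by_event = {}

    def register(self, event_type, protocol):
        """Bind a protocol (a callable taking an object id) to an event type."""
        self._by_event.setdefault(event_type, []).append(protocol)

    def on_event(self, event_type, object_id):
        """Handle an Event Occurrence: run every bound protocol and
        return the results of the resulting executions."""
        return [p(object_id) for p in self._by_event.get(event_type, [])]


def transformation_protocol(object_id):
    # Stand-in for a real protocol execution.
    return {"object": object_id, "protocol": "post-transformation checks"}


triggers = ProtocolTriggers()
triggers.register("format_transformation", transformation_protocol)
results = triggers.on_event("format_transformation", "aip-42")
```

An event type with no registered protocol simply produces an empty list of executions.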

Authenticity Protocol History

The authenticity of a resource

is strongly related to the criteria and procedures adopted to analyse and

evaluate it: the evolution of the Authenticity

Protocols over time should be documented – via the Documented By relation – in an Authenticity

Protocol History. The evolution of an AP may concern the addition, removal

or modification of any step making up the AP, and the change of the sequence

defining the Workflow. In any case

both the old and the new step and/or sequence must be retained for

documentation purposes. When an AS of an AP is changed, all the executions of

the AP that include an ASE related to the changed step, must be revised, and

possibly a new execution is required for the new (modified) step.
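The retention and revision rules above can be sketched as follows. The representation of a protocol version as a mapping from step name to step definition is an assumption made for brevity, and changes to the Workflow sequence are not modelled; all names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class ProtocolHistory:
    """Keeps every version of a protocol; old versions are never discarded."""
    versions: list[dict[str, str]] = field(default_factory=list)

    def add_version(self, steps: dict[str, str]) -> set[str]:
        """Retain the new version and return the names of the steps that
        were added, removed or modified relative to the previous one."""
        previous = self.versions[-1] if self.versions else {}
        changed = {
            name
            for name in previous.keys() | steps.keys()
            if previous.get(name) != steps.get(name)
        }
        self.versions.append(dict(steps))
        return changed


def executions_to_revise(executions: dict[str, list[str]], changed: set[str]) -> list[str]:
    """Executions that include a step execution related to a changed step."""
    return sorted(eid for eid, steps in executions.items() if changed.intersection(steps))


history = ProtocolHistory()
history.add_version({"check_id": "compare identifier", "verify_checksum": "MD5 digest"})
changed = history.add_version(
    {"check_id": "compare identifier", "verify_checksum": "SHA-256 digest"}
)
past_executions = {"ape-1": ["check_id", "verify_checksum"], "ape-2": ["check_id"]}
to_revise = executions_to_revise(past_executions, changed)
```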

See figure 6 for the overall

authenticity model.

4. Authenticity and Provenance in PDS

As a preservation aware

storage, PDS has built-in support for handling metadata, including provenance

data. Provenance data receive special attention, as they are crucial for the

future usability of the content data.