The second, object-oriented, version of the example illustrates LINQ's use of C#'s lambda expressions. The Join method, for example, takes as arguments a dataset to perform the Join against (in this case staticRank) and three functions. The first two functions describe how to determine the keys that should be used in the Join. The third function describes the Join function itself. Note that the compiler performs static type inference to determine the concrete types of var objects and anonymous lambda expressions so the programmer need not remember (or even know) the type signatures of many subexpressions or helper functions.
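The shape of such a call can be sketched with ordinary in-memory LINQ. This is a minimal illustration, not the paper's example: the `scores` collection, the tuple fields, and the weighting formula are hypothetical stand-ins, and only the name `staticRank` comes from the text above.

```csharp
using System;
using System.Linq;

// Hypothetical in-memory stand-ins for the two datasets being joined.
var scores = new[]
{
    (Url: "a.example", Score: 0.5),
    (Url: "b.example", Score: 0.25),
};
var staticRank = new[]
{
    (Url: "a.example", Rank: 3),
    (Url: "b.example", Rank: 8),
};

// Join takes the dataset to join against plus three lambdas:
// the outer key selector, the inner key selector, and the result selector.
// The compiler infers every lambda parameter type and the element type of `joined`.
var joined = scores.Join(
        staticRank,
        s => s.Url,                                      // key of the outer element
        r => r.Url,                                      // key of the inner element
        (s, r) => (s.Url, Weighted: s.Score * r.Rank))   // how to combine a matching pair
    .ToList();

foreach (var row in joined)
    Console.WriteLine($"{row.Url}: {row.Weighted}");
```

No type names appear in the query itself; `var` and the lambdas are resolved statically, which is the inference the paragraph above describes.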


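```csharp
// Open a partitioned input table, identified by its URI.
var input = GetTable<LineRecord>("file://in.tbl");
// Build the query; the remaining arguments are elided in the original.
var result = MainProgram(input, ...);
// Write the query result to a partitioned output table.
var output = ToDryadTable(result, "file://out.tbl");
```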

Tables are referenced by URI strings that indicate the storage system to use as well as the name of the partitioned dataset.

Variants of ToDryadTable can simultaneously invoke multiple expressions and generate multiple output DryadTables in a single distributed Dryad job. This feature (also encountered in parallel databases such as Teradata) can be used to avoid recomputing or materializing common subexpressions.

DryadLINQ offers two data re-partitioning operators: HashPartition<T,K> and RangePartition<T,K>. These operators are needed to enforce a partitioning on an output dataset, and they may also be used to override the optimizer's choice of execution plan. From a LINQ perspective, however, they are no-ops, since they just reorganize a collection without changing its contents. The system allows the implementation of additional re-partitioning operators, but we have found these two to be sufficient for a wide class of applications.
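The "no-op" view can be illustrated with a small in-memory sketch. The helper `HashPartitionSketch` below is hypothetical and is not the DryadLINQ HashPartition<T,K> operator; it only shows, under that assumption, that hashing on a key regroups the elements without adding, dropping, or changing any of them.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical helper: split a sequence into `count` buckets by hashing a key.
// Elements with equal keys always land in the same bucket.
static List<List<T>> HashPartitionSketch<T, K>(
    IEnumerable<T> source, Func<T, K> keySelector, int count)
{
    var buckets = Enumerable.Range(0, count).Select(_ => new List<T>()).ToList();
    foreach (var item in source)
    {
        // Mask off the sign bit so the bucket index is never negative.
        int hash = EqualityComparer<K>.Default.GetHashCode(keySelector(item)) & int.MaxValue;
        buckets[hash % count].Add(item);
    }
    return buckets;
}

var words = new[] { "apple", "banana", "cherry", "apple", "banana" };
var partitions = HashPartitionSketch(words, w => w, 3);

// Flattening the partitions yields the same multiset of elements as the input.
bool sameContents = partitions.SelectMany(p => p).OrderBy(w => w)
    .SequenceEqual(words.OrderBy(w => w));
Console.WriteLine(sameContents); // True
```

Which bucket a given word lands in depends on the runtime's string hash, so only the grouping property, not the bucket assignment, is meaningful here.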

The remaining new operators are Apply and Fork, which can be thought of as an "escape hatch" that a programmer can use when a computation cannot be expressed using any of LINQ's built-in operators. Apply takes a function f and passes to it an iterator over the entire input collection, allowing arbitrary streaming computations. As a simple example, Apply can be used to perform "windowed" computations on a sequence, where the ith entry of the output sequence is a function of the input values in the range [i,i+d] for a fixed window of length d. The applications of Apply are much more general than this, and we discuss them further in Section 7.
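A windowed computation of this kind can be sketched as a plain C# iterator, the sort of streaming function that could be handed to Apply. The name `WindowSums` and the choice of summation are hypothetical, and the sketch assumes the range [i,i+d] is inclusive (so each window holds d+1 values) and that only full windows produce output.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical streaming function: consumes an iterator over the whole input
// and yields, for each position i with a full window, the sum of input[i..i+d].
static IEnumerable<int> WindowSums(IEnumerable<int> source, int d)
{
    var window = new Queue<int>();
    int sum = 0;
    foreach (var value in source)
    {
        window.Enqueue(value);
        sum += value;
        if (window.Count > d + 1)
            sum -= window.Dequeue();   // drop the value that slid out of the window
        if (window.Count == d + 1)
            yield return sum;          // the window [i, i+d] is complete
    }
}

var sums = WindowSums(new[] { 1, 2, 3, 4, 5 }, 2).ToList();
Console.WriteLine(string.Join(", ", sums)); // 6, 9, 12
```

The function keeps only d+1 values in memory at a time, so it streams over the input rather than buffering the whole collection.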

The Fork operator is very similar to Apply except that it takes a single input and generates multiple output datasets. This is useful as a performance optimization to eliminate common subcomputations, for example to implement a document parser that outputs both plain text and a bibliographic entry to separate tables.
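The document-parser case can be sketched in memory as a single pass that fills two outputs. Everything here is hypothetical: the `title|author|body` record format, the `ParseDocuments` name, and the tuple return type are assumptions for illustration and do not reflect the actual Fork signature.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical one-input, two-output function: each document is parsed once,
// and the parse result feeds both the plain-text and the bibliographic output.
static (List<string> Text, List<(string Title, string Author)> Bib) ParseDocuments(
    IEnumerable<string> documents)
{
    var text = new List<string>();
    var bib = new List<(string Title, string Author)>();
    foreach (var doc in documents)
    {
        // Assumed record format: "title|author|body".
        var fields = doc.Split(new[] { '|' }, 3);
        if (fields.Length != 3)
            continue;                      // skip malformed records
        bib.Add((fields[0], fields[1]));   // bibliographic entry
        text.Add(fields[2]);               // plain text
    }
    return (text, bib);
}

var docs = new[]
{
    "Sorting|Knuth|Sorting and searching are fundamental.",
    "Queues|Lamport|Time, clocks, and ordering.",
};
var parsed = ParseDocuments(docs);
Console.WriteLine($"{parsed.Text.Count} texts, {parsed.Bib.Count} bibliographic entries");
```

Producing both outputs from two separate queries would parse every document twice; sharing the pass is the common-subcomputation saving described above.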

If the DryadLINQ system has no further information about f, Apply (or Fork) will cause all of the computation to be serialized onto a single computer. More often, however, the user supplies annotations on f that indicate conditions under which Apply can be parallelized. The details are too complex to be described in the space available, but a quite general form of "conditional homomorphism" is supported: the application can specify conditions on the partitioning of a dataset under which Apply can be run independently on each partition. DryadLINQ will automatically re-partition the data to match the conditions if necessary.
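The idea of a condition on the partitioning can be illustrated with an in-memory sketch. The function `TotalsByKey` and the sample records are hypothetical; the sketch assumes the condition "all records with the same key are in the same partition" and shows that running the function per partition matches the single-computer result only when that condition holds.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical function f: per-key totals over an entire collection.
static IEnumerable<(int Key, int Total)> TotalsByKey(IEnumerable<(int Key, int Value)> records) =>
    records.GroupBy(r => r.Key).Select(g => (g.Key, Total: g.Sum(r => r.Value)));

var records = new[]
{
    (Key: 1, Value: 10), (Key: 2, Value: 5), (Key: 1, Value: 7),
    (Key: 2, Value: 1), (Key: 3, Value: 4),
};

// Reference result: f applied to the whole input, as on a single computer.
var whole = TotalsByKey(records).OrderBy(t => t.Key).ToList();

// Condition satisfied: partitioned by key, so equal keys share a partition.
var byKey = records.GroupBy(r => r.Key % 2).Select(g => g.ToList()).ToList();
var perPartition = byKey.SelectMany(p => TotalsByKey(p)).OrderBy(t => t.Key).ToList();
Console.WriteLine(whole.SequenceEqual(perPartition)); // True

// Condition violated: arbitrary chunks split keys 1 and 2 across partitions,
// so each chunk reports its own partial total for those keys.
var chunks = new[] { records.Take(2), records.Skip(2) };
var broken = chunks.SelectMany(c => TotalsByKey(c)).ToList();
Console.WriteLine(broken.Count); // 5 partial totals instead of 3
```

In the second case a re-partitioning step by key, of the kind the text says DryadLINQ inserts automatically, would restore the condition before f runs.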

DryadLINQ allows programmers to specify annotations of various kinds. These provide manual hints to guide optimizations that the system is unable to perform automatically, while preserving the semantics of the program. As mentioned above, the Apply operator makes use of annotations, supplied as simple C# attributes, to indicate opportunities for parallelization. There are also Resource annotations that distinguish functions requiring constant storage from those whose storage grows with the input collection size; the optimizer uses these to determine buffering strategies and to decide when to pipeline operators in the same process. The programmer may also declare that a dataset has a particular partitioning scheme if the file system does not store sufficient metadata to determine this automatically.

The DryadLINQ optimizer produces good automatic execution plans for most programs composed of standard LINQ operators, and annotations are seldom needed unless an application uses complex Apply statements.


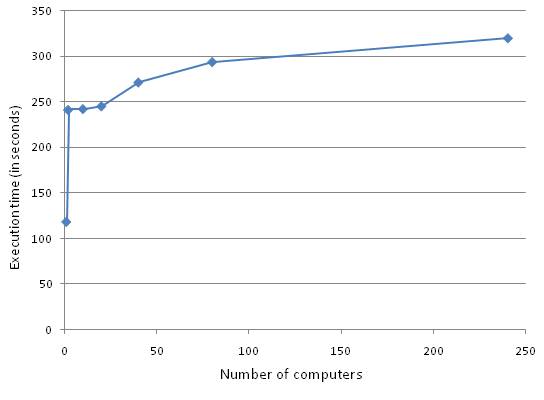

| Computers | 1 | 2 | 10 | 20 | 40 | 80 | 240 |
|---|---|---|---|---|---|---|---|
| Time | 119 | 241 | 242 | 245 | 271 | 294 | 319 |


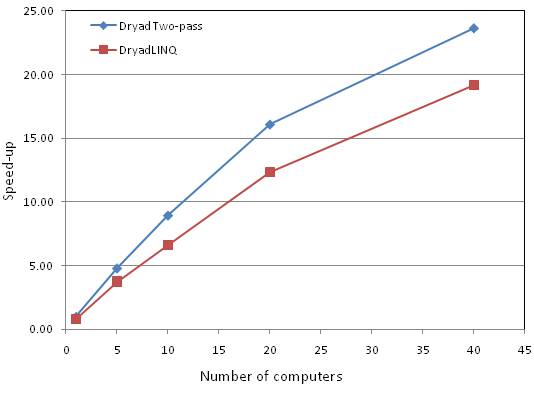

| Computers | 1 | 5 | 10 | 20 | 40 |
|---|---|---|---|---|---|
| Dryad | 2167 | 451 | 242 | 135 | 92 |
| DryadLINQ | 2666 | 580 | 328 | 176 | 113 |
