![\includegraphics[width=1.7in,totalheight=1.6in]{Figures/final-naive.2.eps}](img3.png)

![\includegraphics[width=1.7in,totalheight=1.6in]{Figures/final-vert.2.eps}](img4.png)

![\includegraphics[width=1.7in,totalheight=1.6in]{Figures/final-full.2.eps}](img5.png)

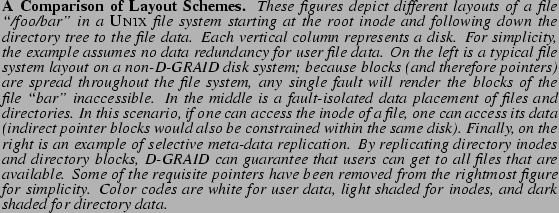

To ensure partial availability of data under multiple failures in a RAID array, D-GRAID employs two main techniques. The first is a fault-isolated data placement strategy, in which D-GRAID places each ``semantically-related set of blocks'' within a ``unit of fault containment'' found within the storage array. For simplicity of discussion, we assume that a file is a semantically-related set of blocks, and that a single disk is the unit of fault containment. We will generalize the former below, and the latter is easily generalized if there are other failure boundaries that should be observed (e.g., SCSI chains). We refer to the physical disk to which a file belongs as the home site for the file. When a particular disk fails, fault-isolated data placement ensures that only files that have that disk as their home site become unavailable, while other files remain accessible as whole files.

The second technique is selective meta-data replication, in which D-GRAID replicates naming and system meta-data structures of the file system to a high degree, e.g., directory inodes and directory data in a UNIX file system. D-GRAID thus ensures that all live data is reachable and not orphaned due to failure. The entire directory hierarchy remains traversable, and the fraction of missing user data is proportional to the number of failed disks.

Thus, D-GRAID lays out logical file system blocks in such a way that the availability of a single file depends on as few disks as possible. In a traditional RAID array, this dependence set is normally the entire set of disks in the group, thereby leading to entire file system unavailability under an unexpected failure. A UNIX-centric example of typical layout, fault-isolated data placement, and selective meta-data replication is depicted in Figure 1. Note that for the techniques in D-GRAID to work, a meaningful subset of the file system must be laid out within a single D-GRAID array. For example, if the file system is striped across multiple D-GRAID arrays, no single array will have a meaningful view of the file system. In such a scenario, D-GRAID can be run at the logical volume manager level, viewing each of the arrays as a single disk; the same techniques remain relevant.

Because D-GRAID treats each file system block type differently, the traditional RAID taxonomy is no longer adequate in describing how D-GRAID behaves. Instead, a finer-grained notion of a RAID level is required, as D-GRAID may employ different redundancy techniques for different types of data. For example, D-GRAID commonly employs n-way mirroring for naming and system meta-data, whereas it uses standard redundancy techniques, such as mirroring or parity encoding (e.g., RAID-5), for user data. Note that n, a value under administrative control, determines the number of failures under which D-GRAID will degrade gracefully. In Section 4, we will explore how data availability degrades under varying levels of namespace replication.