|

||||||||||||||

Chunk naming - If chunks are named using the original URL, all of a file's chunks will share the same name, and will be routed similarly since CDNs hash URLs for routing (31,16). Since we want to spread chunks across the CDN, we must use a different chunk naming scheme. Range caching - We know of no HTTP proxies that cache arbitrary ranges of Web objects, though some can serve ranges from cached objects, and even recreate a full object from all of its chunks. Since browsers are not likely to ask for arbitrary and disjoint pieces of an object, no proxies have developed the necessary support. Since we want to cache at the chunk level instead of the file level, we must address this limitation. Congestion - During periods of bursty demand and heavy synchrony, consistent hashing may produce roving instantaneous congestion. If many clients at different locations suddenly ask for the same file, a lightly-loaded CDN node may see a burst of request. If the clients all ask for another file as soon as the first download completes, another CDN node may become instantly congested. This bursty congestion prevents using the aggregate CDN bandwidth effectively over short time scales.

We address these problems as a whole, to avoid new problems from

piecemeal fixes. For example, adding range caching to the Squid proxy

has been discussed since 1998 (24), but would expand the

in-memory metadata structures, increasing memory pressure, and would

require changing the internet cache protocol (ICP) used by caches to

query each other. Even if we added this support to CoDeeN's proxies,

it would still require extra support in the CDN, since the range

information would have to be hashed along with the URL.

3.2 Chunk Handling Mechanics

We modify intra-CDN chunk handling and request redirection by treating each chunk as a real file with its own name, so the bulk of the CDN does not need to be modified. This name contains the start and end ranges of the file, so different chunks will have different hash values. Only the CDN ingress/egress points are affected, at the boundaries with the client and the origin server.

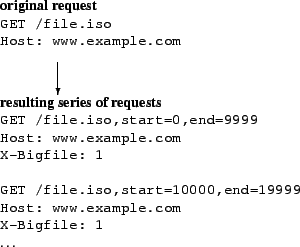

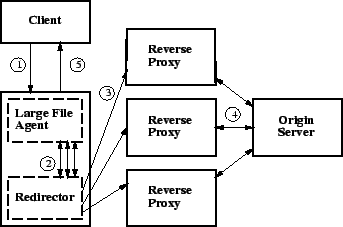

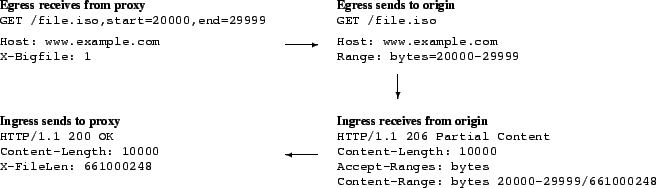

The agent takes the client's request, converts it into a series of requests for chunks, reassembles the responses, and sends it to the client. The client is not aware that the request is handled in pieces, and no browser modifications are needed. This process is implemented in a small program on each CDN node, so communication between it and the CDN infrastructure is cheap. The requests sent into the CDN, shown in Figure 2, contain extended filenames that specify the actual file and the desired byte range, as well as a special header so that the CDN modifies these requests on egress. Otherwise, these requests look like ordinary requests with slightly longer filenames. The full set of steps are shown in Figure 3, where each solid rectangle is a separate machine connected via the Internet. All byte-range interactions take place between the proxy and the origin server - on egress, the request's name is reverted, and range headers are added. The server's response is changed from a HTTP 206 code (partial content received) to 200 (full file received). The underlying proxy never sees the byte-range transformations, so no range-caching support is required. Figure 4 shows this process with additional temporary headers. These headers contain the file length, allowing the agent to provide the content length for the complete download. Having the agent use the local proxy avoids having to reimplement CDN code (such as node liveness, or connection management) in the agent, but can cause cache pollution if the proxy caches all of the agent's requests. The ingress add a cache-control header that disallows local caching, which is removed on egress when the proxy routes the request to the next CDN node. As a result, chunks are cached at the next-hop CDN nodes instead of the local node.

Since the CDN sees a large number of small file requests, it can use

its normal routing, replication, and caching policies. These cached

pieces can then be used to serve future requests. If a node

experiences cache pressure, it can evict as many pieces as needed,

instead of evicting one large file. Similarly, the addition/departure

of nodes will only cause missing pieces to be re-fetched, instead of

the whole file. The only external difference is that the server sees

byte-range requests from many proxies instead of one large file

request from one proxy.

3.3 Agent DesignThe agent is the most complicated part of CoBlitz, since it must operate smoothly, even in the face of unpredictable CDN nodes and origin servers outside our control. The agent monitors the chunk downloads for correctness checking and for performance. The correctness checking consists of issues such as ensuring that the server is capable of serving HTTP byte-range requests, verifying that the response is cacheable, and comparing modification headers (file length, last-modified time, etc) to detect if a file has changed at the origin during its download. In the event of problems, the agent can abort the download and return an error message to the client. The agent is the largest part of CoBlitz - it consists of 770 semicolon-lines of code (1975 lines total), versus 60-70 lines of changes for ingress/egress modifications. To determine when to re-issue chunk fetches, the agent maintains overall and per-chunk statistics during the download. Several factors may slow chunk fetching, including congestion between the proxy and its peers, operational problems at the peers, and congestion between the peers and the origin. After downloading the first chunk, the agent has the header containing the overall file size, and knows the total number of chunks to download. It issues parallel requests up to its limit, and uses non-blocking operations to read data from the sockets as it becomes available. Using an approach inspired by LoCI (3), slow transfers are addressed by issuing multiple requests - whenever a chunk exceeds its download deadline, the agent opens a new connection and re-issues the chunk request. The most recent request for the same chunk is allowed to continue downloading, and any earlier requests for the chunk are terminated. In this way, each chunk can have at most two requests for it in flight from the agent, a departure from LoCI where even more connections are made as the deadline approaches. The agent modifies a non-critical field of the URL in retry requests beyond the first retried request for each chunk. This field is stripped from the URL on egress, and exists solely to allow the agent to randomize the peer serving the chunk. In this way, the agent can exert some control over which peer serves the request, to reduce the chance of multiple failures within the CDN. Keeping the same URL on the first retry attempts to reduce cache pollution - in a load-balanced, replicated CDN, the retry is unlikely to be assigned to the same peer that is handling the original request. The first retry timeout for each chunk is set using a combination of the standard deviation and exponentially-weighted moving average for recent chunks. Subsequent retries use exponential backoff to adjust the deadline, up to a limit of 10 backoffs per chunk. To bound the backoff time, we also have a hard limit of 10 seconds for the chunk timeout. The initial timeout is set to 3 seconds for the first chunk - while most nodes finish faster, using a generous starting point avoids overloading slow origin servers. In practice, 10-20% of chunks are retried, but the original fetch usually completes before the retry. We could reduce retry aggressiveness, but this approach is unlikely to cause much extra traffic to the origin since the first retry uses a different replica with the same URL. By default, the agent sends completed chunks to the client as soon as they finish downloading, as long as all preceding chunks have also been sent. If the chunk at the head of the line has not completed downloading, no new data is sent to the client until the chunk completes. By using enough parallel chunk fetches, delays in downloading chunks can generally be overlapped with others in the pipeline. If clients that can use chunked transfer encoding provide a header in the request indicating they are capable of handling chunks in any order, the agent sends chunks as they complete, with no head-of-line blocking. Chunk position information is returned in a trailer following each chunk, which the client software can use to assemble the file in the correct order.

The choice of chunk size is a trade-off between efficiency and latency

- small chunks will result in faster chunk downloads, so slower

clients will have less impact. However, the small chunks require more

processing at all stages - the agent, the CDN infrastructure, and

possibly the origin server. Larger chunks, while more efficient, can

also cause more delay if head-of-line blocking arises. After some

testing, we chose a chunk size of 60KB, which is large enough to be

efficient, but small enough to be manageable. In particular, this

chunk size can easily fit into Linux's default outbound kernel socket

buffers, allowing the entire chunk to be written to the socket with a

single system call that returns without blocking.

3.4 Design BenefitsWe believe that this design has several important features that not only make it practical for deployment now, but will continue to make it useful in the future:

4 Coping With ScaleOne first challenge for CoBlitz was handling scale - at the time of CoBlitz's original deployment, CoDeeN was running on all 100 academic PlanetLab node in North America. The first major scale issue was roughly quadrupling the node count, to include every PlanetLab node. In the process, we adopted three design decisions that have served us well: (a) make peering a unilateral, asynchronous decision, (b) use minimum application-level ping times when determining suitable peers, and (c) apply hysteresis to the peer set. These are described in the remainder of this section. 4.1 Unilateral, Asynchronous Peering

In CoDeeN, we have intentionally avoided any synchronized communication for group maintenance, which results in avoiding any quorum protocols, 2-phase behavior, or any group membership protocols. The motivations behind this decision were simplicity and robustness - by making every decision unilaterally and independently at each node, we avoid any situation where forward progress fails because some handshaking protocol fails. As a result, CoDeeN has been operational even in some very extreme circumstances, such as in February 2005, when a kernel bug caused the sudden, near-simultaneous failures of nodes, with more than half of all PlanetLab nodes freezing. One side-effect of asynchronous communication is that all peering is unilateral - nodes independently pick their peers, using periodic heartbeats and acknowledgments to judge peer health. Pairwise heartbeats are simple, robust, and particularly useful for testing reachability. More sophisticated techniques, such as aggregating node health information using trees, can reduce the number of heartbeats, but can lead to worse information, since the tree may miss or use different links than those used for pairwise communication.

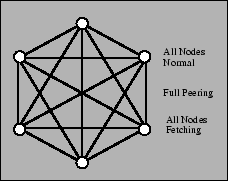

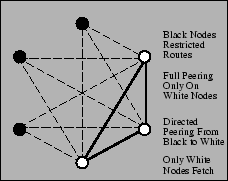

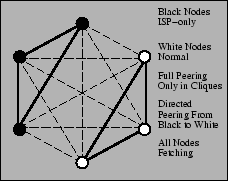

Unilateral and unidirectional peering improves CoBlitz's scalability,

since it allows nodes with heterogeneous connectivity or policy issues

to participate to the extent possible. These scenarios are shown in

Figures 5, 6,

and 7. For example, research networks like

Internet2 or CANARIE (the Canadian high-speed network) do not peer

with the commercial Internet, but are reachable from a number of

research sites including universities and corporate labs. These nodes

advertise that they do not want any nodes (including each other) using

them as peers, since they cannot fetch content from the commercial

Internet. These sites can unidirectionally peer with any CoDeeN nodes

they can reach - regular CoDeeN nodes do not reciprocate, since the

restricted nodes cannot fetch arbitrary Web content. Also, in certain

PlanetLab locations, both corporate and regional, political/policy

considerations make the transit of arbitrary content an unwise idea,

but the area may have a sizable number of nodes. These nodes

advertise that only other nodes from the same organization can use

them as peers. These nodes will peer both with each other and with

unrestricted nodes, giving them more peers for CoBlitz transfers than

they would have available otherwise. Policy restrictions are not

PlanetLab-specific - ISPs host commercial CDN nodes in their network

with the restriction that the CDN nodes only serve their own

customers.

4.2 Peer Set SelectionWith the worldwide deployment of CoDeeN, some step had to be taken to restrict the set of CoDeeN nodes that each node would use as peers. Each CoDeeN node sends one heartbeat per second to another node, so at 600 To get some indication of application health, CoDeeN uses application-level pings, rather than network pings, to determine round trip times (RTTs). Originally, CoDeeN kept the average of the four most recent RTT values, and selected the 60 closest peers within a 100ms RTT cutoff. The 100ms cutoff was to reduce noticeable lag in interactive settings, such as Web browsing. In parts of the world where nodes could not find 20 peers within 100ms, this cutoff is raised to 200ms and the 20 best peers are selected. This approach exhibited two problems - a high rate of change in the peer sets, and low overlap among peer sets for nearby peers. The high change rate potentially impacts chunk caching in CoBlitz - if the peer that previously fetched a chunk is no longer in the peer set, the new peer that replaces it may not yet have fetched the chunk. To address this issue, hysteresis was added to the peer set selection process. Any peer not on the set could only replace a peer on the set if it was closer in two-thirds of the last 32 heartbeats. Even under the worst-case conditions, using the two-thirds threshold would keep a peer on the set for 10 minutes at a time. While hysteresis reduced peer set churn, it also reinforced the low overlap between neighboring peer sets. Further investigation indicated that CoDeeN's application-level heartbeats had more than an order of magnitude variance than network pings. This variance led to instability in the average RTT calculations, so once nodes were added to the peer set, they rarely got displaced. Switching from an average application-level RTT to the minimum observed RTT (an approach also used in other systems (6,13,22)) and increasing the number of samples yielded significant improvement, with application-level RTTs correlating well with ping time on all functioning nodes. Misbehaving nodes still showed large application-level minimum RTTs, despite having low ping times. The overlap of peer lists for nodes at the same site increased from roughly half to almost 90%. At the same time, we discovered that many intra-PlanetLab paths had very low latency, and restricting the peer size to 60 was needlessly constrained. We increased this limit to 120 nodes, and issued 2 heartbeats per second. Of the nodes regularly running CoDeeN, two-thirds tend to now have 100+ peers. More details of the redesign process and its corresponding performance improvement can be found in our previous study (5).

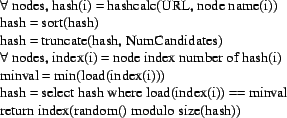

4.3 Scaling LargerIt is interesting to consider whether this approach could scale to a much larger system, such as a commercial CDN like Akamai. By the numbers, Akamai is about 40 times as large as our deployment, at 15,000 servers across 1,100 networks. However, part of what makes scaling to this size simpler is deploying clusters at each network point-of-presence (POP), which number only 2,500. Further, their servers have the ability to issue reverse ARPs and assume the IP addresses of failing nodes in the cluster, something not permitted on PlanetLab. With this ability, the algorithms need only scale to the number of POPs, since the health of a POP can be used instead of querying the status of each server. Finally, by imposing geographic hierarchy and ISP-level restrictions, the problem size is further reduced. With these assumptions, we believe that we can scale to larger sizes without significant problems. 5 Reducing Load & CongestionReducing origin server load and reducing CDN-wide congestion are related, so we present them together in this section. Origin load is an important metric for CoBlitz, because it determines CoBlitz's caching benefit and impacts the system's overall performance. From a content provider's standpoint, CoBlitz would fetch only a single copy of the content, no matter what the demand. However, for reasons described below, this goal may not be practical.5.1 The HRW AlgorithmCoDeeN uses the Highest Random Weight (HRW) (29) algorithm to route requests from clients. This algorithm is functionally similar to Consistent Hashing (17), but has some properties that make it attractive when object replication is desired (31). The algorithm used in CoDeeN, Replicated HRW with Load Balancing, is shown in Figure 8.

For each URL, CoDeeN generates an array of values by hashing the URL

with the name of each node in the peer set. It then prunes this list

based on the replication factor specified, and then prunes it again so

only the nodes with the lowest load values remain. The final candidate

is chosen randomly from this set. Using replication and load balancing

reduces hot spots in the CDN - raising the replication factor reduces

the chance any node gets a large number of requests, but also

increases the node's working set, possibly degrading performance.

5.2 Increasing Peer Set SizeIncreasing the peer set size, as described in Section 4.2 has two effects - each node appears as a peer of many more nodes than before, and the number of nodes chosen to serve a particular URL is reduced. In the extreme, if all CDN nodes were in each others' peer sets, then the total number of nodes handling any URL would equal to NumCandidates. In practice, the peer sets give rise to overlapping regions, so the number of nodes serving a particular URL is tied to the product of the number of regions and NumCandidates. When examining origin server load in CoBlitz, we found that nodes with fewer than five peers generate almost one-third of the traffic. Some poorly-connected sites have such high latency that even with an expanded RTT criterion, they find few peers. At the same time, few sites use them as peers, leading to them being an isolated cluster. For regular Web CDN traffic, these small clusters are not much of an issue, but for large-file traffic, the extra load these clusters cause on the origin server slows the rest of the CDN significantly. Increasing the minimum number of peers per node to 60 reduces traffic to the origin. Because of unilateral peering, this change does not harm nearby nodes - other nodes still avoid these poorly-connected nodes. Reducing the number of replicas per URL reduces origin server load, since fewer nodes fetch copies from the origin, but it also causes more bursty traffic at those replicas if downloading is synchronized. For CoBlitz, synchronized downloads occur when developers push software updates to all nodes, or when cron-initiated tasks simultaneously fetch the same file. In these cases, demand at any node experiences high burstiness over short time scales, which leads to congestion in the CDN. 5.3 Fixing Peer Set DifferencesOnce other problems are addressed, differences in peer sets can also cause a substantial load on the origin server. To understand how this arises, imagine a CDN of 60 nodes, where each node does not see one peer at random. If we ask all nodes for the top candidate in the HRW list for a given URL, at least one node is likely to return the candidate that would have been the second-best choice elsewhere. If we ask for the top k candidates, the set will exceed k candidates with very high probability. If each node is missing two peers at random, the union of the sets is likely to be at least k+2. Making the matter worse is that these ``extra'' nodes fetching from the origin also provide very low utility to the rest of the nodes - since few nodes are using them to fetch the chunk, they do not reduce the traffic at the other replicas.To fix this problem, we observe that when a node receives a forwarded request, it can independently check to see whether it should be the node responsible for serving that request. On every forwarded request that is not satisfied from the cache, the receiving node performs its own HRW calculation. If it finds itself as one of the top candidates, it considers the forwarded request reasonable and fetches it from the origin server. If the receiver finds that it is not one of the top candidates, it forwards the request again. We find that 3-7% of chunks get re-forwarded this way in CoBlitz, but it can get as high as 10-15% in some cases. When all PlanetLab nodes act as clients, this technique cuts origin server load almost in half.

Due to the deterministic order of HRW, this approach is guaranteed to

make forward progress and be loop-free. While the worst case is a

number of hops linear in the number of peer groups, this case is also

exponentially unlikely. Even so, we limit this approach to only one

additional hop in the redirection, to avoid forwarding requests across

the world and to limit any damage caused by bugs in the forwarding

logic. Given the relatively low rate of chunks forwarded in this

manner, restricting it to only one additional hop appears sufficient.

5.4 Reducing BurstinessTo illustrate the burstiness resulting from improved peering, consider a fully-connected clique of 120 CDN nodes that begin fetching a large file simultaneously. If all have the same peer set, then each node in the replica set k will receive 120/k requests, each for a 60KB chunk. Assuming 2 replicas, the traffic demand on each is 28.8 Mbits. Assuming a 10 Mbps link, it will be fully utilized for 3 seconds just for this chunk, and then the utilization will drop until the next burst of chunks. The simplest way of reducing the short time-scale node congestion is to increase the number of replicas for each chunk, but this would increase the number of fetches to the origin. Instead, we can improve on the purely mesh-based topology by taking some elements of the stream-oriented systems, which are excellent for reducing link stress. These systems all build communication trees, which eliminates the need to have the same data traverse a link multiple times. While trees are an unattractive option for standard Web CDNs because they add extra latency to every request fetched from the origin, a hybrid scheme can help the large-file case, if the extra hops can reduce congestion. We take the re-forwarding support to forward misdirected chunks, and use it to create broader routing trees in the peer sets. We change the re-forwarding logic to use a different number of replicas when calculating the HRW set, leading to a broad replica set and a smaller set of nodes that fetch from the origin. We set the NumCandidates value to 1 when evaluating the re-forwarding logic, while dynamically selecting the value at the first proxy. The larger replica set at the first hop reduces the burstiness at any node without increasing origin load. To dynamically select the number of replicas, we observe that we can eliminate burstiness by spreading the requests equally across the peers at all times. With a target per-client memory consumption, we can determine how many chunks are issued in parallel. So, the replication factor is governed by the following equation:

At 1 MB of buffer space per client, a 60KB chunk size, and 120 peers,

our replication factor will be 7. We can, of course, cap the number of

peers at some reasonable fraction of the maximum number of peers so

that memory pressure does not cause runaway replication for the sake

of load balancing. In practice, we limit the replication factor to

20% of the minimum target peer set, which yields a maximum factor of

12.

5.5 Dynamic Window ScalingAlthough parallel chunk downloads can exploit multi-path bandwidth and reduce the effect of slow transfers, using a fixed number of parallel chunks also has some congestion-related drawbacks which we address. When the content is not cached, the origin server may receive more simultaneous requests than it can handle if each client is using a large number of parallel chunks. For example, the Apache Web Server is configured by default to allow 150 simultaneous connection, and some sites may not have changed this value. If a CDN node has limited bandwidth to the rest of the CDN, too many parallel fetches can cause self-congestion, possibly underutilizing bandwidth, and slowing down the time of all fetches. The problem in this scenario is that too many slow chunks will cause more retries than needed.

In either of these scenarios, using a smaller number of simultaneous fetches would be beneficial, since the per-chunk download time would improve. We view finding the ``right'' number of parallel chunks as a congestion issue, and address it in a manner similar to how TCP performs congestion control. Note that changing the number of parallel chunks is not an attempt to perform low-level TCP congestion control - since the fetches are themselves using TCP, we have this benefit already. Moreover, since the underlying TCP transport is already using additive-increase multiplicative-decrease, we can choose whatever scheme we desire on top of it. Drawing on TCP Vegas (6), we use the extra information we have in the CoBlitz agent to make the chunk ``congestion window'' a little more stable than a simple sawtooth. We use three criteria: (1) if the chunk finishes in less than the average time, increase the window, (2) if the first fetch attempt is killed by retries, shrink the window, and (3) otherwise, leave the window size unmodified. We also decide that if more chunk fetches are in progress than the window size dictates, existing fetches are allowed to continue, but no new fetches (including retries) are allowed. Given that our condition for increasing the window is already conservative, we give ourselves some flexibility on exactly how much to add. Similarly, given that the reason for requiring a retry might be that any peer is slow, we decide against using multiplicative decrease when a chunk misses the deadline. While determining the decrease rate is fairly easy, choosing a reasonable increase rate required some experimentation. The decrease rate was chosen to be one full chunk for each failed chunk, which would have the effect of closing the congestion window very quickly if all of the chunks outstanding were to retry. This logic is less severe than multiplicative decrease if only a small number of chunks miss their deadlines, but can shrink the window to a single chunk within one ``RTT'' (in this case, average chunk download time) in the case of many failures.

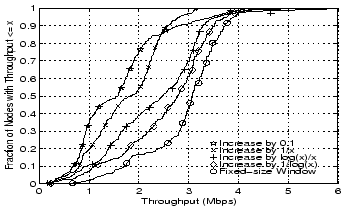

Some experimentation with different increase rates is shown in

Figure 9. The purely additive condition,

6 EvaluationIn this section, we evaluate the performance of CoBlitz, both in various scenarios, and in comparison with BitTorrent (12). We use BitTorrent because of its wide use in large-file transfer (7), and because other research systems, such as Slurpie, Bullet' and Shark (2,26,19), are not running (or in some cases, available) at the time of this writing. As many of these have been evaluated on PlanetLab, we draw some performance and behavior comparisons in Section 7. One unique aspect of our testing is the scale - we use every running PlanetLab node except those at Princeton, those designated as alpha testing nodes, and those behind firewalls that prevent CoDeeN traffic. The reason for excluding the Princeton nodes is because we place our origin server at Princeton, so the local PlanetLab nodes would exhibit unrealistically large throughputs and skew the means. During our testing in September and early October 2005, the number of available nodes that met the criteria above ranged from 360-380 at any given time, with a union size of 400 nodes. Our test environment consists of a server with an AMD Sempron processor running at 1.5 GHz, with Linux 2.6.9 as its operating system and lighttpd 1.4.4 (18) as our web server. Our testing consists of downloading a 50MB file in various scenarios. The choice of this file size was to facilitate comparisons with other work (2,19), which uses file sizes of 40-50MB in their testing. Our testing using a 630MB ISO image for the Fedora Core 4 download yielded slightly higher performance, but would complicate comparisons with other systems. Given that some PlanetLab nodes are in parts of the world with limited bandwidth, our use of 50MB files also reduces contention problems for them. Each test is run three times, and the reported numbers are the average value across the tests for which the node was available. Due to the dynamics of PlanetLab, over any long period of time, the set of available nodes will change, and given the span of our testing, this churn is unavoidable. We tune BitTorrent for performance - the clients and the server are configured to seed the peers indefinitely, and the maximum number of peers is increased to 60. While live BitTorrent usage will have lower performance due to fewer peers and peers departing after downloading, we want the maximum BitTorrent performance. We test a number of scenarios, as follows:

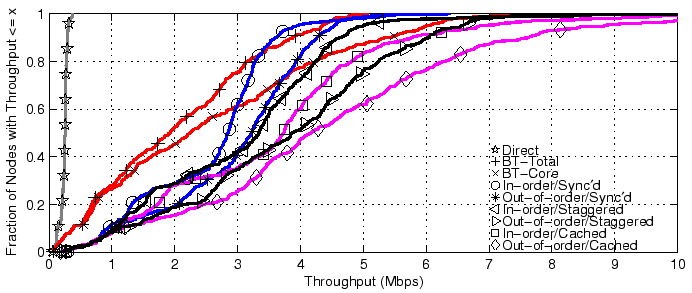

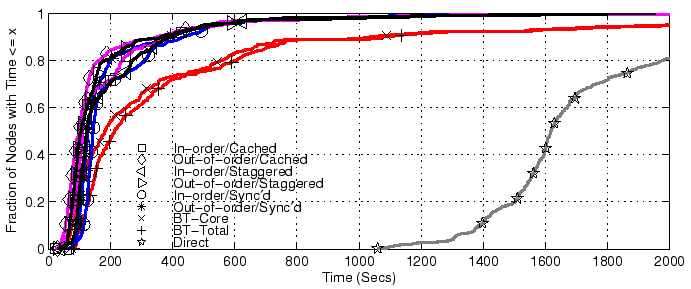

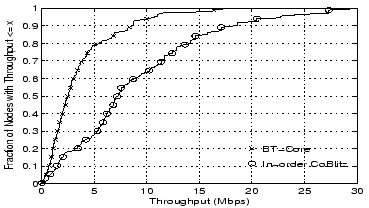

6.1 Overall PerformanceThe throughputs and download times for all tests are shown in Figure 10 and Figure 11, with summaries presented in Table 1. For clarity, we trim the x axes of both graphs, and the CDFs shown are of all nodes completing the tests. The actual number of nodes finishing each test are shown in the table. In the throughput graph, lines to the right are more desirable, while in the download time graph, lines to the left are more desirable.

From the graphs, we can see several general trends: all schemes beat direct downloading, uncached CoBlitz generally beats BitTorrent, out-of-order CoBlitz beats in-order delivery, staggered downloading beats synchronized delivery, and cached delivery, even when synchronized, beats the others. Direct downloading at this scale is particularly problematic - we had to abruptly shut down this test because it was consuming most of Princeton's bandwidth and causing noticeable performance degradation. The worst-case performance for CoBlitz occurs for the uncached case where all clients request the content at exactly the same time and more load is placed on the origin server at once. This case is also very unlikely for regular users, since even a few seconds of difference in start times defeats this problem. The fairest comparison between BitTorrent and CoBlitz is BT-Total versus CoBlitz out-of-order with Staggering, in which case CoBlitz beats BitTorrent by 55-86% in throughput and factor of 1.7 to 4.94 in download time. Even the worst-case performance for CoBlitz, when all clients are synchronized on uncached content, generally beats BitTorrent by 27-48% in throughput and a factor of 1.47 to 2.48 in download time.

In assessing how well CoBlitz compares against BitTorrent, it is

interesting to examine the 90

It may be argued that worst-case times are important for systems that

need to know an update has been propagated to its members, but if this

is an issue, more important than delay is failure to complete. In

Table 1, we show the number of nodes that finish

each test, and these vary considerably despite the fact that the same

set of machines is being used. Of the approximately 400 machines

available across the union of all tests, only about 5-12 nodes fail to

complete using CoBlitz, while roughly 17-18 fail in direct testing,

and about 21-25 fail with BitTorrent. The 5-12 nodes where CoBlitz

eventually stops trying to download are at PlanetLab sites with

highly-congested links, poor bandwidth, and other problems - India,

Australia, and some Switzerland nodes.

6.2 Load at the Origin

Another metric of interest is how much traffic reaches the origin server in these different tests, and this information is provided in Table 2, shown as a multiple of the file size. We see that the CoBlitz scenarios fetch a total of 7 to 9 copies in the various tests, which yields a utility of 43-55 nodes served per fetch (or a cache hit rate of 97.6 - 98.2%). BitTorrent has comparable overall load on the origin, at 10 copies, but has a lower utility value, 35, since it has fewer nodes complete. For Shark, the authors observed it downloading 24 copies from the origin to serve 185 nodes, yielding a utility of 7.7. We believe that part of the difference may stem from peering policy - CoDeeN's unilateral peering approach allows poorly-connected nodes to benefit from existing clusters, while Coral's latency-oriented clustering may adversely impact the number of fetches needed.

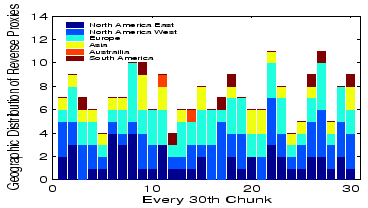

A closer examination of fetches per chunk, shown in

Figure 12, shows that CoBlitz's average of 8

copies varies from 4-11 copies by chunk, and these copies appear to be

spread fairly evenly geographically. The chunks that receive only 4

fetches are particularly interesting, because they suggest it may be

possible to cut CoBlitz's origin load by another factor of 2. We are

investigating whether these chunks happen to be served by nodes that

overlap with many peer sets, which would further validate CoBlitz's

unilateral peering.

6.3 Performance after Flash CrowdsFinally, we evaluate the performance of CoBlitz after a flash crowd, where the CDN nodes can still have the file cached. This was one of motivations for building CoBlitz on top of CoDeeN - that by using an infrastructure geared toward long-duration caching, we could serve the object quickly even after demand for it drops. This test is shown in Figure 13, where clients at all PlanetLab nodes try downloading the file individually, with no two operating simultaneously. We see that performance is still good after the flash crowd has dissipated - the median for this in-order test is above 7 Mbps, almost tripling the median for in-order uncached and doubling the median of in-order cached. At this bitrate, clients can watch DVD-quality video in real time. We include BitTorrent only for comparison purposes, and we see that its median has only marginally improved in this scenario.

6.4 Real-world UsageOne of our main motivations when developing CoBlitz was to build a system that could be used in production, and that could operate with relatively little monitoring. These decisions have led us not only to use simpler, more robust algorithms where possible, but also to restrict the content that we serve. To keep the system usage focused on large-file transfer with a technical focus, and to prevent general-purpose bandwidth cost-shifting, we have placed restrictions on what the general public can serve using CoBlitz. Unless the original file is hosted at a university, CoBlitz will not serve HTML files, most graphics types, and most audio/video formats. As a result of these policies, we have not received any complaints related to the content served by CoBlitz, which has simplified our operational overhead.

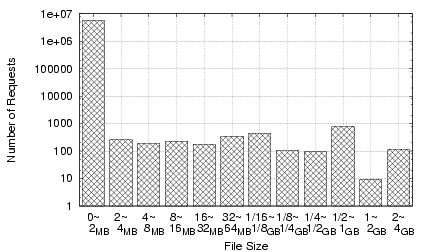

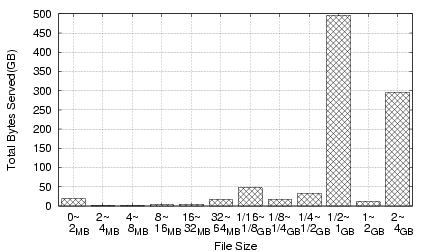

To get a sense of a typical month's CoBlitz usage, we present the breakdown for February 2006 traffic in Figures 14 (by number of requests) and 15 (by bytes served). Most of the requests for files less than 2MB come from the Stork service (28), which provides package management on PlanetLab, and the CiteSeer Digital Library (11), which provides document downloads via CoBlitz. The two spikes in bytes served are from the Fedora Core Linux distribution, available as either downloadable CD images or DVD images. Most of the remaining traffic comes from smaller sites, other PlanetLab users, and Fedora Core RPM downloads.

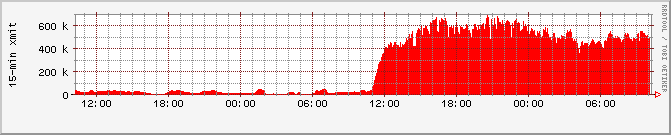

A more unusual usage pattern occurred on March 20, 2006, when the

Fedora Core 5 Linux distribution was released. Within minutes of the

official announcement on the Fedora mailing lists, the availability

was mentioned on the front page of Slashdot (27), on a

Monday morning for the US. The measurements from this day and the

previous day are shown in Figure 16. In less than an

hour, CoBlitz went from an average of 20Mbps of traffic to over 400

Mbps, and sustained 5-minute peaks exceeded 700Mbps. CoBlitz

functioned as expected, with one exception - many of the clients were

using ``download agents'' that fetch files using a ``no-cache'' HTTP

header. CoBlitz had been honoring these requests for PlanetLab

researchers who wanted to force refreshes, and we had not seen a

problem in other environments. However, for this download, these

headers were causing unnecessary fetches to the origin that were

impacting performance. We made a policy decision to disregard these

headers for the Fedora Mirror sites, at which point origin traffic

dropped dramatically. This flash crowd had a relatively long tail -

it average 200-250Mbps on the third day, and only dropped to less than

100Mbps on the fifth day, a weekend. The memory footprint of CoBlitz

was also low - even serving the CD and DVD images on several

platforms (PPC, i386, x86_64), the average memory consumption was

only 75MB per node.

7 Related WorkSeveral projects that perform large file transfer have been measured on PlanetLab, with the most closely related ones being Bullet' (19), and Shark (2), which is built on Coral (15). Though neither system is currently accessible to the public, both have been evaluated recently. Bullet', which operates out-of-order and uses UDP, is reported to achieve 7 Mbps when run on 41 PlanetLab hosts at different sites. In testing under similar conditions, CoBlitz achieves 7.4 Mbps (uncached) and 10.6 Mbps (cached) on average. We could potentially achieve even higher results by using a UDP-based transport protocol, but our experience suggests that UDP traffic causes more problems, both from intrusion detection systems as well as stateful firewalls. Shark's performance for transferring a 40MB file across 185 PlanetLab nodes shows a median throughput of 0.96 Mbps. As discussed earlier, Shark serves an average of only 7.7 nodes per fetch, which suggests that their performance may improve if they use techniques similar to ours to reduce origin server load. The results for all of these systems are shown in Table 3. The missing data for Bullet' and Shark reflect the lack of information in the publications, or difficulty extracting the data from the provided graphs. The use of parallel downloads to fetch a file has been explored before, but in a more narrow context - Rodriguez et al. use HTTP byte-range queries to simultaneously download chunks in parallel from different mirror sites (23). Their primary goal was to improve single client downloading performance, and the full file is pre-populated on all of their mirrors. What distinguishes CoBlitz from this earlier work is that we make no assumptions about the existence of the file on peers, and we focus on maintaining stability of the system even when a large number of nodes are trying to download simultaneously. CoBlitz works if the chunks are fully cached, partially cached, or not at all cached, fetching any missing chunks from the origin as needed. In the event that many chunks need to be fetched from the origin, CoBlitz attempts to reduce origin server overload. Finally, from a performance standpoint, CoBlitz attempts to optimize the memory cache hit rate for chunks, something not considered in Rodriguez's system.

While comparing with other work is difficult due to the difference in test environment, we can make some informed conjecture based on our experiences. FastReplica's evaluation includes tests of 4-8 clients, and their per-client throughput drops from 5.5 Mbps with 4 clients to 3.6 Mbps with 8 clients (9). Given that their file is broken into a small number of equal-sized pieces, the slowest node in the system is the overall bottleneck. By using a large number of small, fixed-size pieces, CoBlitz can mitigate the effects of slow nodes, either by increasing the number of parallel fetches, or by retrying chunks that are too slow. Another system, Slurpie, limits the number of clients that can access the system at once by having each one randomly back off such that only a small number are contacting the server regardless of the number of nodes that want service. Their local-area testing has clients contact the server at the rate of one every three seconds, which staggers it far more than BitTorrent. Slurpie's evaluation on PlanetLab provides no absolute performance numbers (26), making it difficult to draw comparisons. However, their performance appears to degrade beyond 16 nodes.

The scarcity of deployed systems for head-to-head

comparisons supports part of our motivation - by reusing CDN

infrastructure, we have been able to easily deploy CoBlitz and keep

it running.

8 ConclusionWe show that, with a relatively small amount of modification, a traditional, HTTP-based content distribution network can be made to efficiently support scalable large-file transfer. Even with no modifications to clients, servers, or client-side software, our approach provides good performance under demanding conditions, but can provide even higher performance if clients implement a relatively simple HTTP feature, chunked encoding. Additionally, we show how we have taken the experience gained from 18 months of CoBlitz deployment, and used it to adapt our algorithms to be more aware of real-world conditions. We demonstrate the advantages provided by this approach by evaluating CoBlitz's performance across all of PlanetLab, where it exceeds the performance of BitTorrent as well as all other research efforts known to us. In the process of making CoBlitz handle scale and reduce congestion both within the CDN and at the origin server, we identify a number of techniques and observations that we believe can be applied to other systems of this type. Among them are: (a) using unilateral peering, which simplifies communication as well as enabling the inclusion of policy-limited or poorly-connected nodes, (b) using request re-forwarding to reduce the origin server load when nodes send requests to an overly-broad replica set, (c) dynamically adjusting replica sets to reduce burstiness in short time scales, (d) congestion-controlled parallel chunk fetching, to reduce both origin server load as well as self-interference at slower CDN nodes. We believe that the lessons we have learned from CoBlitz should help not only the designers of future systems, but also provide a better understanding of how to design these kinds of algorithms to reflect the unpredictable behavior we have seen in real deployment. AcknowledgmentsWe would like to thank our shepherd, Neil Spring, and the anonymous reviewers for their useful feedback on the paper. This work was supported in part by NSF Grants ANI-0335214, CNS-0439842, and CNS-0520053.

Bibliography

About this document ...Scale and Performance in the CoBlitz Large-File Distribution Service This document was generated using the LaTeX2HTML translator Version 2002-1 (1.69)

Copyright © 1993, 1994, 1995, 1996,

Nikos Drakos,

Computer Based Learning Unit, University of Leeds.

The command line arguments were: The translation was initiated by KyoungSoo Park on 2006-03-28 KyoungSoo Park 2006-03-28 |

|||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

|

This paper was originally published in the Proceedings of the

3rd Symposium on Networked Systems Design and Implementation (NSDI '06) May 8–10, 2006, San Jose, CA Last changed: 3 May 2006 jel |