
This section presents data for PCP and TCP transfers over the Internet between twenty North American nodes selected from the RON testbed [3].

We implemented the PCP protocol in a user-level process. This is a conservative measure of PCP's effectiveness, since timestamps and packet pacing are less accurate when done outside the operating system kernel. To enable an apples-to-apples comparison, we also implemented TCP-SACK in the same user-level process. With TCP-SACK, selective acknowledgments give the sender a complete picture of which segments have arrived at the receiver. The sender performs a fast retransmit whenever it receives three duplicate acknowledgments and then enters fast recovery. Our TCP implementation uses delayed acknowledgments: the receiver acknowledges every other packet, and acknowledges a lone packet once a 200 ms timer expires. As in most other implementations [38], acknowledgments are sent immediately for the very first data packet (to avoid waiting for the delayed-ACK timeout when the congestion window is only one packet) and for all packets received out of order. Removing delayed ACKs would improve TCP response time, but potentially at the cost of higher packet loss rates. It would make TCP's slow start phase more aggressive and cause it to overshoot the available network resources by a wider margin. We used RON to validate that our user-level implementation of TCP yields results similar to native kernel TCP transfers on our measured paths.
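The receiver-side acknowledgment policy described above can be sketched as a small state machine. This is a minimal sketch, not the authors' implementation. It assumes segments are numbered consecutively from zero and that the caller supplies timestamps in seconds. The class and method names (`DelayedAckReceiver`, `on_segment`, `on_tick`) are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Optional, Set

DELAYED_ACK_TIMEOUT = 0.200  # seconds; the 200 ms interval from the text


@dataclass
class DelayedAckReceiver:
    """Hypothetical receiver that decides when to emit a (cumulative) ACK."""
    next_expected: int = 0                  # next in-order segment number
    pending: int = 0                        # in-order segments not yet ACKed
    deadline: Optional[float] = None        # when the delayed-ACK timer fires
    buffered: Set[int] = field(default_factory=set)  # out-of-order segments held
    first_seen: bool = False

    def on_segment(self, seq: int, now: float) -> bool:
        """Process an arriving segment; return True to send an ACK now."""
        if seq != self.next_expected:
            # Out-of-order (or old duplicate) segment: ACK immediately.
            if seq > self.next_expected:
                self.buffered.add(seq)
            return self._ack_now()
        # In-order: advance past any buffered segments that are now contiguous.
        self.next_expected += 1
        while self.next_expected in self.buffered:
            self.buffered.remove(self.next_expected)
            self.next_expected += 1
        if not self.first_seen:
            # Very first data packet: ACK at once so a sender whose congestion
            # window is one packet does not stall on the delayed-ACK timer.
            self.first_seen = True
            return self._ack_now()
        self.pending += 1
        if self.pending >= 2:
            return self._ack_now()          # acknowledge every other packet
        self.deadline = now + DELAYED_ACK_TIMEOUT
        return False

    def on_tick(self, now: float) -> bool:
        """Return True if the delayed-ACK timer has expired with data pending."""
        if self.deadline is not None and now >= self.deadline and self.pending:
            return self._ack_now()
        return False

    def _ack_now(self) -> bool:
        # A cumulative ACK covers everything received in order so far.
        self.pending = 0
        self.deadline = None
        return True
```

For example, segments 0, 1, 2 arriving back to back produce ACKs after 0 (first packet) and 2 (second of a pair). A later segment 4 arriving before 3 is acknowledged immediately. RFC 5681 also recommends an immediate ACK when a segment fills a gap, which the text does not mention; this sketch leaves it out, so segment 3 here waits for the timer.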


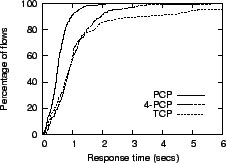

For each ordered pair of the twenty RON nodes (that is, for each pair in each direction), we ran three experiments: a single PCP transfer, a single TCP-SACK transfer, and four parallel PCP transfers. Each transfer was 250KB, and each experiment was repeated one hundred times and the results averaged. Figure 2 presents the cumulative distribution function of the transfer times for these 380 (20 × 19) paths. PCP outperforms TCP in the common case because of its better startup behavior. The average PCP response time is 0.52 seconds, versus 1.33 seconds for TCP. To put this in perspective, four parallel 250KB PCP transfers complete, on average, in roughly the same time as a single 250KB TCP transfer. The tail is also much worse for TCP: while all PCP transfers complete within 2 seconds, over 10% of TCP transfers take longer than 5 seconds. This gap could be explained by PCP being too aggressive toward competing TCP traffic, but the next graph shows that this is not the case. Rather, on congested links, TCP's steady-state behavior is easily disrupted by background packet losses induced by shorter flows entering and exiting slow start.
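The bookkeeping behind Figure 2 can be sketched in a few lines. The sketch assumes that each point in the CDF is one path's average over its repeated transfers. The helper names (`ordered_paths`, `per_path_means`, `ecdf`) are hypothetical, and the list passed to `ecdf` in the usage section is a toy input for illustration, not measured data.

```python
from bisect import bisect_right
from itertools import permutations
from statistics import mean


def ordered_paths(nodes):
    """All (src, dst) pairs with src != dst; each direction is its own path."""
    return list(permutations(nodes, 2))


def per_path_means(samples_by_path):
    """Average the repeated transfer times measured on each path."""
    return {path: mean(times) for path, times in samples_by_path.items()}


def ecdf(values):
    """Return F with F(x) = fraction of values that are <= x."""
    xs = sorted(values)
    return lambda x: bisect_right(xs, x) / len(xs)


if __name__ == "__main__":
    print(len(ordered_paths(range(20))))   # 380 paths for 20 nodes
    print(round(1.33 / 0.52, 2))            # mean TCP/PCP time ratio: 2.56
    # Toy per-path averages (illustrative only, not measured data):
    tcp_cdf = ecdf([0.5, 1.0, 6.0, 7.0])
    print(1 - tcp_cdf(5.0))                 # fraction of paths slower than 5 s: 0.5
```

Reading the tail as `1 - F(5.0)` is how a statement such as "over 10% of TCP transfers take longer than 5 seconds" maps onto a CDF plot.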

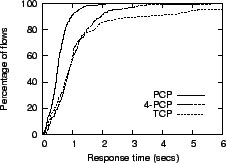

In Figure 3, we examine the behavior of PCP and TCP as a function of flow size for a single pair of nodes. TCP's behavior is dominated by slow start for small transfers and by steady-state behavior for large transfers. Because this path has a significant background loss rate caused by other TCP flows, TCP cannot exceed 5Mb/s in steady state. By contrast, PCP transfers large files at over 15Mb/s without increasing the background loss rate seen by TCP flows. To show this, we ran four parallel PCP transfers simultaneously with the TCP transfer; the TCP transfer was unaffected by the PCP traffic. In other words, on this path a significant background loss rate limits TCP performance even though the congested link has room for substantial additional bandwidth.
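The two TCP regimes can be made concrete with a deliberately simple model of our own, which is not part of PCP or of the measurements above. It assumes loss-free slow start that doubles the window every round trip until the window reaches the average steady-state window. After that point, throughput follows the Mathis et al. approximation (MSS/RTT)·sqrt(3/(2p)) for loss rate p. All function names and parameter values are hypothetical, and the model ignores delayed ACKs, timeouts, and receiver windows.

```python
import math


def steady_state_window(loss_rate):
    """Average congestion window (segments) under periodic loss: sqrt(3/(2p))."""
    return math.sqrt(1.5 / loss_rate)


def steady_state_rate_bps(mss_bytes, rtt_s, loss_rate):
    """Mathis et al. approximation: (MSS / RTT) * sqrt(3 / (2p))."""
    return steady_state_window(loss_rate) * mss_bytes * 8 / rtt_s


def tcp_transfer_time_s(size_bytes, mss_bytes, rtt_s, loss_rate):
    """Toy two-phase model: loss-free slow start (window doubles each RTT)
    until the window reaches the steady-state window, then the loss-limited rate."""
    w_ss = steady_state_window(loss_rate)
    segments_left = math.ceil(size_bytes / mss_bytes)
    cwnd, elapsed = 1, 0.0
    while segments_left > 0 and cwnd < w_ss:
        segments_left -= min(segments_left, cwnd)  # one window per round trip
        elapsed += rtt_s
        cwnd *= 2
    if segments_left > 0:
        rate = steady_state_rate_bps(mss_bytes, rtt_s, loss_rate)
        elapsed += segments_left * mss_bytes * 8 / rate
    return elapsed


if __name__ == "__main__":
    # Hypothetical path parameters: 1460-byte MSS, 100 ms RTT, 1% loss.
    for size in (10_000, 100_000, 1_000_000, 10_000_000):
        t = tcp_transfer_time_s(size, 1460, 0.1, 0.01)
        print(f"{size:>10} bytes: {t:7.2f} s, {size * 8 / t / 1e6:5.2f} Mb/s")
```

In this model, small transfers finish within a few round trips, so their duration is set by the RTT. Large transfers converge to the loss-limited rate, no matter how much spare capacity the link has. This matches the qualitative pattern described for TCP in Figure 3.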

Some researchers have suggested modifying TCP's additive increase rule to improve its performance on high-bandwidth paths. We believe such changes would have made little difference in our tests, since most of our paths have moderate bandwidth and most of our tests use moderate transfer sizes. Quantitatively evaluating these alternatives against PCP is future work.