C-Miner: Mining Block Correlations

in Storage Systems

Zhenmin Li, Zhifeng Chen, Sudarshan M.

Srinivasan and Yuanyuan Zhou

Department of Computer Science

University of Illinois at

Urbana-Champaign, Urbana, IL 61801

{zli4,zchen9,smsriniv,yyzhou}@cs.uiuc.edu

Abstract:

Block correlations are common semantic patterns in storage systems. These

correlations can be exploited for improving the effectiveness of storage

caching, prefetching, data layout and disk scheduling. Unfortunately,

information about block correlations is not available at the storage system

level. Previous approaches for discovering file correlations in file systems do

not scale well enough to be used for discovering block correlations in storage

systems. In this paper, we propose C-Miner, an algorithm which uses a

data mining technique called frequent sequence mining to discover block

correlations in storage systems. C-Miner runs reasonably fast with

feasible space requirement, indicating that it is a practical tool for

dynamically inferring correlations in a storage system. Moreover, we have also

evaluated the benefits of block correlation-directed prefetching and data layout

through experiments. Our results using real system workloads show that

correlation-directed prefetching and data layout can reduce average I/O response

time by 12-25% compared to the base case, and 7-20% compared to the commonly

used sequential prefetching scheme.

Introduction

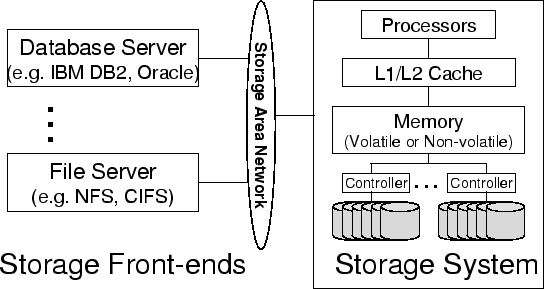

To satisfy the growing demand for storage, modern storage systems are becoming

increasingly intelligent. For example, the IBM Storage Tank system [29] consists of a cluster of storage nodes

connected using a storage area network. Each storage node includes processors,

memory and disk arrays. An EMC Symmetric server contains up to eighty 333

MHz microprocessors with up to 4-64 GB of memory as the storage cache [19]. Figure 1

gives an example architecture of modern storage systems. Many storage systems

also provide virtualization capabilities to hide disk layout and configurations

from storage clients [36,3]. Unfortunately, it is not an easy

task to exploit the increasing intelligence in storage systems. One primary

reason is the narrow I/O interface between storage applications and storage

systems. In such a simple interface, storage applications perform only block

read or write operations without any indication of access patterns or data

semantics. As a result, storage systems can only manage data at the block level

without knowing any semantic information such as the semantic correlations

between blocks. Therefore, much previous work had to rely on simple patterns

such as temporal locality, sequentiality, and loop references to improve storage

system performance, without fully exploiting its intelligence. This motivates a

more powerful analysis tool to discover more complex patterns, especially

semantic patterns, in storage systems.

Figure 1: Example of modern storage

architecture

|

Block correlations are common semantic patterns in storage systems. Many blocks

are correlated by semantics. For example, in a database that uses index trees

such as B-trees to speed up query performance, a tree node is correlated to its

parent node and its ancestor nodes. Similarly, in a file server-backend storage

system, a file block is correlated to its inode block. Correlated blocks tend to

be accessed relatively close to each other in an access stream. Exploring these

correlations is very useful for improving the effectiveness of storage caching,

prefetching, data layout and disk scheduling. For example, at each access, a

storage system can prefetch correlated blocks into its storage cache so that

subsequent accesses to these blocks do not need to access disks, which is

several orders of magnitude slower than accessing directly from a storage cache.

As self-managing systems are becoming ever so important, capturing block

correlations would enhance the storage system's knowledge about its workloads, a

necessary step toward providing self-tuning capability. Unfortunately,

information about block correlations are unavailable at a storage system because

a storage system exports only block interfaces. Since databases or file systems

are typically provided by vendors different from those of storage systems, it is

quite difficult and complex to extend the block I/O interface to allow upper

levels to inform a storage system about block correlations. Recently,

Arpaci-Dusseau et al. proposed a very interesting approach called

semantically-smart disk systems (SDS) [54] by using a ``gray-box'' technology to

infer data structure and categorize data in storage systems. However, this

approach requires probing in the front-end and assumes that the front-ends

conform to the FFS-like file system layout. An alternative approach is to infer

block correlations fully transparently inside a storage system by only observing

access sequences. This approach does not require any probing from a front-end

and also makes no assumption about the type of the front-ends. Therefore, this

approach is more general and can be applied to storage systems with any

front-end file systems or database servers. Semantic distances [34,35] and probability graphs [24,25] are such ``black-box''

approaches. They are quite useful in discovering file correlations in file

systems (see section 2.3 for more

details). This paper proposes C-Miner, a method which applies a data

mining technique called frequent sequence mining to discover block

correlations in storage systems. Specifically, we have modified a recently

proposed data mining algorithm called CloSpan [66] to find block correlations in

several storage traces collected in real systems. To the best of our knowledge,

C-Miner is the first approach to infer block correlations involving

multiple blocks. Furthermore, C-Miner is more scalable and

space-efficient than previous approaches. It runs reasonably fast with

reasonable space overhead, indicating that it is a practical tool for

dynamically inferring correlations in a storage system. Moreover, we have also

evaluated the benefits of block correlation-directed prefetching and disk data

layout using the real system workloads. Compared to the base case, this scheme

reduces the average response time by 12% to 25%. Compared to the sequential

prefetching scheme, it also reduces the average response time by 7% to 20%. The

paper is organized as follows. In the next section, we briefly describe block

correlations, the benefits of exploiting block correlations, and approaches to

discover block correlations. In section 3,

we present our data mining method to discover block correlations. Section 4

discusses how to take advantage of block correlations in the storage cache for

prefetching and disk layout. Section 5

presents our experimental results. Section 6

discusses the related work and section 7

concludes the paper.

Block Correlations

What are Block Correlations?

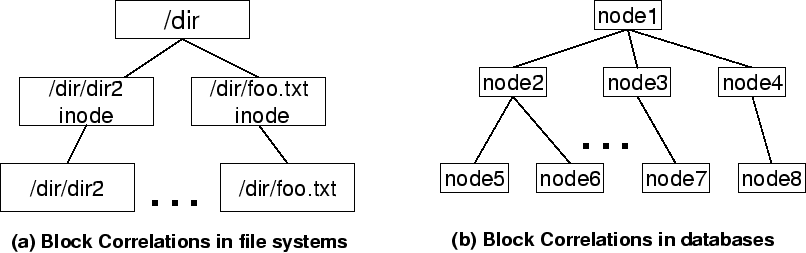

Block correlations commonly exist in storage systems. Two or more blocks are

correlated if they are ``linked'' together semantically. For example, Figure 2(a)

shows some block correlations in a storage system which manages data for an NFS

server. In this example, a directory block ``/dir'' is directly correlated to

the inode block of ``/dir/foo.txt'', which is also directly correlated to the

file block of ``/dir/foo.txt''. Besides direct correlations, blocks can also be

correlated indirectly through another block. For example, the directory block

``/dir'' is indirectly correlated to the file block of ``/dir/foo.txt''. Figure 2(b)

shows block correlations in a database-backend storage system. Databases

commonly use a tree structure such as B-tree or B*-tree to store data. In such a

data structure, a node is directly correlated to its parent and children, and

also indirectly correlated to its ancestor and descendant nodes.

Figure 2: Examples of block

correlations

|

Unlike other access patterns such as temporal locality, block correlations are

inherent in the data managed by a storage system. Access patterns such as

temporal locality or sequentiality depend on workloads and can therefore change

dynamically, whereas block correlations are relatively more stable and do not

depend on workloads, but rather on data semantics. When block semantics are

changed (for example, a block is reallocated to store other data), some block

correlations may be affected. In general, block semantics are more stable than

workloads, especially in systems that do not have very bursty deletion and

insertion operations that can significantly change block semantics. As we will

show in section 5.3, block correlations

can remain stable for several days in file systems. Correlated blocks are

usually accessed very close to each other. This is because most storage

front-ends (database servers or file servers) usually follow semantic ``links''

to access blocks. For example, an NFS server needs to access an inode block

before accessing a file block. Similarly, a database server first needs to

access a parent node before accessing its children. Due to the interleaving of

requests and transactions, these I/O requests may not be always consecutive in

the access stream received by a storage system. But they should be relatively

close within a short distance from each other. Spatial locality is a simple case

of block correlations. An access stream exhibits spatial locality if, after a

block is accessed, other blocks that are near it are likely to be accessed in

the near future. This is based on the observation that a block is usually

semantically correlated to its neighboring blocks. For example, if a file's

blocks are allocated in disks consecutively, these blocks are correlated to each

other. Therefore, in some workloads, these blocks are likely accessed one after

another. However, many correlations are more complex than spatial locality. For

example, in an NFS server, an inode block is usually allocated separately from

its file blocks and a directory block is allocated separately from the inode

blocks of the files in this directory. Therefore, although accesses to these

correlated blocks are close to each other in the access stream, they do not

exhibit good spatial locality because these blocks are far away from each other

in the disk layout and even on different disks. In some cases, a block

correlation may involve more than two blocks. For example, a three-block

correlation might be: if

both  and

and  are accessed recently,

are accessed recently,  is very likely to be accessed in a short period of time. Basically,

is very likely to be accessed in a short period of time. Basically,  and

and  are correlated to

are correlated to  , but

, but  or

or  alone may not be correlated to

alone may not be correlated to

. To give a real instance of this multi-block correlation, let us

consider a B* tree which also links all the leaf nodes together.

. To give a real instance of this multi-block correlation, let us

consider a B* tree which also links all the leaf nodes together.  ,

,

and

and  are all leaf nodes. If

are all leaf nodes. If  is accessed, the system cannot predict that

is accessed, the system cannot predict that  is going to be accessed soon. However, if

is going to be accessed soon. However, if  and

and  are accessed one after another, it is likely that

are accessed one after another, it is likely that  will be accessed soon because it is likely that the front-end is

doing a sequence scan of all the leaf nodes, which is very common in

decision-support system (DSS) workloads [7,68].

Block correlations can be exploited to improve storage system performance.

First, correlations can be used to direct prefetching. For example, if a strong

correlation exists between blocks

will be accessed soon because it is likely that the front-end is

doing a sequence scan of all the leaf nodes, which is very common in

decision-support system (DSS) workloads [7,68].

Block correlations can be exploited to improve storage system performance.

First, correlations can be used to direct prefetching. For example, if a strong

correlation exists between blocks  and

and

, these two blocks can be fetched together from disks whenever one of

them is accessed. The disk read-ahead optimization is an example of exploiting

the simple sequential block correlations by prefetching subsequent disk blocks

ahead of time. Several studies [55,14,31] have shown that using even

these simple sequential correlations can significantly improve the storage

system performance. Our results in section 5.5

demonstrate that prefetching based on block correlations can improve the

performance much better than such simple sequential prefetching in most cases. A

storage system can also lay out data in disks according to block correlations.

For example, a block can be collocated with its correlated blocks so that they

can be fetched together using just one disk access. This optimization can reduce

the number of disk seeks and rotations, which dominate the average disk access

latency. With correlation-directed disk layouts, the system only needs to pay a

one-time seek and rotational delay to get multiple blocks that are likely to be

accessed soon. Previous studies [52,54] have shown promising results in allocating

correlated file blocks on the same track to avoid track-switching costs.

Correlations can also be used to direct storage caching. For example, a storage

cache can ``promote'' or ``demote'' a block after its correlated block is

accessed or evicted. After an access to block

, these two blocks can be fetched together from disks whenever one of

them is accessed. The disk read-ahead optimization is an example of exploiting

the simple sequential block correlations by prefetching subsequent disk blocks

ahead of time. Several studies [55,14,31] have shown that using even

these simple sequential correlations can significantly improve the storage

system performance. Our results in section 5.5

demonstrate that prefetching based on block correlations can improve the

performance much better than such simple sequential prefetching in most cases. A

storage system can also lay out data in disks according to block correlations.

For example, a block can be collocated with its correlated blocks so that they

can be fetched together using just one disk access. This optimization can reduce

the number of disk seeks and rotations, which dominate the average disk access

latency. With correlation-directed disk layouts, the system only needs to pay a

one-time seek and rotational delay to get multiple blocks that are likely to be

accessed soon. Previous studies [52,54] have shown promising results in allocating

correlated file blocks on the same track to avoid track-switching costs.

Correlations can also be used to direct storage caching. For example, a storage

cache can ``promote'' or ``demote'' a block after its correlated block is

accessed or evicted. After an access to block

, blocks that are correlated to

, blocks that are correlated to  are likely to be accessed very soon. Therefore, a cache replacement

algorithm can specially ``mark'' these blocks to avoid being evicted. Similarly,

after a block

are likely to be accessed very soon. Therefore, a cache replacement

algorithm can specially ``mark'' these blocks to avoid being evicted. Similarly,

after a block  is evicted, blocks that are correlated to

is evicted, blocks that are correlated to  are not very likely to be accessed soon so it might be OK to also

evict these blocks in subsequent replacement decisions. The storage cache can

also give higher priority to those blocks that are correlated to many other

blocks. Therefore, for databases that use tree structures, it would achieve a

similar effect as the DBMIN cache replacement algorithm that is specially

designed for database workloads [15]. This algorithm gives higher priority to root

blocks or high-level index blocks to stay in a database buffer cache. Besides

performance, block correlations can also be used to improve storage system

security, reliability and energy-efficiency. For example, malicious clients

accesses the storage system in a very different pattern from the normal clients.

By catching abnormal block correlations in an access stream, the storage system

can detect such kind of malicious users. When a file block is archived to a

tertiary storage, its correlated blocks may also need to be backed up in order

to provide consistency. In addition, storage power management schemes can also

take advantage of block correlations by clustering correlated blocks in the same

disk so it is possible for other disks to transition into standby mode [11]. The experiments in this study focus on

demonstrating the benefits of exploiting block correlations in improving storage

system performance. The usages for security, reliability and energy-efficiency

remain as our future work.

are not very likely to be accessed soon so it might be OK to also

evict these blocks in subsequent replacement decisions. The storage cache can

also give higher priority to those blocks that are correlated to many other

blocks. Therefore, for databases that use tree structures, it would achieve a

similar effect as the DBMIN cache replacement algorithm that is specially

designed for database workloads [15]. This algorithm gives higher priority to root

blocks or high-level index blocks to stay in a database buffer cache. Besides

performance, block correlations can also be used to improve storage system

security, reliability and energy-efficiency. For example, malicious clients

accesses the storage system in a very different pattern from the normal clients.

By catching abnormal block correlations in an access stream, the storage system

can detect such kind of malicious users. When a file block is archived to a

tertiary storage, its correlated blocks may also need to be backed up in order

to provide consistency. In addition, storage power management schemes can also

take advantage of block correlations by clustering correlated blocks in the same

disk so it is possible for other disks to transition into standby mode [11]. The experiments in this study focus on

demonstrating the benefits of exploiting block correlations in improving storage

system performance. The usages for security, reliability and energy-efficiency

remain as our future work.

Obtaining Block Correlations

There can be three possible approaches to obtain block correlations in storage

systems. These approaches trade transparency and generality for accuracy at

different degrees. The ``black box'' approach is most transparent and general

because it infers block correlations without any assumption or modification to

storage front-ends. The ``gray box'' approach does not need modifications to

front-end software but makes certain assumptions about front-ends and also

requires probing from front-ends. The ``white box'' approach completely relies

on front-ends to provide information and therefore has the most accurate

information but is least transparent.

infer block correlations completely inside a storage system, without any

assumption on the storage front-ends. One commonly used method of this approach

is to infer block correlations based on accesses. The observation is that

correlated blocks are usually accessed relatively close to each other.

Therefore, if two blocks are almost always accessed together within a short

access distance, it is very likely that these two blocks are correlated to each

other. In other words, it is possible to automatically infer block correlations

in a storage system by dynamically analyzing the access stream. In the field of

networked or mobile file systems, researchers have proposed semantic distance

(SD) [34,35] or probability graphs [24,25] to capture file correlations in

file systems. The main idea is to use a graph to record the number of times two

items are accessed within a specified access distance. In an SD graph, a node

represents an accessed item  with edges linking to other items. The weight of each edge

with edges linking to other items. The weight of each edge  is the number of times that

is the number of times that  is accessed within the specified lookahead window of

is accessed within the specified lookahead window of  's access. So if the weight for an edge is large, the corresponding

items are probably correlated. The algorithm to build the SD graph from an

access stream works like this: Suppose the specified lookahead window size is

100, i.e., accesses that are less than 100 accesses apart are considered to be

``close'' accesses. Initially the probability graph is empty. The algorithm

processes each access one after another. The algorithm always keeps track of the

items of most recent 100 accesses in the current sliding window. When an item

's access. So if the weight for an edge is large, the corresponding

items are probably correlated. The algorithm to build the SD graph from an

access stream works like this: Suppose the specified lookahead window size is

100, i.e., accesses that are less than 100 accesses apart are considered to be

``close'' accesses. Initially the probability graph is empty. The algorithm

processes each access one after another. The algorithm always keeps track of the

items of most recent 100 accesses in the current sliding window. When an item  is accessed, it adds node

is accessed, it adds node

into the graph if it is not in the graph yet. It also increments the

weight of the edge

into the graph if it is not in the graph yet. It also increments the

weight of the edge  for any

for any  accessed during the current window. If such an edge is not in the

graph, it adds this edge and sets the initial weight to be 1. After the entire

access stream is processed, the algorithm rescans the SD graph and only records

those correlations with weights larger than a given threshold. Even though

probability graphs or SD graphs work well for inferring file correlations in a

file system, they, unfortunately, are not practical for inferring block

correlations in storage systems because of two reasons. (1) Scalability

problem: a semantic distance graph requires one node to represent each

accessed item and also one edge to capture each non-zero-weight correlation.

When the system has a huge number of items as in a storage system, an SD graph

is too big to be practical. For instance, if we assume the specified window size

is 100, it may require more than 100 edges associated with each node. Therefore,

one node would occupy at least

accessed during the current window. If such an edge is not in the

graph, it adds this edge and sets the initial weight to be 1. After the entire

access stream is processed, the algorithm rescans the SD graph and only records

those correlations with weights larger than a given threshold. Even though

probability graphs or SD graphs work well for inferring file correlations in a

file system, they, unfortunately, are not practical for inferring block

correlations in storage systems because of two reasons. (1) Scalability

problem: a semantic distance graph requires one node to represent each

accessed item and also one edge to capture each non-zero-weight correlation.

When the system has a huge number of items as in a storage system, an SD graph

is too big to be practical. For instance, if we assume the specified window size

is 100, it may require more than 100 edges associated with each node. Therefore,

one node would occupy at least

(assuming each edge requires 8 bytes to store the

weight and the disk block number of

(assuming each edge requires 8 bytes to store the

weight and the disk block number of  ). For a small storage system with only 80 GB and a block size of 8

KB, the probability graph would occupy 8 GB, 10% of the storage capacity.

Besides space overheads, building and searching such a large graph would also

take a significantly large amount of time. (2) Multi-block correlation

problem: these graphs cannot represent correlations that involve more than

two blocks. For example, the block correlations described at the end of the

Section 2.1 cannot be conveniently represented

in a semantic distance graph. Therefore, these techniques can lose some

important block correlations. In this paper, we present a practical black box

approach that uses a data mining method to automatically infer both dual-block

and multi-block correlations in storage systems. In Section 3,

we describe our approach in detail.

). For a small storage system with only 80 GB and a block size of 8

KB, the probability graph would occupy 8 GB, 10% of the storage capacity.

Besides space overheads, building and searching such a large graph would also

take a significantly large amount of time. (2) Multi-block correlation

problem: these graphs cannot represent correlations that involve more than

two blocks. For example, the block correlations described at the end of the

Section 2.1 cannot be conveniently represented

in a semantic distance graph. Therefore, these techniques can lose some

important block correlations. In this paper, we present a practical black box

approach that uses a data mining method to automatically infer both dual-block

and multi-block correlations in storage systems. In Section 3,

we describe our approach in detail.

are investigated by Arpaci-Dusseau et al in [5]. They

developed three gray-box information and control layers between a client and the

OS, and combined algorithmic knowledge, observations and inferences to collect

information. The gray-box idea has been explored by Sivathanu et al in storage

systems to automatically obtain file-system knowledge [54]. The main idea is to probe from a storage

front-end by performing some standard operations and then observing the

triggered I/O accesses to the storage system. It works very well for file

systems that conform to FFS-like structure (if the front-end security is not a

concern). The advantage of this approach is that it does not require any

modification to the storage front-end software. The tradeoff is that it requires

the front-end to conform to specific disk layouts such as FFS-like structure.

rely on storage front-ends to directly pass semantic information to obtain

block correlations in a storage system. For example, the storage I/O interface

can be modified using a higher-level, object-like interface [23] so that correlations can be easily expressed

using the object interface. The advantage with this approach is that it can

obtain very accurate information about block correlations from storage

front-ends. However, it requires modifying storage front-end software, some of

which, such as database servers, are too large to be easily ported to

object-based storage interface.

Mining for Block Correlations

Data mining, also known as knowledge discovery in databases (KDD), has developed

quickly in recent years due to the wide availability of voluminous data and the

imminent need for extracting useful information and knowledge from them.

Traditional methods of data analysis dependent on human handling cannot scale

well to huge sizes of data sets. In this section, we first introduce some

fundamental data mining concepts and analysis methods used in our paper and then

describe C-Miner, our algorithm for inferring block correlations in

storage systems.

Frequent Sequence Mining

Different patterns can be discovered by different data mining techniques,

including association analysis, classification and prediction, cluster analysis,

outlier analysis, and evolution analysis [27]. Among these techniques, association

analysis can help discover correlations between two or more sets of events or

attributes. Suppose there exists a strong association between events  and

and  , it means that if event

, it means that if event  happens, event

happens, event

is also very likely to happen. We use the association rule

is also very likely to happen. We use the association rule

to describe such a correlation between these two events.

Frequent sequence mining is one type of association analysis to discover

frequent subsequences in a sequence database [1]. A subsequence is considered

frequent when it occurs in at least a specified number of sequences

(called min_support) in the sequence database. A subsequence is not

necessarily contiguous in an original sequence. For example, a sequence database

to describe such a correlation between these two events.

Frequent sequence mining is one type of association analysis to discover

frequent subsequences in a sequence database [1]. A subsequence is considered

frequent when it occurs in at least a specified number of sequences

(called min_support) in the sequence database. A subsequence is not

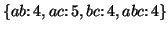

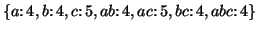

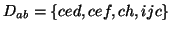

necessarily contiguous in an original sequence. For example, a sequence database  has five sequences:

has five sequences:

The number of occurrences of subsequence  is 4. We denote the number of occurrences of a subsequence as its

is 4. We denote the number of occurrences of a subsequence as its  . Obviously, the smaller

. Obviously, the smaller  is, the more frequent subsequences the database contains. In

the above example, if

is, the more frequent subsequences the database contains. In

the above example, if  is specified as 5, only the subsequence

is specified as 5, only the subsequence  is frequent; if

is frequent; if  is specified as 4, the frequent subsequences are

is specified as 4, the frequent subsequences are

, where the numbers are the supports of the subsequences.

Frequent sequence mining is an active research topic in data mining [67,46,6] with broad applications,

such as mining motifs in DNA sequences, analysis of customer shopping sequences

etc. To the best of our knowledge, our study is the first one that uses frequent

sequence mining to discover patterns in storage systems.

C-Miner is based on a recently proposed frequent sequence mining

algorithm called CloSpan (Closed Sequential Pattern mining)[66]. The main idea of CloSpan is to

find only closed frequent subsequences. A closed sequence is a

subsequence whose support is different from that of its super-sequences. In the

above example, subsequence

, where the numbers are the supports of the subsequences.

Frequent sequence mining is an active research topic in data mining [67,46,6] with broad applications,

such as mining motifs in DNA sequences, analysis of customer shopping sequences

etc. To the best of our knowledge, our study is the first one that uses frequent

sequence mining to discover patterns in storage systems.

C-Miner is based on a recently proposed frequent sequence mining

algorithm called CloSpan (Closed Sequential Pattern mining)[66]. The main idea of CloSpan is to

find only closed frequent subsequences. A closed sequence is a

subsequence whose support is different from that of its super-sequences. In the

above example, subsequence  is closed because its support is 5, and the support of any one of

its super-sequences (for example,

is closed because its support is 5, and the support of any one of

its super-sequences (for example,  and

and  , etc.) is no more than 4; on the other hand, subsequence

, etc.) is no more than 4; on the other hand, subsequence  is not closed because its support is the same as that of one of its

super-sequences,

is not closed because its support is the same as that of one of its

super-sequences,  . CloSpan only produces the closed frequent subsequences rather

than all frequent subsequences since any non-closed subsequences can be

indicated by their super-sequences with the same support. In the above example,

the frequent subsequences are

. CloSpan only produces the closed frequent subsequences rather

than all frequent subsequences since any non-closed subsequences can be

indicated by their super-sequences with the same support. In the above example,

the frequent subsequences are

, but we

only need to produce the closed subsequences

, but we

only need to produce the closed subsequences

. This feature significantly reduces the number of patterns

generated, especially for long frequent subsequences. More details can be found

in [46,66].

. This feature significantly reduces the number of patterns

generated, especially for long frequent subsequences. More details can be found

in [46,66].

C-Miner: Our Mining Algorithm

Frequent sequence mining is a good candidate for inferring block correlations in

storage systems. One can map a block to an item, and an access sequence to a

sequence in the sequence database. Using frequent sequence mining, we can obtain

all the frequent subsequences in an access stream. A frequent subsequence

indicates that the involved blocks are frequently accessed together. In other

words, frequent subsequences are good indications of block correlations in a

storage system. One limitation with the basic mining algorithm is that it does

not consider the gap of a frequent subsequence. If a frequent sequence contains

two accesses that are very far from each other in terms of access time, such a

correlation is not interesting for our application. From the system's point of

view, it is much more interesting to consider frequent access subsequences that

are not far apart. For example, if a frequent subsequence  always appears in the original sequence with a distance of more

than 1000 accesses, it is not a very interesting pattern because it is hard for

storage systems to exploit it. Further, such correlations are generally less

accurate. To address this issue, C-Miner restricts access distances. In

order to describe how far apart two accesses are in the access stream, the

access distance between them is denoted as

always appears in the original sequence with a distance of more

than 1000 accesses, it is not a very interesting pattern because it is hard for

storage systems to exploit it. Further, such correlations are generally less

accurate. To address this issue, C-Miner restricts access distances. In

order to describe how far apart two accesses are in the access stream, the

access distance between them is denoted as  , measured by the number of accesses between these two accesses. We

specify a maximum distance threshold, denoted as

, measured by the number of accesses between these two accesses. We

specify a maximum distance threshold, denoted as  . All the uninteresting frequent sequences whose gaps are

larger than the threshold are filtered out. This is similar to the lookahead

window used in the semantic distance algorithms.

. All the uninteresting frequent sequences whose gaps are

larger than the threshold are filtered out. This is similar to the lookahead

window used in the semantic distance algorithms.

Existing frequent sequence mining algorithms including CloSpan are designed to

discover patterns for a sequence database rather than a single long sequence of

time-series information as in storage systems. To overcome this limitation,

C-Miner preprocesses the access sequence (that is, the history access

trace) by breaking it into fixed-size short sequences. The size of each short

sequence is called

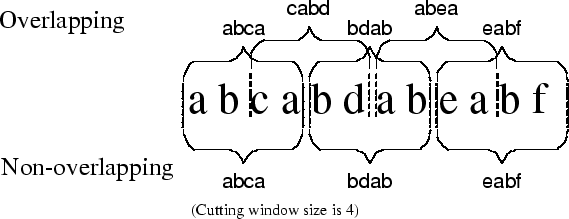

cutting window size. There are two ways to cut the long access stream

into short sequences - overlapped cutting and non-overlapped cutting. The

overlapped cutting divides an entire access stream into many short sequences and

leaves some overlapped regions between any two consecutive sequences.

Non-overlapped cutting is straightforward; it simply splits the access stream

into access sequences of equal size. Figure 3

illustrates how these two methods cut the access stream  into short sequences with length of 4. Overlapped cutting

may increase the number of occurrences for some subsequences if it falls in the

overlapped region. In the example shown in Figure

3, using overlapped cutting results in 5 short sequences:

into short sequences with length of 4. Overlapped cutting

may increase the number of occurrences for some subsequences if it falls in the

overlapped region. In the example shown in Figure

3, using overlapped cutting results in 5 short sequences:

. The subsequences

. The subsequences  in

in  and

and  occurs only once in the original access stream, but now is

counted twice since the short sequences,

occurs only once in the original access stream, but now is

counted twice since the short sequences,  and

and  , overlap with each other. It is quite difficult to determine how

many redundant occurrences there are due to overlapping. Another drawback is

that the overlapped cutting generates more sequences than non-overlapped

cutting. Therefore it takes the mining algorithm a longer time to infer frequent

subsequences.

, overlap with each other. It is quite difficult to determine how

many redundant occurrences there are due to overlapping. Another drawback is

that the overlapped cutting generates more sequences than non-overlapped

cutting. Therefore it takes the mining algorithm a longer time to infer frequent

subsequences.

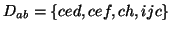

Figure 3: Overlapping and

non-overlapping window

|

Using non-overlapped cutting can, however, lead to loss of frequent subsequences

that are split into two or more sequences, and therefore can decrease the

support values of some frequent subsequences because some of their occurrences

are split into two sequences. In the example shown in figure

3, the non-overlapped cutting results in only 3 sequences:

. The support for

. The support for  is 3, but the actual support in the original long sequence is 4.

The lost support is because the second occurrence is broken across two windows

and is therefore not counted. But we believe that the amount of lost information

in the non-overlapped cutting scheme is quite small, especially if the cutting

window size is relatively large. Since C-Miner restricts the access

distance of a frequent subsequence, only a few frequent subsequences may be

split across multiple windows. Suppose the instances of a frequent subsequence

are distributed uniformly in the access stream, the cutting windows size is

is 3, but the actual support in the original long sequence is 4.

The lost support is because the second occurrence is broken across two windows

and is therefore not counted. But we believe that the amount of lost information

in the non-overlapped cutting scheme is quite small, especially if the cutting

window size is relatively large. Since C-Miner restricts the access

distance of a frequent subsequence, only a few frequent subsequences may be

split across multiple windows. Suppose the instances of a frequent subsequence

are distributed uniformly in the access stream, the cutting windows size is  and the maximum access distance for frequent sequences is

and the maximum access distance for frequent sequences is  (

(

). Then, in the worst case, the probability that an

instance of a frequent subsequence is split across two sequences is

). Then, in the worst case, the probability that an

instance of a frequent subsequence is split across two sequences is  . For example, if the access distance is limited within

50, and the cutting window size is 500 accesses, the support value is lost by at

most 10% in the worst case. Therefore, most frequent subsequences would still be

considered frequent after non-overlapped cutting. Based on this analysis, we use

non-overlapped cutting in our experiments.

. For example, if the access distance is limited within

50, and the cutting window size is 500 accesses, the support value is lost by at

most 10% in the worst case. Therefore, most frequent subsequences would still be

considered frequent after non-overlapped cutting. Based on this analysis, we use

non-overlapped cutting in our experiments.

Once it has a database of short sequences, C-Miner

mines the database and produces frequent subsequences, which can then be used to

derive block correlations. C-Miner mainly consists of two stages: (1)

generating a candidate set of frequent subsequences that includes all the closed

frequent subsequences; and (2) pruning the non-closed subsequences from the

candidate set. In the first stage, C-Miner generates a candidate set of

frequent sequences using a depth-first search procedure. The following

pseudo-code shows the mining algorithm. In the algorithm,  is a suffix database which contains all the maximum suffixes of

the sequences that contain the frequent subsequence

is a suffix database which contains all the maximum suffixes of

the sequences that contain the frequent subsequence  . For example, in the previous sequence database

. For example, in the previous sequence database  , the suffix database of frequent subsequences

, the suffix database of frequent subsequences  is

is

.

.

![\begin{boxedminipage}[thb]{3in}

\renewcommand {1.09}{1}\begin{tabbing}\hspace{...

...amond \alpha$\ means to concatenate $s$\ with $\alpha$.)}

\end{boxedminipage}](img50.png)

There are two main ideas in C-Miner to improve the mining efficiency.

The first idea is based on an obvious observation that if a sequence is

frequent, then all of its subsequences are frequent. For example, if a sequence  is frequent, all of its subsequences

is frequent, all of its subsequences

are frequent. Based on this observation, C-Miner recursively

produces a longer frequent subsequence by concatenating every frequent item to a

shorter frequent subsequence that has already been obtained in the previous

iterations. To better explain this idea, let us consider an example. In order to

get the set

are frequent. Based on this observation, C-Miner recursively

produces a longer frequent subsequence by concatenating every frequent item to a

shorter frequent subsequence that has already been obtained in the previous

iterations. To better explain this idea, let us consider an example. In order to

get the set  of frequent subsequences with length

of frequent subsequences with length  , we can join the set

, we can join the set  of frequent subsequences with length

of frequent subsequences with length  and the set

and the set  of frequent subsequences with length 1. For example, suppose we

have already computed

of frequent subsequences with length 1. For example, suppose we

have already computed  and

and  as shown below. In order to compute

as shown below. In order to compute  , we can first compute

, we can first compute  by concatenating a subsequence from

by concatenating a subsequence from  and an item from

and an item from  :

:

For greater efficiency, C-Miner does not join the sequences in set  with all the items in

with all the items in  . Instead, each sequence in

. Instead, each sequence in  is concatenated with only the frequent items in its suffix

database. In our example, for the frequent sequence

is concatenated with only the frequent items in its suffix

database. In our example, for the frequent sequence  in

in  , its suffix database is

, its suffix database is

, and only

, and only  is the frequent item, so

is the frequent item, so  is only concatenated with

is only concatenated with  and then we get a longer sequence

and then we get a longer sequence  that belongs to

that belongs to  . The second idea is used for efficiently evaluating whether a

concatenated subsequence is frequent or not. It tries to avoid searching through

the whole database. Instead, it only checks with certain suffixes. In the above

example, for each sequence

. The second idea is used for efficiently evaluating whether a

concatenated subsequence is frequent or not. It tries to avoid searching through

the whole database. Instead, it only checks with certain suffixes. In the above

example, for each sequence  in

in

, C-Miner checks whether it is frequent or not by

searching the suffix database

, C-Miner checks whether it is frequent or not by

searching the suffix database  . If the number of its occurrences is greater than

. If the number of its occurrences is greater than  ,

,  is added into

is added into  , which is the set of frequent subsequences of length 3.

C-Miner continues computing

, which is the set of frequent subsequences of length 3.

C-Miner continues computing  from

from  ,

,

from

from  , and so on until no more subsequences can be added into the set of

frequent subsequences. In order to mine frequent sequences more efficiently,

C-Miner uses a technique that can efficiently determine whether there are

new closed patterns in search subspaces and stop checking those unpromising

subspaces. The basic idea is based on the following observation about a closed

sequence property. In the algorithm step 2, among all the sequences in

, and so on until no more subsequences can be added into the set of

frequent subsequences. In order to mine frequent sequences more efficiently,

C-Miner uses a technique that can efficiently determine whether there are

new closed patterns in search subspaces and stop checking those unpromising

subspaces. The basic idea is based on the following observation about a closed

sequence property. In the algorithm step 2, among all the sequences in  , if an item

, if an item  always occurs before another item

always occurs before another item

, C-Miner does not need to search any sequences with prefix

, C-Miner does not need to search any sequences with prefix  . The reason is that

. The reason is that

,

,

is not closed under this condition. Take the previous

sequence database as an example.

is not closed under this condition. Take the previous

sequence database as an example.  always occurs before

always occurs before

, so any subsequence with prefix

, so any subsequence with prefix  is not closed because it is also a subsequence with prefix

is not closed because it is also a subsequence with prefix  . Therefore, C-Miner does not need to search the frequent

sequences with prefix

. Therefore, C-Miner does not need to search the frequent

sequences with prefix  because all these frequent sequences are included in the frequent

sequences with prefix

because all these frequent sequences are included in the frequent

sequences with prefix

(e.g.,

(e.g.,  is included in

is included in  with support 4). Without searching these unpromising branches,

C-Miner can generate the candidate frequent sequences much more

efficiently.

with support 4). Without searching these unpromising branches,

C-Miner can generate the candidate frequent sequences much more

efficiently.

C-Miner produces frequent sequences that indicate block correlations,

but it does not directly generate the association rules in the form of

, which is much easier to use in storage systems.

In order to convert the frequent sequences into association rules, C-Miner

breaks each sequence into several rules. In order to limit the number of rules,

C-Miner constrains the length of a rule (the number of items on the left

side of a rule). For example, a frequent sequence

, which is much easier to use in storage systems.

In order to convert the frequent sequences into association rules, C-Miner

breaks each sequence into several rules. In order to limit the number of rules,

C-Miner constrains the length of a rule (the number of items on the left

side of a rule). For example, a frequent sequence  may be broken into the following set of rules with the same

support of

may be broken into the following set of rules with the same

support of

:

:

Different closed frequent sequences can be broken into the same rules. For

example, both  and

and  can be broken into the same rule

can be broken into the same rule

, but they may have different support values. The

support of a rule is the maximum support of all corresponding closed

frequent sequences.

, but they may have different support values. The

support of a rule is the maximum support of all corresponding closed

frequent sequences.

For each association rule, we also need to evaluate its accuracy . In order to

describe the reliability of a rule, we introduce  to measure the accuracy. For example, in the above example,

to measure the accuracy. For example, in the above example,  occurs 5 times, but

occurs 5 times, but  only occurs 4 times; this means that when

only occurs 4 times; this means that when  is accessed,

is accessed,  is also accessed in the near future (within

is also accessed in the near future (within

distance) with probability 80%. We call this probability the

confidence of the rule. When we use an association rule to predict future

accesses, its confidence indicates the expected prediction accuracy. Predictions

based on low-confidence rules are likely to be wrong and may not be able to

improve system performance. Worse still, they may hurt the system performance

due to overheads and side-effects. Because of this, we use confidence to

restrict rules and filter out those with low probability. The

distance) with probability 80%. We call this probability the

confidence of the rule. When we use an association rule to predict future

accesses, its confidence indicates the expected prediction accuracy. Predictions

based on low-confidence rules are likely to be wrong and may not be able to

improve system performance. Worse still, they may hurt the system performance

due to overheads and side-effects. Because of this, we use confidence to

restrict rules and filter out those with low probability. The  metric is different from

metric is different from  . For example, suppose

. For example, suppose  and

and  are accessed only once in the entire access stream and their

accesses are within the

are accessed only once in the entire access stream and their

accesses are within the  distance, the confidence of the association rule

distance, the confidence of the association rule

is 100% whereas its support is only 1. This rule is not very

interesting because it happens rarely. On the other hand, if a rule has high

support but very low confidence (e.g. 5%), it may not be useful because it is

too inaccurate to be used for prediction. Therefore, in practice, we usually

specify a minimum support threshold

is 100% whereas its support is only 1. This rule is not very

interesting because it happens rarely. On the other hand, if a rule has high

support but very low confidence (e.g. 5%), it may not be useful because it is

too inaccurate to be used for prediction. Therefore, in practice, we usually

specify a minimum support threshold  and a minimum confidence threshold

and a minimum confidence threshold  in order to filter low-quality association rules. We can

estimate the confidence for each rule in a simple way. Suppose we need to

compute the confidence for rule

in order to filter low-quality association rules. We can

estimate the confidence for each rule in a simple way. Suppose we need to

compute the confidence for rule

. Assume that the supports for

. Assume that the supports for  and

and  are

are  and

and  , respectively. Then the confidence for this rule is

, respectively. Then the confidence for this rule is

. Since both sides of each rule are frequent sequences

(or frequent items) and the supports for all the frequent sequences are already

obtained from post-processing,

. Since both sides of each rule are frequent sequences

(or frequent items) and the supports for all the frequent sequences are already

obtained from post-processing,  and

and  are ready to be used for computing the confidence of the rule.

Compared with other methods such as probability graphs or SD graphs,

C-Miner can find more correlations, especially those multi-block

correlations. From our experiments, we find that these multi-block correlations

are very useful for systems. For dual-block correlations, which can also be

inferred using previous approaches, C-Miner is more efficient. First,

C-Miner is much more space efficient than SD graphs because it does not

need to maintain the information for non-frequent sequences, whereas SD graphs

need to keep the information for every block during the graph building process.

Second, in terms of time complexity, C-Miner is the same (

are ready to be used for computing the confidence of the rule.

Compared with other methods such as probability graphs or SD graphs,

C-Miner can find more correlations, especially those multi-block

correlations. From our experiments, we find that these multi-block correlations

are very useful for systems. For dual-block correlations, which can also be

inferred using previous approaches, C-Miner is more efficient. First,

C-Miner is much more space efficient than SD graphs because it does not

need to maintain the information for non-frequent sequences, whereas SD graphs

need to keep the information for every block during the graph building process.

Second, in terms of time complexity, C-Miner is the same ( ) as SD. But in practice, since C-Miner has much smaller

memory footprint size, it is more efficient and can run in a cheap uniprocessor

machine with moderate memory size as used in our experiments. Other frequent

sequence mining algorithms such as PrefixSpan[46] can also find long frequent

sequences. Compared with these frequent sequence mining algorithms,

C-Miner is more efficient for discovering long frequent sequences

because it not only avoids searching the non-frequent sequences while generating

longer sequences, but also prunes all the unpromising searching branches

according to the closed sequence property as we have discussed. C-Miner

can outperform PrefixSpan by an order of magnitude for some datasets.

) as SD. But in practice, since C-Miner has much smaller

memory footprint size, it is more efficient and can run in a cheap uniprocessor

machine with moderate memory size as used in our experiments. Other frequent

sequence mining algorithms such as PrefixSpan[46] can also find long frequent

sequences. Compared with these frequent sequence mining algorithms,

C-Miner is more efficient for discovering long frequent sequences

because it not only avoids searching the non-frequent sequences while generating

longer sequences, but also prunes all the unpromising searching branches

according to the closed sequence property as we have discussed. C-Miner

can outperform PrefixSpan by an order of magnitude for some datasets.

Case Studies

The block correlation information inferred by C-Miner can be used to

prefetch more intelligently. Assume that C-Miner has obtained a block

correlation rule: if block  is accessed, block

is accessed, block  will also be accessed soon within a short distance ( of length

will also be accessed soon within a short distance ( of length  ) with a certain confidence (probability). Based on this rule, when

there is an access to block

) with a certain confidence (probability). Based on this rule, when

there is an access to block  , we can prefetch block

, we can prefetch block  into the storage cache since it will probably be accessed soon.

Doing such can avoid future accesses to disks to fetch these blocks. Several

design issues should be considered while using block correlations for

prefetching. One of the most important issues is how to effectively share the

limited size cache for both caching and prefetching. If prefetching is too

aggressive, it can pollute the storage cache and may even degrade the cache hit

ratio and system performance. This problem has been investigated thoroughly by

previous work [9,10,45]. We therefore do not investigate

it further in our paper. In our simulation experiments, we simply fix the cache

size for prefetched data so it does not compete with non-prefetching requests.

However, the total cache size is fixed at the same value for the system with and

without prefetching in order to have a fair comparison. Another design issue is

the extra disk load imposed by prefetch requests. If the disk load is too heavy,

the disk utilization is close to 100%. In this case, prefetching can add

significant overheads to demand requests, canceling out the benefits of improved

storage cache hit ratio. Two methods can be used to alleviate this problem. The

first method is to differentiate between demand requests and prefetch requests

by using a priority-based disk scheduling scheme. In particular, the system uses

two waiting queues in the disk scheduler: critical and non-critical. All the

demand requests are issued to the critical queue, while the prefetch requests

are issued to the non-critical queue which has lower priority. The other method

is to throttle the prefetch requests to a disk if the disk is heavily utilized.

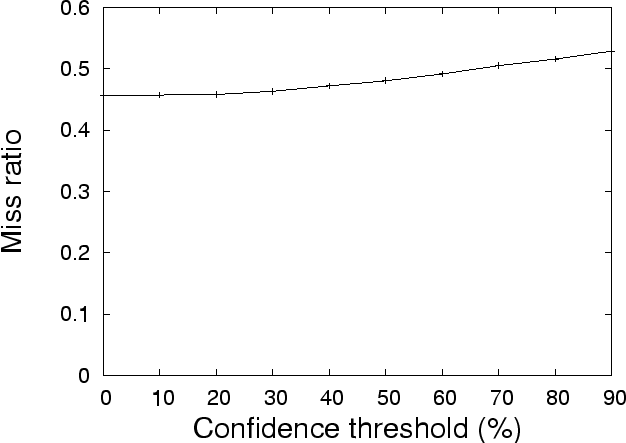

Since the correlation rules have different confidences, we can set a confidence

threshold to limit the number of rules that are used for prefetching. All the

rules with confidence lower than the threshold are ignored. Obviously, the

higher the confidence threshold is, the fewer the rules are used. Therefore, CDP

acts less aggressively. In order to adjust the threshold to make prefetching

adapt to the current disk workload, we keep track of the current load on each

disk. When the workload is too high, say the disk utilization is more than 80%,

we increase the confidence threshold for correlation rules that direct the

issuing of prefetch requests to this disk. Once the disk load drops down to a

low level, say the utilization is less than 50%, we decrease the confidence

threshold for correlation rules so that more rules can be used for prefetching.

By doing this, the overhead on disk bandwidth caused by prefetches is kept

within an acceptable range.

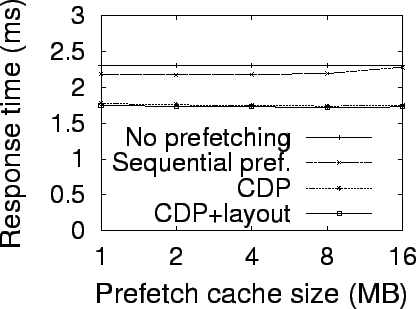

Block correlations can help lay out data on disks to improve performance. The

dominant latencies in a disk access are the seek time and rotation delay. So if

correlated blocks can be allocated together on a disk and can be fetched using

one disk access, the total seek time and rotation delay for all these blocks can

be reduced. Thereafter, both of the throughput and the response time can be

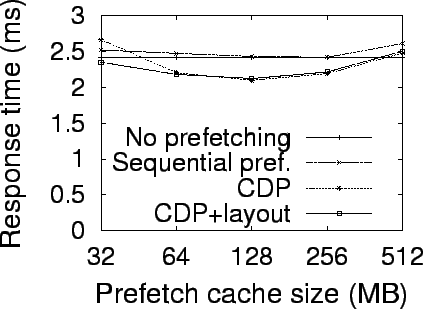

improved. But CDP is more effective than disk layout for improving response time

as shown in section 5.5. We can lay out

the blocks on disks based on block correlatoins like that: if we know a

correlation

into the storage cache since it will probably be accessed soon.

Doing such can avoid future accesses to disks to fetch these blocks. Several

design issues should be considered while using block correlations for

prefetching. One of the most important issues is how to effectively share the

limited size cache for both caching and prefetching. If prefetching is too

aggressive, it can pollute the storage cache and may even degrade the cache hit

ratio and system performance. This problem has been investigated thoroughly by

previous work [9,10,45]. We therefore do not investigate

it further in our paper. In our simulation experiments, we simply fix the cache

size for prefetched data so it does not compete with non-prefetching requests.

However, the total cache size is fixed at the same value for the system with and

without prefetching in order to have a fair comparison. Another design issue is

the extra disk load imposed by prefetch requests. If the disk load is too heavy,

the disk utilization is close to 100%. In this case, prefetching can add

significant overheads to demand requests, canceling out the benefits of improved

storage cache hit ratio. Two methods can be used to alleviate this problem. The

first method is to differentiate between demand requests and prefetch requests

by using a priority-based disk scheduling scheme. In particular, the system uses

two waiting queues in the disk scheduler: critical and non-critical. All the

demand requests are issued to the critical queue, while the prefetch requests

are issued to the non-critical queue which has lower priority. The other method

is to throttle the prefetch requests to a disk if the disk is heavily utilized.

Since the correlation rules have different confidences, we can set a confidence

threshold to limit the number of rules that are used for prefetching. All the

rules with confidence lower than the threshold are ignored. Obviously, the

higher the confidence threshold is, the fewer the rules are used. Therefore, CDP

acts less aggressively. In order to adjust the threshold to make prefetching

adapt to the current disk workload, we keep track of the current load on each

disk. When the workload is too high, say the disk utilization is more than 80%,

we increase the confidence threshold for correlation rules that direct the

issuing of prefetch requests to this disk. Once the disk load drops down to a

low level, say the utilization is less than 50%, we decrease the confidence

threshold for correlation rules so that more rules can be used for prefetching.

By doing this, the overhead on disk bandwidth caused by prefetches is kept

within an acceptable range.

Block correlations can help lay out data on disks to improve performance. The

dominant latencies in a disk access are the seek time and rotation delay. So if

correlated blocks can be allocated together on a disk and can be fetched using

one disk access, the total seek time and rotation delay for all these blocks can

be reduced. Thereafter, both of the throughput and the response time can be

improved. But CDP is more effective than disk layout for improving response time

as shown in section 5.5. We can lay out

the blocks on disks based on block correlatoins like that: if we know a

correlation  from C-Miner, we can try to allocate them contiguously

in a disk. Whenever any one of these blocks is read, all four blocks are fetched

together into the storage cache using one disk access. Since some blocks may

appear in several patterns, we allocate the block based on the rules with

highest support value. One of the main design issues is how to maintain the

directory information and reorganize data without an impact on the foreground

workload. After reorganizing disk layouts, we need to map logical block numbers

to new physical block numbers. The mapping table might become very large. Some

previous work has studied these issues and shown that disk layout reorganization

is feasible to implement [50]. They proposed a

two-tiered software architecture to combine multiple disk layout heuristics so

that it adapts to different environments. Block correlation-directed disk layout

can be one of the heuristics in their framework. Due to space limitation, we do

not discuss this issue further.

from C-Miner, we can try to allocate them contiguously

in a disk. Whenever any one of these blocks is read, all four blocks are fetched

together into the storage cache using one disk access. Since some blocks may

appear in several patterns, we allocate the block based on the rules with

highest support value. One of the main design issues is how to maintain the

directory information and reorganize data without an impact on the foreground

workload. After reorganizing disk layouts, we need to map logical block numbers

to new physical block numbers. The mapping table might become very large. Some

previous work has studied these issues and shown that disk layout reorganization

is feasible to implement [50]. They proposed a

two-tiered software architecture to combine multiple disk layout heuristics so

that it adapts to different environments. Block correlation-directed disk layout

can be one of the heuristics in their framework. Due to space limitation, we do

not discuss this issue further.

Simulation Results

Evaluation Methodology

To evaluate the benefits of exploiting block correlations in block prefetching

and disk data layout, we use trace-driven simulations with several large disk

traces collected in real systems. Our simulator combines the widely used DiskSim

simulator [20] with a storage cache simulator,

CacheSim, to simulate a complete storage system. CacheSim implements the Least

Recently Used (LRU) replacement policy. Accesses to the simulated storage system

first go through a storage cache and only read misses or writes access physical

disks. The simulated disk specification is similar to that of the 10000 RPM IBM

Ultrastar 36Z15. The parameters are taken from the disk's data sheet [28,11]. Our experiments use the following four real

system traces:

- TPC-C Trace is an I/O trace collected on a storage system connected

to a Microsoft SQL Server via storage area network. The Microsoft Server SQL

clients connect to the Microsoft SQL Server via Ethernet and run the TPC-C

benchmark [39] for 2 hours. The database consists of 256

warehouses and the footprint is 60GB, and the storage system employs a RAID of 4

disks. A more detailed description of this trace can be found in [69,13].

- Cello-92 was collected at Hewlett-Packard Laboratories in 1992 [49,48]. It captured all low-level disk I/O

performed by the system. We used the trace gathered on Cello, which is a

timesharing system used by a group of researchers at HP Labs to do simulations,

compilation, editing and email. The trace includes the accesses to 8 disks. We

have also tried other HP disk trace files, and the results are similar.

- Cello-96 is similar to Cello-92. The only difference is that this

trace was collected in 1996 and thereby contains more modern workloads. It

includes the accesses to 20 disks from multiple users and miscellaneous

applications. It contains a lot of sequential access patterns, so the simple

sequential prefetching approaches can significantly benefit from them.

- OLTP is a trace of an OLTP application running at a large financial

institution. It was made available by the Storage Performance Council [58]. The disk subsystem is composed of 19

disks.

All the traces are collected after filtering through a first-level buffer cache

such as the database server cache. Fortunately, unlike other access patterns

such temporal locality that can be filtered by large first-level buffer caches,

most block correlations can still be discovered at the second-level. Only those

correlations involving ``hot'' blocks that always stay at the first-level can be

lost at the second-level. However, these correlations are not useful to exploit

anyway since ``hot'' blocks are kept at the first-level and therefore are rarely

accessed at the second-level. In our experiments, we use only the first half

part of the trace to mine block correlations using C-Miner. Using these

correlation rules, we evaluate the performance of correlation-directed

prefetching and data layout using the rest of the traces. The correlation rules

are kept unchanged during the evaluation phase. For example, in Cello-92, we use

the first 3-days' trace to mine block correlations and use the following 4 days

to evaluate the correlation-directed prefetching and data layout. The reason for

doing this is to show the stable characteristic of block correlations and

predictive powers of our method. To provide a more fair comparison, we also

implement the commonly used sequential prefetching scheme. At non-consecutive

misses to disks, the system also issues a prefetch request to load 16

consecutive blocks. We have also tried prefetching more or fewer blocks, but the

results are similar or worse.

Visualization of Block Correlations

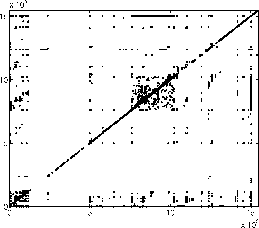

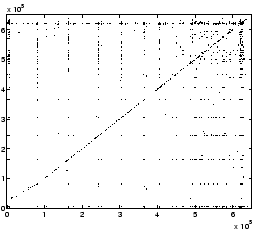

Figure 4 plots the block

correlations discovered by C-Miner from the Cello-96 and TPC-C traces.

Since multi-block correlations are difficult to visualize, we plot only

dual-block correlations. If there is an association rule

, we plot a corresponding point at

, we plot a corresponding point at  . Therefore, each point

. Therefore, each point  in the graphs indicates a correlation between blocks

in the graphs indicates a correlation between blocks  and

and  . Since the traces contain multiple disks' accesses, we plot the disk

block address using a unified continuous address space by plotting one disk

address space after another.

. Since the traces contain multiple disks' accesses, we plot the disk

block address using a unified continuous address space by plotting one disk

address space after another.

Figure 4: Block correlations mined from

traces

(a) Cello-96 trace

|

(b) TPC-C trace

|

|

Simple patterns such as spatial locality can be demonstrated in such a

correlation graph. It is indicated by dark areas around the diagonal line. This

is because the spatial locality can be represented by an association rule

where

where  is a small number, which means that if block

is a small number, which means that if block  accessed, its neighbor blocks are likely to be accessed soon. Since

accessed, its neighbor blocks are likely to be accessed soon. Since  is small, the points

is small, the points  are around the diagonal line, as shown on the Cello-96 traces (Figure 4a).

The graph for the TPC-C trace does not have such apparent characteristic,

indicating TPC-C does not have strong spatial locality. Some more complex

patterns can also be seen from correlation graphs. For example, in figure 4b,

there are many horizontal or vertical lines, indicating some blocks are

correlated to many other blocks. Because this is a database I/O trace, these hot

blocks with many correlations are likely to be the root of trees or subtrees. In

the next subsection, we visualize block correlations specifically for tree

structures.

In order to demonstrate the capability of C-Miner to discover semantics

in a tree structure, we use a synthetic trace that simulates a client that

searches data in a B-tree data structure, which is commonly used in databases.

The B-tree maintains the indices for 5000 data items, each block has space for

four search-key values and five pointers. We perform 1000 searches. To simulate

a real-world situation where some ``hot'' data items are searched more

frequently than others, searches are not uniformly distributed. Instead, we use

a Zipf distribution and 80% of searches are to 100 ``hot'' data items. The block

correlations mined from the B-tree trace are visualized in figures 5.

Note here constructing this tree does not take any semantic information from the

application (the synthetic trace generator). The edges between nodes are

reconstructed purely based on block correlations. Due to the space limitation,

we only show part of the correlations. Each rule

are around the diagonal line, as shown on the Cello-96 traces (Figure 4a).

The graph for the TPC-C trace does not have such apparent characteristic,

indicating TPC-C does not have strong spatial locality. Some more complex

patterns can also be seen from correlation graphs. For example, in figure 4b,

there are many horizontal or vertical lines, indicating some blocks are

correlated to many other blocks. Because this is a database I/O trace, these hot

blocks with many correlations are likely to be the root of trees or subtrees. In

the next subsection, we visualize block correlations specifically for tree

structures.

In order to demonstrate the capability of C-Miner to discover semantics

in a tree structure, we use a synthetic trace that simulates a client that

searches data in a B-tree data structure, which is commonly used in databases.

The B-tree maintains the indices for 5000 data items, each block has space for

four search-key values and five pointers. We perform 1000 searches. To simulate

a real-world situation where some ``hot'' data items are searched more

frequently than others, searches are not uniformly distributed. Instead, we use

a Zipf distribution and 80% of searches are to 100 ``hot'' data items. The block

correlations mined from the B-tree trace are visualized in figures 5.

Note here constructing this tree does not take any semantic information from the

application (the synthetic trace generator). The edges between nodes are

reconstructed purely based on block correlations. Due to the space limitation,

we only show part of the correlations. Each rule

is denoted as a directed edge with support as its weight. The

figure illustrates that the block correlations implicate a tree-like structure.

Also note that our approach to obtain block correlations is fully transparent

without any assumption on storage front-ends.

is denoted as a directed edge with support as its weight. The

figure illustrates that the block correlations implicate a tree-like structure.

Also note that our approach to obtain block correlations is fully transparent

without any assumption on storage front-ends.

Stability of Block Correlations

In order to show that block correlations are relatively stable, we use the

correlation rules mined from the first 3 days of the Cello-92 trace. Our

simulator applies these rules to the next 4 days' trace without updating any

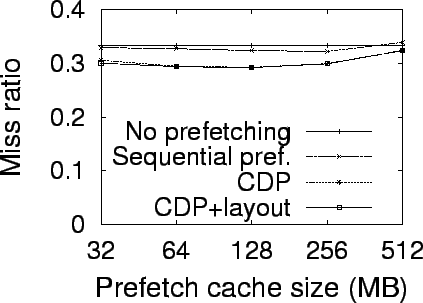

rules. Figure 6 shows the miss ratio for

the next 4 days' trace using correlated-directed prefetching (CDP). The

miss ratios in the figure are calculated by aggregating every 10000 read

operations. This figure shows that CDP is always better than the base case. This

implies that correlations mined from the first 3 days are still effective for

the next 4 days. In other words, block correlations are relatively stable for a

relative long period of time. Therefore, there is no need to run C-Miner

continuously in the background to update block correlations. This also shows

that, as long as the mining algorithm is reasonably efficient, the mining

overhead is not a big issue.

Figure 6: Miss ratio for Cello-92

(64MB; 4MB)

|

Data Mining Overhead

Table 1 shows the running time and space

overheads for mining different traces. C-Miner is running on an Intel

Xeon 2.4GHz machine and Windows 2000 Server. The time and space overhead does

not depend on the confidence of rules as we discussed in section 3.2

but the number of rules does. The results show that C-Miner can

effectively and practically discover block correlations for different workloads.

For example, it takes less than 1 hour to discover half a million association