Check engine lights, tire pressure warning signals, and low fuel indicators. These and many other symbols adorn the dashboards of our vehicles. But what do they all have in common? Besides the obvious, the loud colors and the bright flashes, each of these symbols is doing its best to tell us something is wrong before it becomes a problem that affects our driving. Our check engine light tells us to quite literally check our engine now so that we do not suddenly encounter a problem while on the road. What can we take away, then, from the design of the automobile? Understanding the presence of issues before they manifest themselves as physical problems is one of the best ways to ensure we do not end up in a dire situation. Preventative thought is key.

Traditionally, security and privacy have been addressed at the very end of the Software Development Lifecycle. However, recent updates to best practice suggest that engineers should integrate these principles into design and development, resolving issues before hackers have the chance to discover them [1].

With the effort to prevent security and privacy issues, though, comes the question: How can risks be detected before they have occurred? Threat modeling serves as a promising answer. Threat modeling attempts to evaluate a system’s architecture and data flows and report on the presence of threats which hackers might exploit [2].

This is an extremely beneficial process, but it comes at a cost: time. Conducting a thorough threat model can take hours, if not an entire working day. Additionally, the number of people competent to conduct a threat model may be limited. Together, these factors make it difficult to roll out threat modeling at scale.

Certain tooling, though, might help alleviate this issue. Several tools are being developed to automate the threat modeling process [3, 4, 5, 6, 7, 8]. Because of the range of tools from which to choose, it can be difficult to know which one is best for a given threat modeling venture. We strive to minimize that difficulty by providing a review of prominent tools, with a focus on their functionality and the user experience they afford.

The remainder of this paper is organized as follows: Section 2 provides background on what threat modeling is, on various threat modeling frameworks, and on how threat modeling is typically conducted. Section 3 describes the methodology used to select the tools to be evaluated, the criteria used to evaluate them, and how those criteria were applied. Section 4 describes the results of applying the evaluation criteria and discusses points of interest in these results. Finally, Section 5 wraps up our work and highlights potentially helpful future endeavors.

To better understand why various threat modeling tools might be used, it is important to first understand what threat modeling is. Wenjun Xiong and Robert Lagerström conducted a literature review in which they attempted to establish a definition [2]. This attempt, however, led them to multiple definitions. According to their survey of several articles, “Uzunov and Fernandez (2014)… [state that] ‘threat modeling is a process that can be used to analyze potential attacks or threats, and can also be supported by threat libraries or attack taxonomies,’ [9]” while Punam Bedi et al. assert that threat modeling “‘provides a structured way to secure software design, which involves understanding an adversary’s goal in attacking a system based on system’s assets of interest.’ [10]” These are just two of many interpretations, but several commonalities run through them. Namely, threat modeling is (1) an attempt to anticipate the points at which a system can be exploited, (2) supported by some form of systematic process, and (3) intended to highlight the areas that need attention to prevent damage.

While a “systematic” nature is common to many practical implementations of threat modeling, the structure of the process may be supplied by one of many different frameworks. Some of the most frequently used of these frameworks are as follows:

STRIDE—STRIDE is a threat modeling framework developed at Microsoft and intended for use in highlighting security threats. STRIDE is an acronym for six key security threat categories [11]:

- Spoofing

- Tampering

- Repudiation

- Information Disclosure

- Denial of Service

- Elevation of Privilege
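To make the categories concrete, the sketch below encodes them as a Python enumeration. Pairing each category with the security property it threatens (Spoofing with authentication, Tampering with integrity, and so on) follows the convention used in Microsoft's STRIDE documentation; the class and function names are illustrative and are not taken from any of the tools reviewed here.

```python
from enum import Enum


class Stride(Enum):
    """STRIDE threat categories; each value is the security property the category threatens."""

    SPOOFING = "Authentication"
    TAMPERING = "Integrity"
    REPUDIATION = "Non-repudiation"
    INFORMATION_DISCLOSURE = "Confidentiality"
    DENIAL_OF_SERVICE = "Availability"
    ELEVATION_OF_PRIVILEGE = "Authorization"


def acronym(categories: type[Enum]) -> str:
    """Rebuild a framework's acronym from the first letter of each category name."""
    return "".join(member.name[0] for member in categories)


print(acronym(Stride))                      # STRIDE
print(Stride.ELEVATION_OF_PRIVILEGE.value)  # Authorization
```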

PASTA—PASTA is a threat modeling framework developed at the security consulting company VerSprite and intended for use in highlighting security threats [12]. Rather than providing key threat categories to which the threat modeling team should pay attention, PASTA, which stands for Process for Attack Simulation and Threat Analysis, enumerates seven stages to be followed to identify threats [12]:

- Define Business Context of Application

- Technology Enumeration

- Application Decomposition

- Threat Analysis

- Weakness/Vulnerability Identification

- Attack Simulation

- Residual Risk Analysis

As the content of these frameworks makes evident, threat modeling is traditionally used to detect security threats. However, recent efforts have begun to show how it can be applied to privacy as well. Because privacy threat modeling is less common, fewer frameworks exist for this purpose. However, one framework, structurally similar to STRIDE, currently leads the field.

LINDDUN—LINDDUN is a threat modeling framework developed at KU Leuven and intended for use in highlighting privacy threats [13]. LINDDUN is an acronym for seven key privacy threat categories [14]:

- Linkability

- Identifiability

- Non-repudiation

- Detectability

- Disclosure of Information

- Unawareness

- Non-compliance

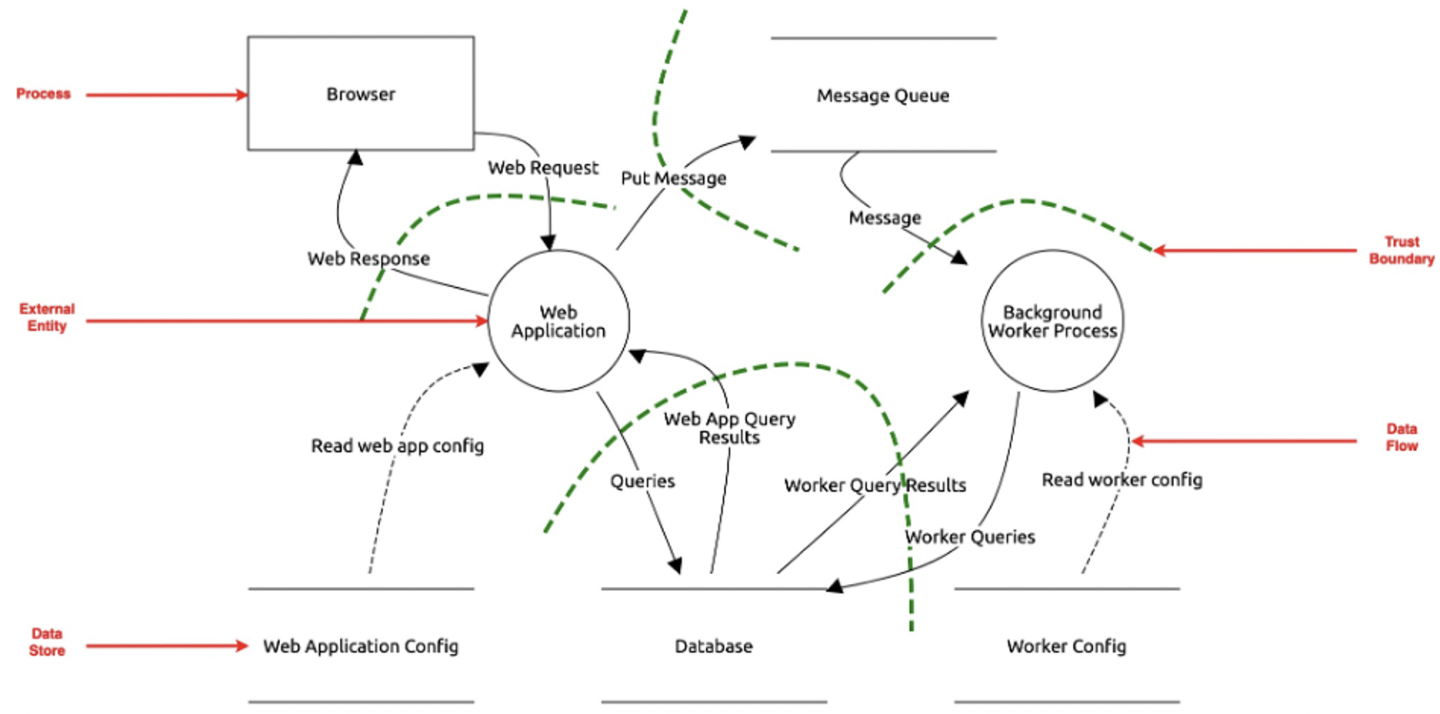

Whether looking to detect security threats, privacy threats, or both, the actual process by which the threat model is conducted tends to look relatively similar. The path toward a threat model typically begins with collection of artifacts describing the system. The key artifact that the system owner’s team needs to provide to the threat modeling team is a Data Flow Diagram (DFD). A DFD is a graphical representation of a system, including all its key architectural components, the data that lives in and moves through the system, the paths between architectural components by which the data moves, and demarcations of zones within the system requiring authentication and/or authorization [15].
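A DFD can also be thought of as a small data structure. The following minimal sketch, assuming that each authentication/authorization zone is represented by a simple string label (a hypothetical simplification), models the element types, the flows between them, and the flows that cross from one zone into another, which are often a natural place to focus questions.

```python
from dataclasses import dataclass, field
from enum import Enum


class ElementType(Enum):
    EXTERNAL_ENTITY = "external entity"
    PROCESS = "process"
    DATA_STORE = "data store"


@dataclass
class Element:
    name: str
    kind: ElementType
    zone: str  # hypothetical label for the authentication/authorization zone


@dataclass
class DataFlow:
    source: Element
    target: Element
    data: list[str]  # the data items that move along this path

    def crosses_boundary(self) -> bool:
        # A flow crosses a trust boundary when its endpoints lie in different zones.
        return self.source.zone != self.target.zone


@dataclass
class DataFlowDiagram:
    elements: list[Element] = field(default_factory=list)
    flows: list[DataFlow] = field(default_factory=list)

    def boundary_crossings(self) -> list[DataFlow]:
        return [flow for flow in self.flows if flow.crosses_boundary()]


browser = Element("Browser", ElementType.EXTERNAL_ENTITY, zone="internet")
api = Element("Web API", ElementType.PROCESS, zone="internal")
users = Element("User DB", ElementType.DATA_STORE, zone="internal")
dfd = DataFlowDiagram(
    elements=[browser, api, users],
    flows=[
        DataFlow(browser, api, ["credentials"]),
        DataFlow(api, users, ["user profile"]),
    ],
)
print([f"{f.source.name} -> {f.target.name}" for f in dfd.boundary_crossings()])
# ['Browser -> Web API']
```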

Once this diagram has been provided, the threat modeling team uses it to prepare questions for the threat model. Then, during the threat model itself, the DFD is used as a guide for the conversation. The system team explains their architectural components and data flows and the ways they fit together, and the threat modeling team asks their prepared questions and any new ones that arise as the DFD is explained. All of this Q&A culminates in the uncovering and documenting of threats, which are to be resolved by the system team going forward.

We conducted our review of threat modeling tools in three main phases: Tool Discovery, Evaluation Criteria Selection, and Application of Evaluation Criteria.

Tool Discovery

Inclusion Criteria

The first step toward evaluating current threat modeling tools was to find out which tools exist. We began by defining inclusion criteria to apply when searching for available tooling. First, each tool must be freely available as open source, and its repository must contain commits from within approximately the past year. In addition, each tool must be applicable to any type of system. Also regarding scope of functionality, each tool must be able to produce some form of threat report as output. Finally, each selected tool must require input only in the form of commonly used programming languages and/or data formats.
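As a sketch of how these inclusion criteria combine, the predicate below requires a candidate to satisfy all of them at once. The `ToolCandidate` fields, the 365-day cutoff standing in for “approximately one year,” and the set of “common” input formats are all assumptions made for illustration; our actual screening was done by hand.

```python
from dataclasses import dataclass
from datetime import date, timedelta

# Hypothetical stand-in for "commonly used programming languages and/or data formats".
COMMON_INPUT_FORMATS = {"python", "go", "javascript", "json", "yaml", "xml"}
# "Approximately one year" is approximated here as 365 days (an assumption).
MAX_COMMIT_AGE = timedelta(days=365)


@dataclass
class ToolCandidate:
    name: str
    open_source: bool
    last_commit: date
    applies_to_any_system: bool
    produces_threat_report: bool
    input_formats: set[str]


def meets_inclusion_criteria(tool: ToolCandidate, today: date) -> bool:
    """Apply every inclusion criterion; a tool must satisfy all of them."""
    recently_maintained = today - tool.last_commit <= MAX_COMMIT_AGE
    common_inputs_only = bool(tool.input_formats) and tool.input_formats <= COMMON_INPUT_FORMATS
    return (
        tool.open_source
        and recently_maintained
        and tool.applies_to_any_system
        and tool.produces_threat_report
        and common_inputs_only
    )


# Placeholder candidates, not real tools.
candidates = [
    ToolCandidate("example-active", True, date(2023, 9, 1), True, True, {"yaml"}),
    ToolCandidate("example-stale", True, date(2021, 3, 1), True, True, {"python"}),
]
today = date(2024, 1, 1)
print([t.name for t in candidates if meets_inclusion_criteria(t, today)])
# ['example-active']
```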

Search Details

As there is no single database that acts as a source of truth for all threat modeling tools, we performed simple Internet searches instead. We conducted two searches with slight keyword variations. One search used the search term “automated threat modeling tools,” while the other used “threat modeling tools.”

To obtain only the most relevant results, and ideally the strongest candidates for evaluation, we manually reviewed the first two pages of search results. We reviewed each result that linked either to a particular tool or to a webpage discussing several tools. Because the search terms were similar, the results overlapped, so we eliminated any duplicates. After exploring each of the remaining links, we recorded the names of any tools whose descriptions appeared to fit the defined inclusion criteria.
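The deduplication step was manual, but if it were scripted it might look like the sketch below, which merges the two result lists and keeps the first occurrence of each link. Treating URLs that differ only in scheme, a leading “www.”, a trailing slash, query string, or fragment as duplicates is an assumption, and the example URLs are placeholders.

```python
from urllib.parse import urlsplit


def normalize(url: str) -> str:
    """Reduce a URL to host + path so trivially different links compare equal."""
    parts = urlsplit(url)
    host = parts.netloc.lower().removeprefix("www.")
    return host + parts.path.rstrip("/")


def merge_results(*searches: list[str]) -> list[str]:
    """Merge result lists from several searches, keeping only the first copy of each link."""
    seen: set[str] = set()
    merged: list[str] = []
    for results in searches:
        for url in results:
            key = normalize(url)
            if key not in seen:
                seen.add(key)
                merged.append(url)
    return merged


automated_search = ["https://github.com/example/tool-a", "https://example.org/tool-review"]
general_search = ["http://www.github.com/example/tool-a/", "https://example.org/another-review"]
print(merge_results(automated_search, general_search))
# ['https://github.com/example/tool-a', 'https://example.org/tool-review', 'https://example.org/another-review']
```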

Evaluation Criteria Selection

Just as we needed criteria for including tools in our evaluation set, we also needed criteria for performing the evaluation itself. In general, we kept two key principles, among others, in mind. Our inclusion criteria ensured that each tool could be applied to any type of application; similarly, our evaluation criteria valued the principle of versatility. We also valued ease of use, that is, a minimal learning curve. With these principles to guide us, we developed the following evaluation criteria.

Complexity of Logic

This criterion answers the question: “What level of complexity of logic for detecting the presence of a specific threat does this tool support?” Some methodologies for programmatically detecting the presence of a threat are simplistic, assigning threats to elements in a system based simply on the DFD type of the element, whereas others are more complex, checking various attributes of each element. With this criterion, we strive to understand whether the programmatic implementation that each tool leverages is more rudimentary or intricate.

The level of complexity of each tool’s logic is important because it determines how much the tool actually reduces the team’s workload. A tool which implements highly simplistic logic would give the team a starting point for a threat model but would likely not inspire confidence that the reported threats are accurate. Any doubt about this accuracy would necessitate discussion, eating away at the team’s valuable time. In other words, the more complex the logic a tool supports, the more likely it is to serve as a solid starting point for a threat model and, in turn, the more likely it is to save the team time.
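The difference between simplistic and more complex logic can be illustrated with two hypothetical rules for the same information-disclosure threat. The first flags every data store purely by its DFD type; the second also inspects attributes of the element. The attribute names (`sensitivity`, `encryption`) are invented for this sketch and do not correspond to any particular tool's schema.

```python
from dataclasses import dataclass, field


@dataclass
class Component:
    name: str
    dfd_type: str  # e.g. "process", "data store", "external entity"
    attributes: dict[str, str] = field(default_factory=dict)


def type_based_rule(c: Component) -> bool:
    # Simplistic: every data store is flagged for information disclosure.
    return c.dfd_type == "data store"


def attribute_based_rule(c: Component) -> bool:
    # More complex: flag a data store only if it holds highly sensitive data
    # and no encryption at rest is recorded for it.
    return (
        c.dfd_type == "data store"
        and c.attributes.get("sensitivity") == "high"
        and c.attributes.get("encryption", "none") == "none"
    )


stores = [
    Component("audit-log", "data store", {"sensitivity": "low"}),
    Component("customer-db", "data store", {"sensitivity": "high", "encryption": "aes-256"}),
    Component("legacy-db", "data store", {"sensitivity": "high"}),
]
print([c.name for c in stores if type_based_rule(c)])       # ['audit-log', 'customer-db', 'legacy-db']
print([c.name for c in stores if attribute_based_rule(c)])  # ['legacy-db']
```

The type-based rule reports three threats that the team would then have to triage in discussion; the attribute-based rule reports only the one that the recorded attributes actually support.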

Amenability to Custom Threats

This criterion answers the question: “Does this tool support the introduction of custom threats?” Every tool natively provides support for the detection of some number of threats. The type of threats and what specific threats are natively checked for may be dictated by a particular framework or some other means. Either way, native support only goes so far, and there will be a limit to the range of threats available.

In devising a library of threats against which to analyze systems, an organization is likely to draw threats from disparate sources. Additionally, some threats are specific to certain types of applications (e.g., ML-specific threats, cloud-specific threats). In short, any organization employing a threat modeling tool is likely to have its own bespoke threat library. Because the contents of these libraries will vary, any viable tool should support the introduction of custom threats.
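One way a tool can support custom threats is a registry in which each threat pairs an identifier with a predicate over system components, so that native and organization-specific threats are checked in the same pass. The sketch below assumes that design; `ThreatLibrary`, the threat IDs, and the attribute names are hypothetical and are not drawn from any reviewed tool.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Component:
    name: str
    attributes: dict[str, str] = field(default_factory=dict)


@dataclass
class Threat:
    threat_id: str
    title: str
    applies_to: Callable[[Component], bool]  # detection logic for this threat


class ThreatLibrary:
    def __init__(self) -> None:
        self._threats: dict[str, Threat] = {}

    def register(self, threat: Threat) -> None:
        if threat.threat_id in self._threats:
            raise ValueError(f"duplicate threat id: {threat.threat_id}")
        self._threats[threat.threat_id] = threat

    def analyze(self, components: list[Component]) -> list[tuple[str, str]]:
        """Return a (component name, threat id) pair for every match."""
        return [
            (c.name, t.threat_id)
            for c in components
            for t in self._threats.values()
            if t.applies_to(c)
        ]


library = ThreatLibrary()
# A "native" threat and an organization-specific ML threat (both hypothetical).
library.register(Threat("NATIVE-001", "Unauthenticated endpoint",
                        lambda c: c.attributes.get("auth") == "none"))
library.register(Threat("ORG-ML-001", "Training data poisoning",
                        lambda c: c.attributes.get("role") == "training-pipeline"))

components = [
    Component("public-api", {"auth": "none"}),
    Component("trainer", {"role": "training-pipeline", "auth": "mtls"}),
]
print(library.analyze(components))
# [('public-api', 'NATIVE-001'), ('trainer', 'ORG-ML-001')]
```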

Operational Usability

This criterion answers the question: “How difficult is it to make this tool a part of the workflows of the existing threat modeling stakeholders?” Stakeholders range from threat model architects to system owners to legal personnel. A tool will require input from some of these stakeholders and will provide output to others. Therefore, whatever the interaction between a given class of stakeholders and the tool, it is important to understand how much friction introducing the tool will generate.

The reasoning behind the inclusion of this criterion once again brings us back to the concept of time. Difficulty in interacting with the tool means more time spent learning what to do and less time leveraging the tool to perform threat models. If the time spent learning the tool outweighs the time saved by it, the whole purpose of automating threat models is defeated.
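The trade-off can be stated as a simple break-even condition. Let $L$ be the one-time cost of learning a tool, $s > 0$ the time the tool saves on each threat model, and $n$ the number of threat models the team performs with it (illustrative symbols, not measured quantities). Adopting the tool saves time overall only when

$$
n\,s - L > 0 \quad\Longleftrightarrow\quad n > \frac{L}{s}.
$$

For instance, with hypothetical values of $L = 16$ hours and $s = 2$ hours, the tool only breaks even after $n = 8$ threat models and yields a net saving from the ninth onward. A steeper learning curve raises $L$ and pushes that point further out.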

Security Functionality

This criterion answers the question: “How does this tool detect and categorize security threats?” As discussed earlier, different threat modeling tools provide different native functionality regarding their scope of threats included. Some are based on a specific security framework, while others may focus on individual technical risks, and still others may be based on both. Even within any one of these three categories, there are countless possible implementation variations. With this criterion, we strive to understand how a particular tool classifies security threats.

Different organizations follow different practices for threat modeling. One organization might not care about risk classification by category, while, on the more rigid side of things, another might require strict adherence to STRIDE. A viable tool, therefore, should ideally support both scenarios, enabling threat classification in compliance with organizational guidelines, no matter what they are.
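A sketch of how a tool might accommodate both kinds of organization: findings carry an optional STRIDE category, and the report is either a flat list or, in a strict mode, grouped by category with unclassified findings rejected. The `Finding` type and the `strict_stride` switch are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Optional

STRIDE_CATEGORIES = {
    "Spoofing", "Tampering", "Repudiation",
    "Information Disclosure", "Denial of Service", "Elevation of Privilege",
}


@dataclass
class Finding:
    component: str
    title: str
    category: Optional[str] = None  # a STRIDE category, or None if left unclassified


def render_report(findings: list[Finding], strict_stride: bool) -> str:
    """Render findings as a flat list, or grouped by STRIDE category in strict mode."""
    if not strict_stride:
        return "\n".join(f"- {f.component}: {f.title}" for f in findings)
    groups: dict[str, list[Finding]] = defaultdict(list)
    for f in findings:
        if f.category not in STRIDE_CATEGORIES:
            # Strict organizations reject findings that are not STRIDE-classified.
            raise ValueError(f"{f.title!r} has no valid STRIDE category")
        groups[f.category].append(f)
    lines: list[str] = []
    for category in sorted(groups):
        lines.append(f"{category}:")
        lines.extend(f"  - {f.component}: {f.title}" for f in groups[category])
    return "\n".join(lines)


findings = [
    Finding("web-api", "Missing input validation", "Tampering"),
    Finding("user-db", "Unencrypted backups", "Information Disclosure"),
]
print(render_report(findings, strict_stride=False))
print(render_report(findings, strict_stride=True))
```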

Extensibility for Privacy

This criterion answers the question: “Does this tool support privacy threat modeling natively, and, if not, can it feasibly be modified to do so?” As discussed earlier, threat modeling is traditionally used only for the purpose of detecting security threats. As such, most automated threat modeling tools are focused on security and cannot be assumed to facilitate discovery of privacy threats.

Assessing a tool’s Extensibility for Privacy is key because of the increasing focus on privacy during system development. More and more privacy regulations are being passed, driving a growing need for compliance work which, when performed entirely by humans, requires significant time and effort. Hence, automating at least some of this effort is critical in enabling organizations to keep up with privacy law.
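As an illustration of what such an extension could involve in a tool with attribute-based rules, the sketch below adds the LINDDUN categories as a second enumeration and checks data flows against a few privacy conditions. The personal-data tags, the flow attributes, and the mapping from each condition to a LINDDUN category are simplified assumptions, not a complete or authoritative LINDDUN analysis.

```python
from dataclasses import dataclass, field
from enum import Enum


class Linddun(Enum):
    LINKABILITY = "Linkability"
    IDENTIFIABILITY = "Identifiability"
    NON_REPUDIATION = "Non-repudiation"
    DETECTABILITY = "Detectability"
    DISCLOSURE_OF_INFORMATION = "Disclosure of Information"
    UNAWARENESS = "Unawareness"
    NON_COMPLIANCE = "Non-compliance"


@dataclass
class Flow:
    name: str
    data: set[str]
    attributes: dict[str, str] = field(default_factory=dict)


# Hypothetical tags marking which data items count as personal data.
PERSONAL_DATA = {"email", "name", "ip_address", "device_id"}
PERSISTENT_IDENTIFIERS = {"ip_address", "device_id"}


def privacy_findings(flow: Flow) -> list[Linddun]:
    """Return illustrative LINDDUN findings for one data flow."""
    if not flow.data & PERSONAL_DATA:
        return []  # no personal data, so no privacy findings in this sketch
    findings: list[Linddun] = []
    if flow.data & PERSISTENT_IDENTIFIERS:
        # Persistent identifiers allow separate records to be linked together.
        findings.append(Linddun.LINKABILITY)
    if flow.attributes.get("encrypted") != "yes":
        findings.append(Linddun.DISCLOSURE_OF_INFORMATION)
    if flow.attributes.get("consent_recorded") != "yes":
        findings.append(Linddun.NON_COMPLIANCE)
    return findings


analytics = Flow("app -> analytics", {"device_id", "page_view"}, {"encrypted": "yes"})
print([f.value for f in privacy_findings(analytics)])
# ['Linkability', 'Non-compliance']
```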

Application of Evaluation Criteria

With the Evaluation Criteria defined, we took to assessing each tool, keeping the criteria selected in mind. We explored the tools through their documentation, through interacting with their source code, and/or through performing a simulated threat model on a simple dummy system using the tool. For each tool, we took detailed notes on where the tool sat within each criterion to be examined. Our findings from this process are detailed in the following section.

Applying the Inclusion Criteria to the results of the two Internet searches yielded six threat modeling tools to analyze: Computer Aided Integration of Requirements and Information Security (CAIRIS), Threats Manager Studio, Threatspec, PyTM, Threat Dragon, and Threagile [3, 4, 5, 6, 7, 8]. Based on the evaluation criteria described, we created the following Pugh Matrix to represent our findings.

While there is detail to report on each of these tools, we discuss here only the tools with the lowest and highest quality scores in an attempt to highlight the characteristics of an ideal threat modeling tool and the characteristics of a tool that falls short.
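Assuming each tool's total quality score is the unweighted sum of its per-criterion ratings, the arithmetic behind a Pugh Matrix can be sketched as follows. The sketch also assumes ratings of -1, 0, or +1 per criterion; the tools and ratings shown are hypothetical and are not the values from our matrix.

```python
CRITERIA = (
    "Complexity of Logic",
    "Amenability to Custom Threats",
    "Operational Usability",
    "Security Functionality",
    "Extensibility for Privacy",
)


def pugh_total(ratings: dict[str, int]) -> int:
    """Unweighted Pugh total: the sum of -1/0/+1 ratings across all criteria."""
    unknown = set(ratings) - set(CRITERIA)
    if unknown:
        raise KeyError(f"unknown criteria: {sorted(unknown)}")
    total = 0
    for criterion in CRITERIA:
        score = ratings.get(criterion, 0)  # an unrated criterion counts as neutral
        if score not in (-1, 0, 1):
            raise ValueError(f"{criterion}: rating must be -1, 0 or +1, got {score}")
        total += score
    return total


# Hypothetical tools and ratings for illustration only (not our actual matrix).
matrix = {
    "Tool A": {"Complexity of Logic": 1, "Amenability to Custom Threats": 1,
               "Operational Usability": -1, "Security Functionality": 1,
               "Extensibility for Privacy": 0},
    "Tool B": {"Complexity of Logic": -1, "Amenability to Custom Threats": 0,
               "Operational Usability": -1, "Security Functionality": 0,
               "Extensibility for Privacy": -1},
}
ranking = sorted(((pugh_total(r), tool) for tool, r in matrix.items()), reverse=True)
print(ranking)  # [(2, 'Tool A'), (-3, 'Tool B')]
```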

CAIRIS

CAIRIS scored lowest, with a total quality score of -4. CAIRIS is a tool intended to facilitate the threat modeling process by enabling users to generate several different types of system diagrams, create attacker personas, assess mitigation tactics, and more [16]. It is accessible via the Internet for demo purposes and, should users decide to proceed after trialing it, it may be cloned and used for free under the Apache License 2.0. To support system assessment, users create diagrams in this tool by navigating through several dropdown menus and entering the relevant information.

While CAIRIS fell short in a few areas, its Operational Usability struck us as the most problematic. CAIRIS presents an overwhelming number of features in a UI that feels a few steps away from being fully realized. The sense of too many features stems from the seven options in the menu bar at the top, six of which open a dropdown of several further options when selected, and several of these functions have no explicit relation to performing a threat model. The sense that the UI is not fully realized stems from its non-intuitive layout. For instance, creation of a DFD does not take place on one screen: creating DFD elements and viewing the actual DFD require navigating to two different menu/sub-menu combinations. In short, CAIRIS’s functionality, while potentially quite useful, is difficult to leverage from a user perspective.

The opaque nature of CAIRIS, in turn, makes it difficult to add custom threats or determine how a privacy framework might be integrated into the tool, resulting in negative scores in each of these categories as well. Coupled with the absence of support for any security framework threat classification, this makes CAIRIS appear to be the least ideal tool of those analyzed for automating the threat modeling process.

Threagile

Threagile scored highest, with a total quality score of 4. Threagile is a tool intended to facilitate the threat modeling process by asking users to describe their system and its data flows in a YAML file, analyzing the YAML, and providing a report on the individual technical risks found in the system, each associated with specific components. Threagile is accessible as a command-line application that runs in a Docker container against a file living on the user’s local file system. Users pass in the location of the file on the system, as well as any optional flags/switches desired to configure the tool, and the output files are generated in the same location as the input, providing feedback on the system described.

Threagile received a positive score in several evaluation criteria categories, but its true highlight was its performance with regard to Complexity of Logic and Amenability to Custom Threats. Threagile requests user input that describes the technical components of a system in more detail than a traditional DFD, collecting information such as the technology type of each component, the kind of encryption a component leverages, the protocol leveraged by each data flow, etc. Because it requests such detailed information during the intake process, unlike other tools which request only the information necessary to construct a traditional DFD, Threagile can make decisions about the presence or absence of a threat with finer granularity than most other tools.

In part because of the complex logic that Threagile supports, it is also adept at incorporating custom threats. Threagile’s native model can be modified to accept new attributes for the elements of a system, supporting checks for user-introduced threats. The logic that checks for each threat is written in Go, so as long as someone on the threat modeling team has a working knowledge of the language, they are free to introduce as many new threats as a particular use case requires. These strengths in Amenability to Custom Threats and Complexity of Logic, along with its other favorable characteristics, make Threagile a solid choice for automating the threat modeling process.

That said, Threagile’s Operational Usability is not its strongest point. Users may find entering input as a YAML file cumbersome compared to the drag-and-drop input mechanisms afforded by other tools. Without a drag-and-drop interface, Threagile is accessible to fewer individuals: those unfamiliar with YAML cannot take advantage of the tool unless they are willing to learn a new data format in addition to a new tool. Despite this drawback, Threagile performs well on all other criteria, making it a reasonable choice for users willing to overcome this hurdle.

Threat modeling is becoming an increasingly important process. Because it is expensive, though, automating it is an appealing option. Selecting the right tool for automation can be difficult, but we have attempted here to provide an assessment of some of the tooling available for this purpose.

An ideal automated threat modeling tool should support complex logic for threat detection, enable addition of custom threats, be easily understood by the user and easy to integrate into one’s daily workflow, and support functionality for standard security threat classification, as well as provide the option for privacy threat detection. Of the tools analyzed, Threagile appears to best fit these characteristics.

To understand whether the quality scores we assigned here hold up, it would be beneficial to apply each tool to a real-world system on which a traditional threat model has already been performed. The threats detected by each tool should then be compared with those detected in the traditional threat model to verify the tool’s quality from a functional perspective. In addition, the actual users of the different tools should rate them on the same five criteria. Because each of these ratings is subjective, several users should test each tool on the selected system so that their ratings can be averaged, giving a more reliable measure of criteria such as Operational Usability. Such work would help to validate the findings reported here.
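The proposed comparison could be quantified with standard measures. The sketch below computes precision (the share of tool-reported threats that the traditional threat model also found) and recall (the share of traditionally found threats that the tool also reports), then summarizes several users' ratings. It assumes threats from both sources have already been mapped to shared identifiers; the IDs and the 1 to 5 rating scale are hypothetical.

```python
from statistics import mean, stdev


def precision_recall(tool_threats: set[str], reference_threats: set[str]) -> tuple[float, float]:
    """Compare a tool's threats with those from a traditional, human-led threat model."""
    matched = len(tool_threats & reference_threats)
    precision = matched / len(tool_threats) if tool_threats else 0.0
    recall = matched / len(reference_threats) if reference_threats else 0.0
    return precision, recall


# Hypothetical threat IDs, assuming both sources were mapped to shared identifiers.
reference = {"T1", "T2", "T3", "T4"}
reported_by_tool = {"T1", "T2", "T5"}
precision, recall = precision_recall(reported_by_tool, reference)
print(f"precision={precision:.2f} recall={recall:.2f}")  # precision=0.67 recall=0.50

# Hypothetical 1-5 Operational Usability ratings from four users of the same tool;
# the spread (standard deviation) indicates how much the users disagree.
usability_ratings = [3, 4, 2, 3]
print(f"mean={mean(usability_ratings):.2f} stdev={stdev(usability_ratings):.2f}")
# mean=3.00 stdev=0.82
```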

The opinions expressed in this paper are those of the authors themselves and do not reflect those of their employer. Any errors in the paper are the authors’ own.

The authors are supported by employment at their individual institutions. No separate funding was made available for this research.

Conceptualization: VG, KT

Methodology: VG, KT

Investigation: KT

Writing – original draft: KT

Writing – review & editing: VG, KT

Authors declare that they have no competing interests.

All data are available in the main text.