Today’s cluster managers, like Kubernetes, keep the beating heart of datacenters running. These managers are architected as a collection of loosely-coupled controllers and follow the state-reconciliation principle: each controller’s job is to monitor the actual cluster state and its input desired state, then modify the cluster state until it is reconciled with the desired state; a controller builds on top of underlying controllers by modifying their input desired states.

The Kubernetes ecosystem has thousands of such controllers available. Each Kubernetes deployment comprises an appropriate subset of controllers, a flexible modularity enabled by the loosely-coupled architecture.

Cluster management is challenging because of so many independently-authored controllers interacting asynchronously and concurrently. Even more challenging is that failures may interrupt a controller’s operations. In principle, each controller should wake up from failure, observe that the current state does not match the desired state, and proceed to reconcile. In practice, a very common bug pattern is for a controller’s correct operation to depend on its internal state (stack frame or program counter); if a failure occurs, that state is lost, and the controller logic is unable to recognize that it has left its task incomplete.

This kind of failure is called a “liveness” bug: the controller does not do anything immediately apparent as wrong (which would be a safety violation), but it never gets around to doing the expected right thing. We studied controller failures and found that this form of failure is dominant in the controller ecosystem. Recent testing techniques [3, 10] can reveal such bugs using automatically generated tests and fault injections, but testing cannot confirm a controller’s correctness.

Our goal is to confirm statically, at compile time, that a controller always gets around to doing the right thing, even in the presence of asynchrony, concurrency and failures. To this end, we present Anvil, a framework for developing controllers and proving their correctness. We have used Anvil to implement and verify three practical Kubernetes controllers that can readily be deployed on real-world Kubernetes platforms. Anvil and the verified controllers are available at https://github.com/vmware-research/verifiable-controllers.

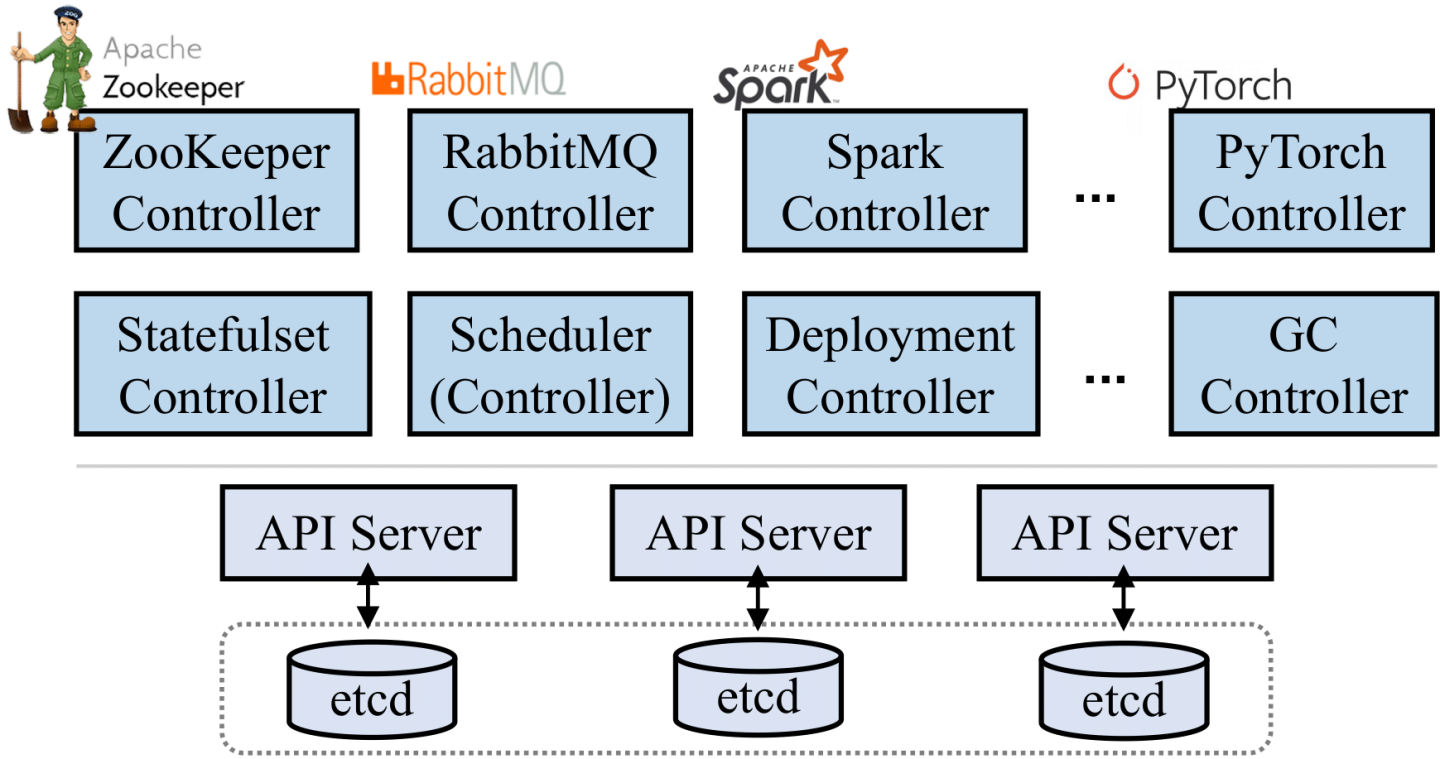

Modern cluster managers like Kubernetes follow the state-reconciliation pattern, where controllers repeatedly attempt to reconcile between the current cluster state and some desired state [2]. We use Kubernetes as a representative example to present the basics of state reconciliation. In Kubernetes, the cluster state is represented as data objects, and all cluster management logic is implemented in a fleet of controllers, running as microservices. Figure 1 illustrates Kubernetes’ architecture. Kubernetes’ core comprises an ensemble of API servers and a highly available, strongly consistent data store (e.g., etcd) that houses the cluster state. The cluster state is represented by a collection of objects. Every entity in the cluster has a corresponding object in the cluster state, including pods, volumes, nodes, and groups of applications. All controllers in Kubernetes interact with the cluster state via API servers.

reconcile() function, which is invoked whenever the desired state description (or its relevant cluster states) is changed.This design enables Kubernetes to be highly extensible: supporting a new application or feature is a matter of adding a new controller and a corresponding custom object type to describe the desired state; it does not require changes to API servers or existing state objects. Kubernetes’ extensibility has led to the emergence of a thriving ecosystem of thousands of custom controllers that have been developed by commercial vendors and open-source communities.

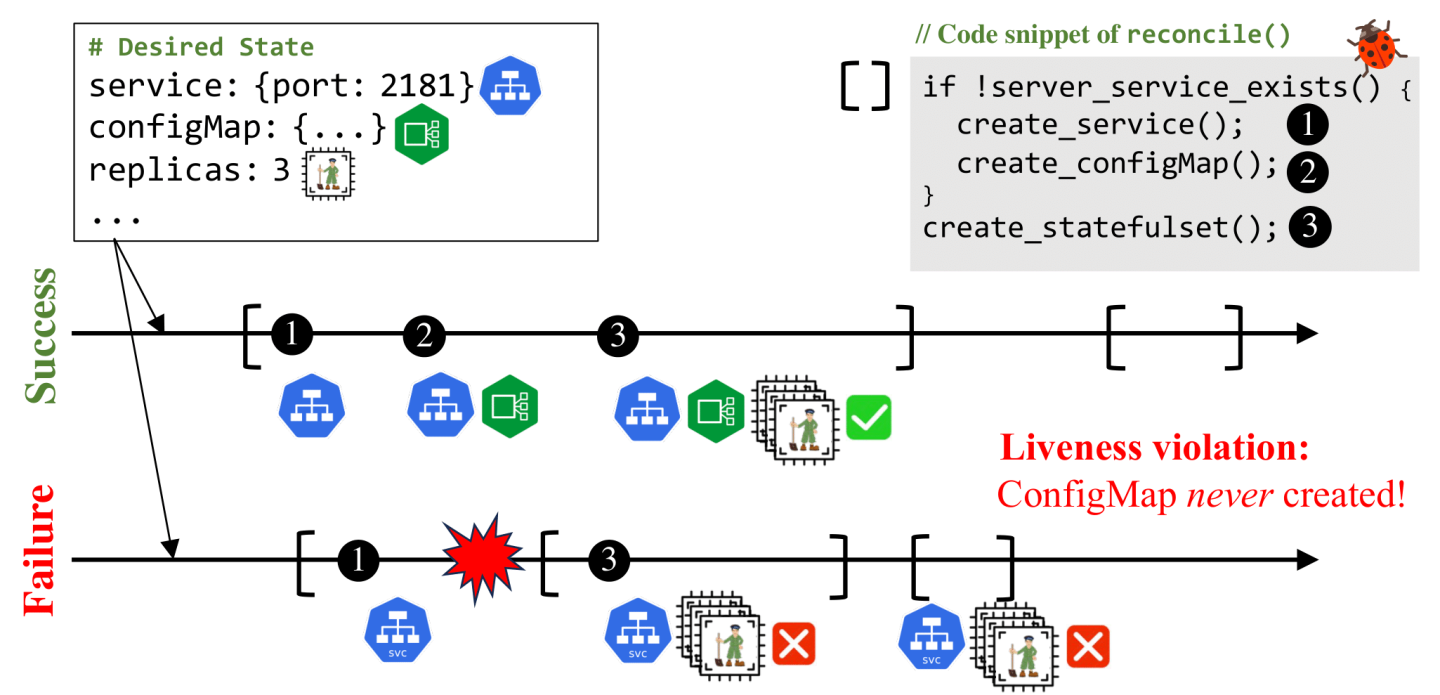

Figure 2 exemplifies the reconciliation process of a Kubernetes controller for managing ZooKeeper. To create a ZooKeeper cluster, the controller takes three steps to create: (1) a networked service (a Kubernetes service object), (2) a ZooKeeper configuration (a config map object), and (3) a stateful application (a stateful set object) with three replicas. Each step is performed by creating a new state object of the corresponding resources via the API server, which then triggers Kubernetes built-in controllers, e.g., the StatefulSet controller will create three sets of pods and volumes to run containerized ZooKeeper nodes. In the end, the cluster state matches the desired state. Later, if the desired state changes (e.g., its number of replicas is increased), the ZooKeeper controller will start a new iteration of reconciliation that updates the stateful set object to scale up the ZooKeeper cluster.

A bug in a controller’s reconciliation can result in the controller never being able to match the desired state, even when reconcile() is called repeatedly—a liveness violation. Controllers are expected to be level-triggered [5]: reconcile() can be called from any current cluster state to match any given desired state, with no guarantee that the controller has seen the entire history of cluster state changes [12]. In addition, controllers run within dynamic and complex environments and thus must tolerate unexpected failures, network interruptions and concurrency and asynchrony issues while running reconcile().

Figure 2 shows one of many bug patterns of controllers [3, 8, 9, 10]. If the controller crashes between steps (1) and (2) during an execution of reconcile(), Kubernetes will reboot the controller. The freshly invoked reconcile() call now faces the intermediate state created by the previous failed execution (step (1)). However, in this case, the controller would never perform step (2) due to a buggy predicate, which only checks whether the networked service exists, but not whether the config map also exists.

The enormity of cluster state space and the complexity of failure events lead to a state-space explosion problem, making it prohibitively expensive (if not impossible) to test a controller’s behavior under all possible scenarios. In particular, liveness violations are notoriously hard to detect because a testing tool, by observing a controller’s external behavior, cannot decide whether the controller will never realize the desired state or is just running slow.

Anvil is a framework that allows developers to confirm statically, at compile time, that a controller performs state reconciliation correctly. Anvil uses formal verification—an approach that confirms the correctness of a program by proving that the program always adheres a correctness specification.

With Anvil, developers implement the controller’s state-reconciliation logic in Rust, write a specification and a proof to show that the controller correctly implements the specification; the proof is mechanically checked to ensure that the proof itself is correct.

Implementing Controllers

In Anvil, developers implement a controller using a state machine to enable formal verification. Figure 3 shows a snippet of the Anvil Controller API specified using a Rust trait: it involves defining the initial state and the transitions of a state machine.

Anvil’s reconcile() uses the state machine: it starts from the initial state and invokes step() iteratively until all steps are done or if any step encounters an error. Each iteration of step() returns the next state in the state machine, together with an external request. The external request is typically a REST call to Kubernetes API servers, but can also be extended to non-Kubernetes APIs. The response to the external request is passed as an argument to the next iteration of step(). Note that the API enforces no more than one external request per step(), making the state-machine transition atomic with respect to cluster-state changes. Anvil’s reconcile() interfaces a trusted Kubernetes client library (kube-rs [1]) which invokes reconcile() upon changes, handles its output, and requeues the next invocation.

Figure 4 shows the step() implementation of a ZooKeeper controller (Figure 2). The state machine starts from the CheckService state, where it returns a request to read the service object from the API server (service_get_req) and the next state to transition to ReconcileService. The reconcile() method fetches the service object by calling the API server, and moves on to the next iteration of step(), bringing the state machine to the ReconcileService branch. The controller proceeds to create or update the service based on the response of service_get_req. In this way, the controller progressively reconciles each cluster-state object and eventually matches the desired state declared by ZKD.

To verify the controller, developers need to first write a correctness specification that they want the controller to implement. However, controllers, unlike some other systems (e.g., consensus systems or storage systems), do not have well-known protocols or properties that formally define their correctness. How do we formally specify controller correctness?

Specifying Controller Correctness

We formalize controller correctness as eventually stable reconciliation (ESR), a specification that captures key properties of state reconciliation. We follow the principle that the specification must be: (1) powerful enough to preclude a broad range of bugs, (2) generally applicable to diverse controllers, and (3) concise enough for manual inspection.

ESR captures two key properties of any controller’s state reconciliation behavior: (1) progress: given a desired state description, the controller must eventually make the cluster state match that desired state (unless the desired state changes), and (2) stability: if the controller successfully brought the cluster to the desired state, it must keep the cluster in that state (unless the desired state changes).

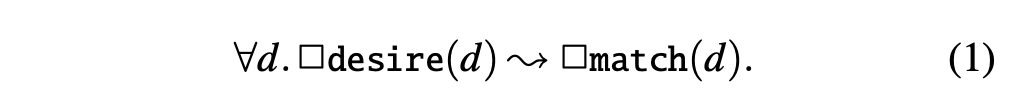

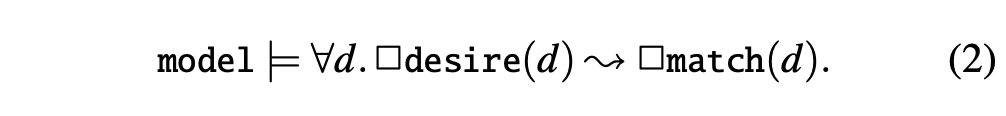

We formalize ESR as a TLA (temporal logic of actions [6]) formula. In TLA, the behavior of the state machine is captured by its set of traces, infinite sequences of system states. The ESR formula should hold for all traces of the controllers’s execution under all possibilities for asynchrony, concurrency, and failures. We use d to denote a state description, desire(d) to denote whether d is the current description of the desired state (the controller’s input), match(d) to denote whether the current cluster state matches the description d. Our definition of ESR is given by the following formula:

Informally, ESR asserts that if at some point the desired state stops changing, then the cluster will eventually reach a state that matches it, and stay that way forever. The universal quantifier ∀ (for-all) is used to assert on all possible state descriptions. For example, ∀d.P(d) holds if and only if P(d) holds for all possible d. The temporal operators ↝ (leads-to) and ☐(always) are used in temporal logics to reason about the future of an execution trace. If predicates P and Q talk about the current state, then ☐P says that P holds in the current state and all future states, while P ↝ Q says that if P holds at some state then Q holds in the same state or some future state. Temporal logics such as TLA also allow nesting of temporal operators; for example, P ↝ ☐Q means that if P holds then eventually we get to a point such that from that point onwards, Q always holds.

The formalization of ESR captures the key correctness properties shared by virtually all controllers: progress and stability. We elaborate on this with a detailed dissection of Equation 1. The innermost conclusion of the formula is ☐match(d) after ↝, which states that eventually the controller matches the desired state (progress), and from then on, it always keeps the cluster state at the desired state (stability). Before ↝, ☐desire(d) is a realistic and necessary premise for the controller to match the desired state—if the desired state description keeps changing forever, the controller will keep chasing a moving target forever, and nothing can be guaranteed as we do not wish to assume a bound on how long state reconciliation takes. Finally, the ∀d states that the controller reconciles all desired state descriptions.

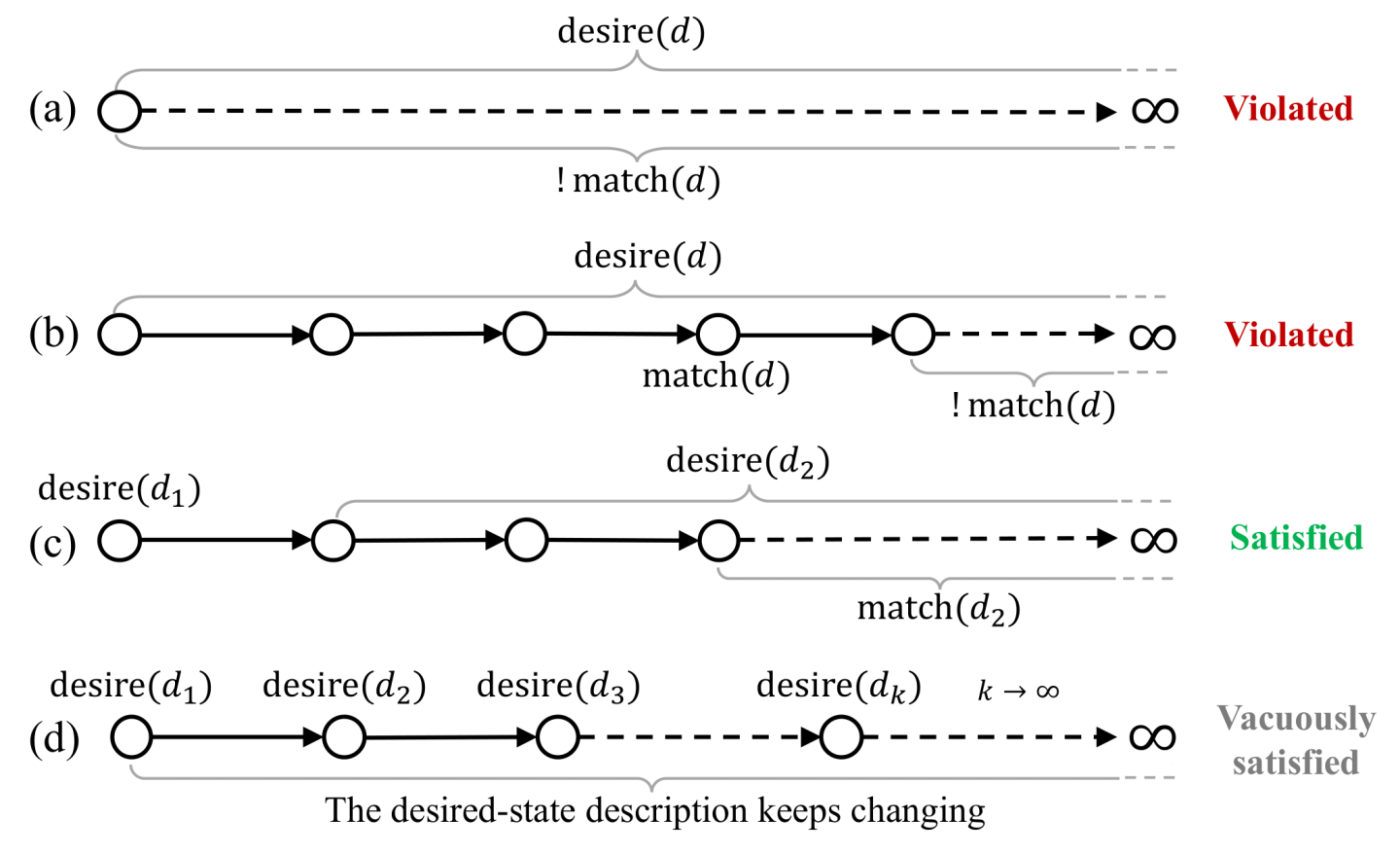

ESR precludes any bug that prevents the controller from eventually making the cluster state match the desired state stably. Figure 5 illustrates the ESR definition in some examples that violate or satisfy ESR: (a) violates progress because the cluster state never matches d, (b) violates stability because the cluster state first matches d but then deviates from d, (c) satisfies ESR because the cluster state eventually matches and always matches d2, and (d) vacuously satisfies ESR because the desired state never stops changing, so ☐desire(d) does not hold for any fixed d.

The verification goal for each controller is to prove that the controller satisfies ESR—all possible executions of the controller satisfy ESR. We use model to describe all possible executions of the controller that runs in an environment with asynchrony, concurrency and faults. Then the statement that the controller satisfies ESR is formalized as:

The ⊨ symbol in this context means that any execution that the controller might produce satisfies ESR.

Strictly speaking, ESR only guarantees one successful state reconciliation—the one that happens after the desired state stops changing forever. However, in practice the controller has no way of knowing if the desired state will change in the future or not. Therefore, we can expect that a controller that satisfies ESR will bring the cluster to match the desired state (and keep it like that) for any desired state that remains unchanged for long enough.

Anvil provides a TLA library that makes writing TLA formulas similar to writing code, inspired by previous work [4]. Figure 6 presents the ESR theorem written using Anvil. The definition of match varies across controllers; e.g., the match(d)for the ZooKeeper controller in Figure 4 checks if the service, config map and stateful set exist in the data store and match the desired state description d. Note that Anvil is not limited to ESR; developers can specify and prove other controller properties using Anvil.

With the controller implementation and specification ready, developers need to write a proof to show that the controller correctly implements the specification. To ensure the correctness of the proof itself, the proof is mechanically checked by the Verus verifier [7].

Proving Controller Correctness

Proving that a controller implements ESR requires reasoning about how the controller makes progress towards the desired state and then keeps the cluster state at the desired state stably.

To reason about the controller’s progress, most of the proof involves demonstrating that if condition P holds then eventually Q holds (i.e., P ↝ Q). For example, if the controller sends a request, then eventually the request is received and handled by the API server. Proving P ↝ Q is typically done by applying the WF1 proof rule [6] (WF stands for “weak fairness”) that informally states that “Action A makes P lead to Q” with the following requirements: (1) running A in a state satisfying P makes Q hold in the next state, (2) P holds until A runs, and (3) if A remains enabled (A can possibly occur), then A eventually occurs; this condition is referred to as weak fairness.

Proving that the controller keeps the cluster state at the desired state stably is done by induction: if the cluster state currently matches the desired state, then no matter what action happens next, the cluster state will still match the desired state.

To reduce the developers’ burden on proof, Anvil provides many proof utilities including a model of the controller environment, a set of reasonable assumptions on the environment (e.g., weak fairness), and reusable lemmas encoding temporal proof rules (e.g., WF1) that can be directly assembled into developers’ ESR proofs. In addition, Anvil comes with a general ESR proof strategy. The proof strategy structures the proof by dividing the proof goal into several more approachable lemmas. We refer readers interested in more details about writing proofs to our OSDI’24 paper [11].

We have used Anvil to build three verified Kubernetes controllers for managing different applications and services (ZooKeeper, RabbitMQ, and FluentBit). For each controller, we use a mature, widely used controller as a reference (either the official Kubernetes controller of the applications or from companies that offer related products).

We aim to implement verified controllers that are feature rich with production quality. For the ZooKeeper and RabbitMQ controllers, we implement key features offered by the reference controllers including scaling, version upgrading, resource allocation, pod placement, and configurations, as well as network and storage management. For the FluentBit controller, we implement all the features offered by the reference controller. All the verified controllers can readily be deployed in real-world Kubernetes platforms and manage their respective applications.

For verification, we spent around two person-months on verifying ESR for the ZooKeeper controller, during which we developed the proof strategy. We took much less time (around two person-weeks) to verify the other two controllers using the same proof strategy and similar invariants. We find Anvil’s ability to formally verify a controller’s implementation invaluable; we discovered four deep bugs via verification. Some of the bugs also exist in the reference controllers but were not detected by testing [3, 10].

Table 1 shows the efforts to implement and verify each controller using Anvil. We also measured the controllers’ state-reconciliation time and the verified controllers achieve competitive performance compared to the unverified references.

| Controller | Trusted (LoC) | Verified Impl (LoC) | Proof (LoC) |

|---|---|---|---|

| ZooKeeper | 950 | 1134 | 8352 |

| RabbitMQ | 548 | 1598 | 7228 |

| FluentBit | 828 | 1208 | 8395 |

We are continuously improving Anvil and building more verified controllers using Anvil. Currently, we are building verified Kubernetes built-in controllers, including ReplicaSet, Deployment and StatefulSet controllers. In future work, we aim to gradually replace existing (unverified) controllers with verified controllers using Anvil, including both custom and built-in ones.

Kubernetes controllers are getting increasingly important but their correctness is challenging. Anvil is a framework for developing Kubernetes controllers and confirming their correctness using formal verification. Our work shows that it is not only feasible but also pragmatic to implement, verify, and maintain practical Kubernetes controllers. We hope that Anvil leads to a practical path towards provably correct cloud infrastructures.

More details are available in the full Anvil paper and Anvil's documentation.