The Domain Name System (DNS) is the glue that holds the Internet together by providing the essential mapping from names to IP addresses. Over time DNS has evolved into a complex and intricate protocol, spread across numerous RFCs. Bugs in DNS implementations can lead to incorrect or implementation-dependent behavior, security vulnerabilities, and more. We introduce the first approach for finding RFC compliance errors in DNS nameserver implementations via automatic test generation. Our SCALE (Small-scope Constraint-driven Automated Logical Execution) approach jointly generates zone files and corresponding queries to cover RFC behaviors specified by an executable model of DNS resolution. We have built a tool called Ferret based on this approach and applied it to test 8 open-source DNS implementations. Ferret identified 30 new unique bugs, of which 20 have already been fixed; one of them is a critical vulnerability in BIND (CVE-2021-25215) that attackers could easily exploit to crash DNS resolvers and nameservers remotely.

The DNS is the Internet's phonebook, allowing users to connect to online services through user-friendly domain names in place of IP addresses. Organizations across the Internet run DNS nameservers, which use DNS configurations called zone files to determine how to handle each query, either returning an IP address, rewriting the query to another query, or delegating the responsibility to another nameserver. There are many popular nameserver implementations of the DNS protocol in the wild, both open-source and in public or private clouds.

With revisions and the addition of new features into the DNS, it has become challenging to maintain an efficient, high-throughput, multithreaded implementation that is also bug-free and compliant with the DNS RFC specifications. As a result, nameserver implementations frequently suffer from incorrect or implementation-specific behavior that causes outages, security vulnerabilities, and more. Therefore it is critical to ensure that the implementations adhere to the RFC specifications. Our goal is to find RFC compliance errors in DNS implementations by automatically generating test cases that cover a wide range of RFC behaviors. The major hindrance and a key technical challenge is that a DNS test case consists of both a query and a zone file, which is a collection of resource records that specify how the nameserver should handle queries. Zone files are highly structured objects with various syntactic and semantic well-formedness requirements, and the query must be related to the zone file for the test even to reach the core query resolution logic.

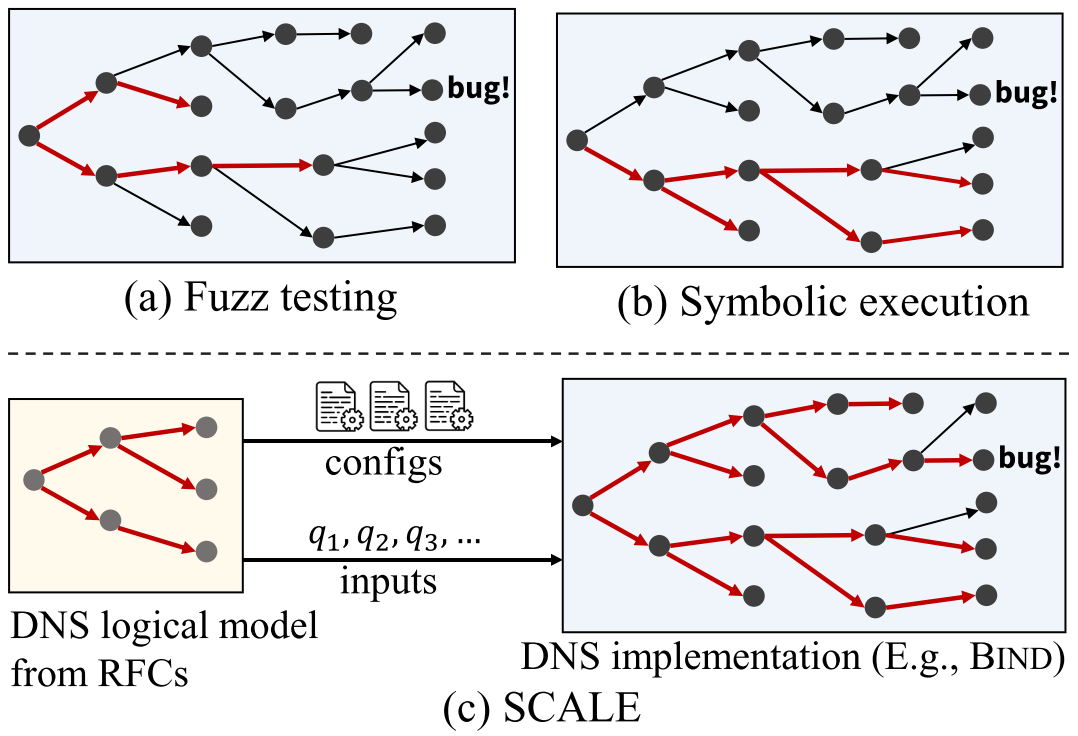

Existing standard automated test generation approaches are not suitable for RFC compliance checking of DNS implementations as shown in Figure 1 (a), (b). Fuzz testing is scalable but is often unable to navigate complex input requirements, which are necessary to generate behavioral tests for DNS. As a result, fuzzers for DNS only generate queries and hence are used only to find parsing errors. Symbolic execution can solve for input conditions but suffers from path explosion and has difficulty with complex data structures and program logic, and will thus only typically explore a small subset of possible program paths. We present SCALE as a way to solve this problem.

SCALE: Automated Test Generation for Protocol Implementations

SCALE (Small-scope Constraint-driven Automated Logical Execution) approach jointly generates zone files and corresponding queries, does so in a way that covers many distinct protocol behaviors, and is applicable to black-box protocol implementations. The key insight behind SCALE is that we can leverage the existence of RFCs to define a logical model of the behaviors of a network protocol and then use this model to guide test generation. Specifically, with SCALE we create an executable version of a protocol’s logical semantics and we employ symbolic execution on that executable version to generate high-coverage tests, which can then be executed on any protocol implementation (see Figure 1 (c)).

Ferret: A Tool Based on SCALE Approach for DNS Nameservers

We employed the SCALE approach to build the tool Ferret (https://github.com/dns-groot/Ferret) for automated testing of DNS nameserver implementations. Ferret generates tests using a logical model of DNS resolution implemented in a modeling language called Zen [1] that has built-in support for symbolic execution. Ferret then performs differential testing by running these tests on multiple DNS nameserver implementations and comparing their results. Differential testing helps to identify implementation-dependent behaviors when RFCs are ambiguous or underspecified.

Ferret generated over 13.5K test cases (https://github.com/dns-groot/FerretDataset), of which 62% resulted in some difference among implementations. Since the number of failed tests is high for inspection, we also developed a hybrid fingerprint for each test, which combines information from the test’s path in the Zen model with the results of differential testing, and then grouped tests by fingerprint. The failed tests roughly form 80 groups, from which we identified 30 unique bugs across all the implementations, including at least two bugs each in popular implementations like Bind, Knot, NSD, and PowerDNS.

We first give a brief overview of DNS before delving deeper.

The DNS provides a mapping from a domain name to various pieces of information, IP addresses being the most common. The domain namespace is a hierarchical tree divided into many zones for easy management. A zone is a collection of records that share a common end domain name. For example, the usenix.org zone has only records ending with usenix.org. Each zone has resource records, which map a domain name to some information, as shown below:

| EXAMPLE RECORD | DESCRIPTION |

|---|---|

exm.org. A 1.2.3.1 | IPv4 record |

*.exm.org. AAAA 1::db8::2:1 | Wildcard IPv6 record |

sub.exm.org. NS ns.dns.com. | Delegation record |

cs.exm.org. DNAME cs.org. | Domain redirection |

www.exm.org. CNAME exm.org. | Canonical name |

Each zone is available from servers called nameservers. When a query comes to a nameserver, it picks a zone file that can answer it and then finds the records closest to the query name. It then creates a response based on the query type and the records selected. If the record picked is an NS record, then it means that the user has to contact another nameserver to obtain the answer. If it is A/AAAA/TXT, then the search terminates. If it is a DNAME or CNAME record, the nameserver rewrites the query partially or entirely and restarts.

We next describe a specific DNS test case that Ferret generated, both to illustrate Ferret’s capabilities and to describe the challenges for automatic test generation in this setting. A test case for DNS nameservers consists of a zone file and a query. The Ferret-generated test shown below crashed the Bind nameserver, the most widely used DNS nameserver implementation in the world.

| Zone file | attack.com. SOA ... |

| Query | 〈host.attack.host.attack.com., DNAME〉 |

In this test case, the DNAME record is applied to rewrite the input query to end with "com." instead of ending with "host.attack.com.". When it performs the rewrite, the nameserver adds the record used for the rewrite, the DNAME record in this case, as well as the new query after rewrite, "〈host.attack.com., DNAME〉", to the response. The new query matches exactly with the DNAME record, and since the record is already present in the response, the nameservers are simply expected to return the response.

All implementations except Bind behaved as expected. Bind did not respond, and the query timed out. Inspecting the logs, we found that the server crashed with an assertion failure due to an attempt to add the same DNAME record to the response twice.

The crash represents a serious security vulnerability. An attacker can use this test case to attack a DNS zone files hosting service that uses Bind by remotely initiating a denial of service attack. The more severe attack using this test case is that the attacker can crash any public Bind based resolver with a bit of effort. A resolver is the DNS software that sends to and receives queries from the DNS nameservers on behalf of a client, using a cache for faster lookups. The attacker first requests the DNAME record from a public recursive resolver running Bind, which fetches the result from the attacker’s authoritative nameserver serving the test zone file. The DNAME record is cached, and then the test query is sent to the resolver. The resolver uses the cached DNAME record and ultimately crashes as described earlier.

The Bind security team called it an “easily-weaponized denial-of-service vector” and asked us to keep it confidential until a controlled release. Bind released a Common Vulnerabilities and Exposure (CVE-2021-25215) with a “high severity” rating and asked developers and users to upgrade to the patched version. The attack affected all maintained Bind versions, which in turn affected Red Hat Enterprise Linux (RHEL), Slackware, Ubuntu, and Infoblox.

This simple-looking test illustrates the challenge in identifying errors in nameservers. The test case has a lot of subtle dependencies that must be met to crash the server.

- First, there should be a

DNAMErecord in the zone file. - Second, the

DNAMErecord target(com)should be a parent of the record domain name(host.attack.com.). - Third, the query type should be

DNAMEonly. - Finally, the query should have at least two repetitions of the

“host. attack”part and end withcom.

Our SCALE approach meets this challenge, as described next.

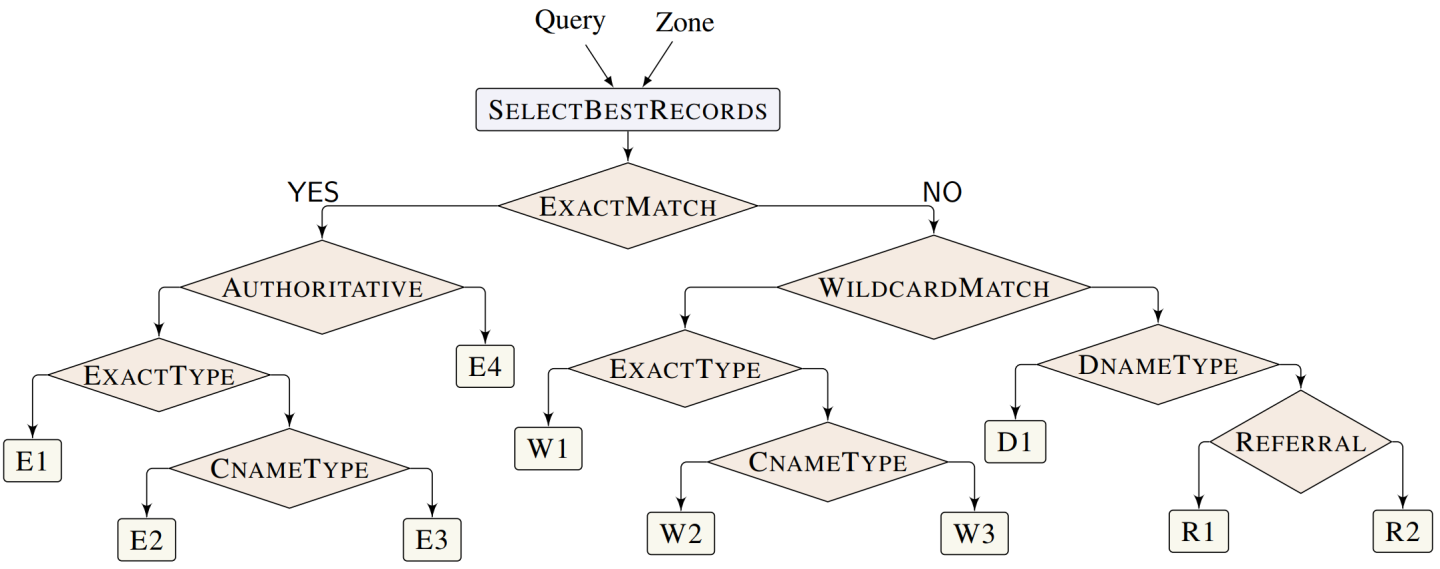

The goal of the SCALE approach is to leverage an executable formal model of a protocol to generate test cases automatically that cover a wide range of behaviors. Our executable semantics for DNS is based on the paper formalism of DNS semantics from our prior work [2] and focuses on query lookup at a single nameserver, which we model as a stateless function that takes a user query and a zone file and produces a DNS response. Figure 2 shows an abstract view of this function.

Given the input query and zone, DNS will first select the closest matching records in the zone for the query using the “SELECTBESTRECORDS” function and then follow the decision logic laid out in Figure 2 using these records. Each leaf node represents a unique case in the DNS. For example, the tree shows four different cases of exact matches, labeled E1 through E4.

Zen Implementation of Formal Semantics

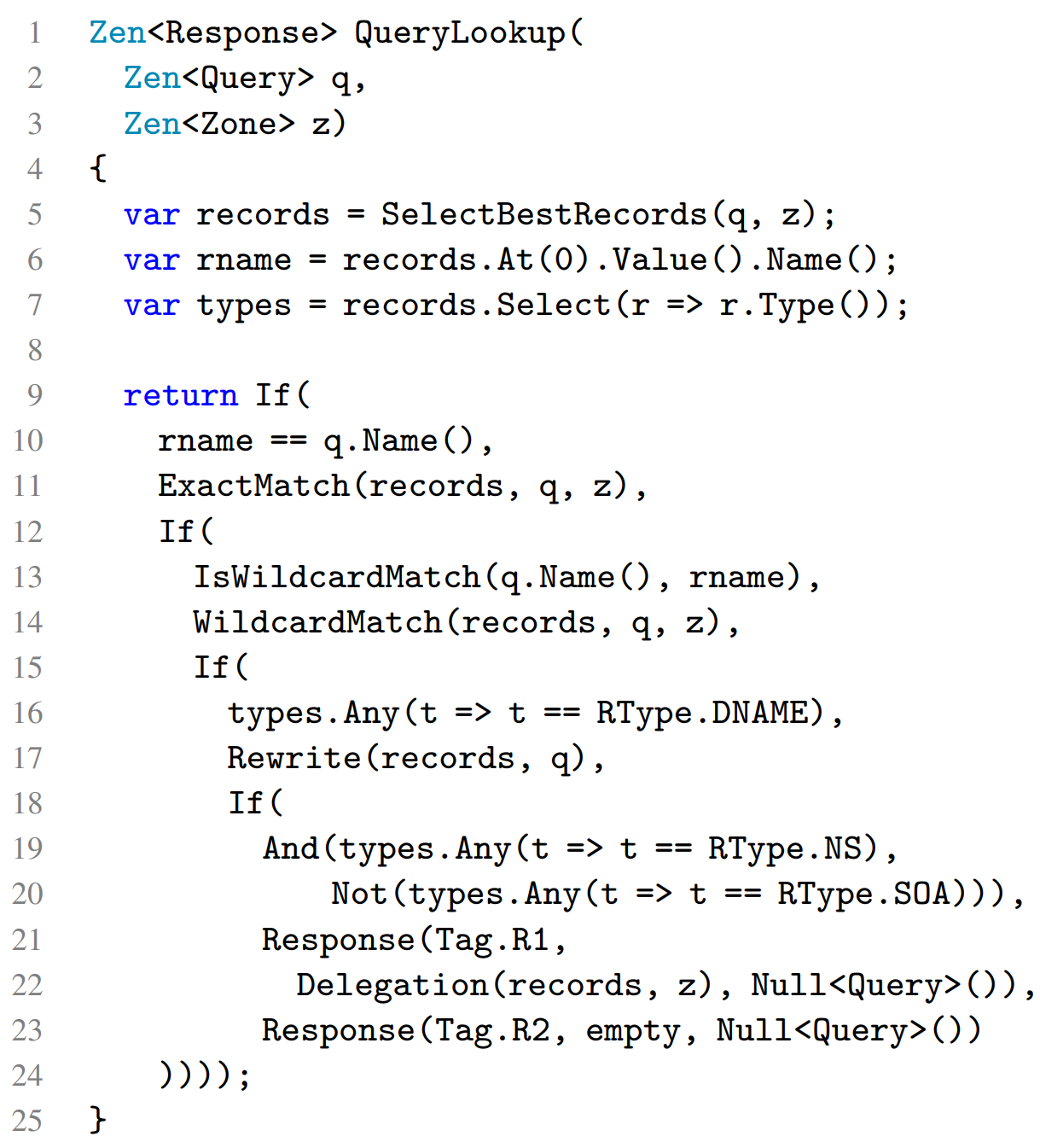

The test generation module of Ferret, the tool built specifically for DNS nameservers based on the SCALE approach, is a Zen implementation of the DNS semantics shown abstractly in Figure 2. Zen is a domain-specific language (DSL) embedded in C# with built-in support for symbolic execution. Figure 3 shows the model’s main query-lookup function, as depicted in the earlier Figure 2.

We mark the zone file and the query as symbolic using special Zen types, and the tool will then leverage automatic constraints solvers to produce concrete values for these inputs that take different execution paths, thereby enabling us to explore the space of DNS behaviors and feature interactions systematically. Our complete executable model consists of 520 lines of C# code. The model can also easily extend to new DNS RFCs that would be added in the future.

However, making the symbolic execution of the Zen code effective required us to address several challenges. We describe two major ones next.

Challenge 1: Generating Valid Zone Files

Naively performing symbolic execution produces zone files that get rejected by the implementations, failing to test any query resolution logic. They are rejected because a zone file must satisfy several well-formedness constraints to be accepted. For instance, if there is a DNAME record in a zone file for math.uni.edu., then no other records below this domain name may exist for any record type (e.g., an A record for fun.math.uni.edu. is not allowed). To solve this challenge, we formalized all the validity conditions from DNS RFCs as predicates in Zen and appended them to the constraints under which the query lookup function takes a particular execution path, thereby forcing the constraint solvers to generate only valid zone files.

Invalid zone files: We can also leverage Zen to systematically generate invalid zone files that violate the validity predicates. Invalid zone files help expose bugs, where an implementation accepts an invalid zone file and returns incorrect responses to the user. If an implementation rejects the invalid zone file, there is no issue, but if accepted, we need to test how the nameserver uses the zone for query lookup.

Challenge 2: Handling Unbounded Data

There are several sources of unboundedness in the formal model, making it hard for symbolic execution. A zone file can contain an unbounded number of records which makes the Zen model of query lookup have an unbounded number of paths, as the SelectBestRecords functions must examine all the records in the zone to pick the best ones. SMT constraint solvers have limited support for unbounded data structures such as lists, and in general, reasoning about such constraints requires quantifiers, which lead to undecidability. Therefore, we generate inputs with a bounded size, e.g., at most N records in a zone file. Though bounding things in this way can limit coverage, we have experimental evidence of the existence of a small-scope property for DNS (refer to our NSDI 2022 paper), meaning that many interesting behaviors, and behavioral errors, can be exercised with small tests.

Ferret generated 12,673 valid test cases for a maximum zone-file size of 4 in 6 hours. These tests exhaustively cover the model, i.e., these are all the possible paths in the model for that size bound. With this bound, each return point (leaf) in the model is covered by at least one test case. Each return point represents a distinct RFC-specified scenario for DNS resolution. Ferret then ran each test against all the eight implementations (shown in the table below) and found more than one response in the majority (8240) of them.

| Implementation | Language | Description | Bugs found | Crashes |

|---|---|---|---|---|

| Bind | C | de facto standard | 4 | 1 |

| PowerDNS | C++ | popular in North Europe | 2 | -- |

| NSD | C | hosts several TLDs | 4 | -- |

| Knot | C | hosts several TLDs | 5 | -- |

| CoreDNS | Go | used in Kubernetes | 6 | 1 |

| YADIFA | C | created by EURid (.eu) | 3 | -- |

| TrustDNS | Rust | security, safety focused | 4 | 1 |

| MaraDNS | C | lightweight server | 2 | -- |

Ferret then fingerprinted the 8240 tests and grouped them. A fingerprint consists of (1) the leaf in the formal model shown in Figure 2 and (2) the response groupings. An example fingerprint is 〈R1, {NSD, Knot, PowerDNS, YADIFA}, {Bind, CoreDNS}, {TrustDNS, MaraDNS}〉. The table below shows the number of tests generated for each case in the model, the number of tests where there was more than one group, and the number of unique fingerprints formed for each model case.

| Model case | #Tests | #Tests failing | #Fingerprints |

|---|---|---|---|

| E1 | 3180 | 239 | 7 |

| E2 | 12 | 10 | 5 |

| E3 | 96 | 12 | 3 |

| E4 | 6036 | 5312 | 11 |

| W1 | 60 | 33 | 8 |

| W2 | 24 | 21 | 9 |

| W3 | 18 | 16 | 1 |

| D1 | 230 | 65 | 4 |

| R1 | 2980 | 2529 | 27 |

| R2 | 37 | 3 | 1 |

In total the 8,240 tests with more than one group were partitioned into 76 unique fingerprints, for a reduction of more than two orders of magnitude. By manually inspecting the fingerprints, we identified 24 unique bugs, all of which have been confirmed as actual bugs (no false positives) and 14 of them are fixed by the time of writing.

Ferret generated 900 ill-formed zone files, 100 violating each of the 9 validity conditions in 2.5 hours. We used these zone files to test the four most widely used DNS implementations — Bind, NSD, Knot, PowerDNS — as these have a mature zone-file preprocessor available. As a first step, Ferret checked all of the zone files with each implementation’s preprocessor. For the implementations which accept the zone file, Ferret tested it and by inspecting the failed tests, we identified 6 new errors, all of which have been fixed.

Due to its importance as the “phonebook” of the Internet, DNS implementation bugs can impact millions of users. In this work, we introduced Ferret, the first automatic test generator for testing RFC compliance of DNS nameserver implementations. The SCALE approach underlying Ferret uses symbolic execution of a formal model to jointly generate configurations together with inputs. Ferret combines this technique with differential testing and fingerprinting to identify and automatically triage implementation errors. In total Ferret identified 30 new bugs, including at least two for each of the 8 implementations that we tested.

We believe our SCALE approach to RFC compliance testing and “ferreting” out bugs through (i) symbolic execution of a small formal model to jointly generate configurations together with inputs, combined with (ii) differential testing, and (iii) fingerprinting, could be useful more broadly beyond the DNS. For instance, there are many other complex and distributed protocols used at different network layers such as routing protocols like BGP and OSPF, flow control protocols like PFC, new transport layer protocols such as QUIC, and many more. It would be interesting future work to apply the SCALE methodology beyond DNS.