
The reliability of a system depends on all its components, and not just the hard drive(s). A natural question is therefore what the relative frequency of drive failures is, compared to that of other types of hardware failures. To answer this question we consult data sets HPC1, COM1, and COM2, since these data sets contain records for all types of hardware replacements, not only disk replacements. Table 3 shows, for each data set, a list of the ten most frequently replaced hardware components and the fraction of replacements made up by each component. We observe that while the actual fraction of disk replacements varies across the data sets (ranging from 20% to 50%), it makes up a significant fraction in all three cases. In the HPC1 and COM2 data sets, disk drives are the most commonly replaced hardware component accounting for 30% and 50% of all hardware replacements, respectively. In the COM1 data set, disks are a close runner-up accounting for nearly 20% of all hardware replacements.

While Table 3 suggests that disks are among the most commonly replaced hardware components, it does not necessarily imply that disks are less reliable or have a shorter lifespan than other hardware components. The number of disks in the systems might simply be much larger than that of other hardware components. In order to compare the reliability of different hardware components, we need to normalize the number of component replacements by the component's population size.

Unfortunately, we do not have, for any of the systems, exact population counts of all hardware components. However, we do have enough information in HPC1 to estimate counts of the four most frequently replaced hardware components (CPU, memory, disks, motherboards). We estimate that there is a total of 3,060 CPUs, 3,060 memory dimms, and 765 motherboards, compared to a disk population of 3,406. Combining these numbers with the data in Table 3, we conclude that for the HPC1 system, the rate at which in five years of use a memory dimm was replaced is roughly comparable to that of a hard drive replacement; a CPU was about 2.5 times less often replaced than a hard drive; and a motherboard was 50% less often replaced than a hard drive.
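The normalization described above can be sketched as follows. The population sizes are the HPC1 estimates quoted in the text; the replacement counts are hypothetical placeholders chosen only to make the sketch runnable, since the Table 3 values are not reproduced here, and the function names are illustrative.

```python
# Estimated HPC1 component populations, as quoted in the text.
POPULATION = {"cpu": 3060, "memory": 3060, "motherboard": 765, "disk": 3406}


def per_unit_rates(replacements, population=POPULATION):
    """Return replacements per installed unit for each component type."""
    return {name: replacements[name] / population[name] for name in replacements}


def relative_to(rates, baseline="disk"):
    """Express each per-unit rate as a multiple of the baseline component's rate."""
    return {name: rate / rates[baseline] for name, rate in rates.items()}


# HYPOTHETICAL replacement counts, for illustration only (not taken from Table 3).
example_counts = {"cpu": 100, "memory": 300, "motherboard": 50, "disk": 340}

rates = per_unit_rates(example_counts)
ratios = relative_to(rates)
for name in sorted(ratios):
    print(f"{name}: {ratios[name]:.2f}x the per-unit disk replacement rate")
```

A ratio below 1 means the component was replaced less often per installed unit than a disk, which is the comparison the paragraph draws.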

Table 2: Node outages that were attributed to hardware problems broken down by the responsible hardware component. This includes all outages, not only those that required replacement of a hardware component.

The above discussion covers only failures that required a hardware component to be replaced. When running a large system one is often interested in any hardware failure that causes a node outage, not only those that necessitate a hardware replacement. We therefore obtained the HPC1 troubleshooting records for any node outage that was attributed to a hardware problem, including problems that required hardware replacements as well as problems that were fixed in some other way. Table 2 gives a breakdown of all records in the troubleshooting data, broken down by the hardware component that was identified as the root cause. We observe that 16% of all outage records pertain to disk drives (compared to 30% in Table 3), making it the third most common root cause reported in the data. The two most commonly reported outage root causes are CPU and memory, with 44% and 29%, respectively.

For a complete picture, we also need to take the severity of an anomalous event into account. A closer look at the HPC1 troubleshooting data reveals that a large number of the problems attributed to CPU and memory failures were triggered by parity errors, i.e. cases in which the number of errors is too large for the embedded error correcting code to correct them. In those cases, a simple reboot will bring the affected node back up. On the other hand, the majority of the problems that were attributed to hard disks (around 90%) led to a drive replacement, which is a more expensive and time-consuming repair action.

Ideally, we would like to compare the frequency of hardware problems that we report above with the frequency of other types of problems, such as software failures, network problems, etc. Unfortunately, we do not have this type of information for the systems in Table 1. However, in recent work [27] we have analyzed failure data covering any type of node outage, including those caused by hardware, software, network problems, environmental problems, or operator mistakes. The data was collected over a period of 9 years on more than 20 HPC clusters and contains detailed root cause information. We found that, for most HPC systems in this data, more than 50% of all outages are attributed to hardware problems and around 20% of all outages are attributed to software problems. Consistent with the data in Table 2, the two most common hardware components to cause a node outage are memory and CPU. The data of this recent study [27] is not used in this paper because it does not contain information about storage replacements.

4 Disk replacement rates

4.1 Disk replacements and MTTF


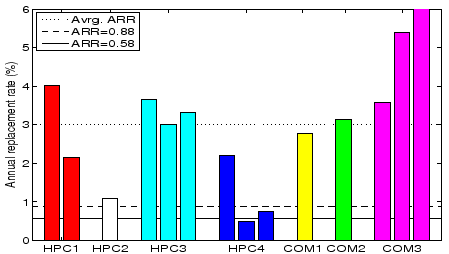

In the following, we study how field experience with disk replacements compares to datasheet specifications of disk reliability. Figure 1 shows the datasheet AFRs (horizontal solid and dashed line), the observed ARRs for each of the seven data sets and the weighted average ARR for all disks less than five years old (dotted line). For HPC1, HPC3, HPC4 and COM3, which cover different types of disks, the graph contains several bars, one for each type of disk, in the left-to-right order of the corresponding top-to-bottom entries in Table 1. Since at this point we are not interested in wearout effects after the end of a disk's nominal lifetime, we have included in Figure 1 only data for drives within their nominal lifetime of five years. In particular, we do not include a bar for the fourth type of drives in COM3 (see Table 1), which were deployed in 1998 and were more than seven years old at the end of the data collection. These possibly ``obsolete'' disks experienced an ARR, during the measurement period, of 24%. Since these drives are well outside the vendor's nominal lifetime for disks, it is not surprising that the disks might be wearing out. All other drives were within their nominal lifetime and are included in the figure.

Figure 1 shows a significant discrepancy between the observed ARR and the datasheet AFR for all data sets. While the datasheet AFRs are between 0.58% and 0.88%, the observed ARRs range from 0.5% to as high as 13.5%. That is, the observed ARRs, broken down by data set and drive type, are up to a factor of 15 higher than the datasheet AFRs.

Most commonly, the observed ARR values are in the 3% range. For example, the data for HPC1, which covers almost exactly the entire nominal lifetime of five years, exhibits an ARR of 3.4% (significantly higher than the datasheet AFR of 0.88%). The average ARR over all data sets (weighted by the number of drives in each data set) is 3.01%. Even after removing all COM3 data, which exhibits the highest ARRs, the average ARR is still 2.86%, 3.3 times higher than 0.88%.
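The arithmetic behind these comparisons can be sketched as follows. The sketch assumes that a datasheet AFR is obtained by dividing the hours in a year (8,760, i.e. drives powered on continuously) by the datasheet MTTF; the function names are illustrative, and the weighted-average helper is shown only in general form because per-data-set drive counts are not repeated here.

```python
HOURS_PER_YEAR = 8760  # assumption: drives are powered on all year


def mttf_to_afr_percent(mttf_hours):
    """Convert a datasheet MTTF (hours) into an annual failure rate in percent."""
    return 100.0 * HOURS_PER_YEAR / mttf_hours


def weighted_average_arr(arr_percent, drive_counts):
    """Average per-data-set ARRs, weighting each by its number of drives."""
    total_drives = sum(drive_counts)
    return sum(a * n for a, n in zip(arr_percent, drive_counts)) / total_drives


datasheet_afr = mttf_to_afr_percent(1_000_000)  # about 0.88%
ratio_all = 3.01 / datasheet_afr                # weighted average ARR over all data sets
ratio_without_com3 = 2.86 / datasheet_afr       # weighted average ARR without COM3

print(f"datasheet AFR: {datasheet_afr:.2f}%")
print(f"observed/datasheet: {ratio_all:.1f}x (all), {ratio_without_com3:.1f}x (without COM3)")
```

Under the same assumption, an MTTF of 1,500,000 hours gives roughly 0.58%, the lower end of the datasheet range quoted above.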

It is interesting to observe that for these data sets there is no significant discrepancy between replacement rates for SCSI and FC drives, commonly represented as the most reliable types of disk drives, and SATA drives, frequently described as lower quality. For example, the ARRs of drives in the HPC4 data set, which are exclusively SATA drives, are among the lowest of all data sets. Moreover, the HPC3 data set includes both SCSI and SATA drives (as part of the same system in the same operating environment) and they have nearly identical replacement rates. Of course, these HPC3 SATA drives were decommissioned because of media error rates attributed to lubricant breakdown (recall Section 2.1), our only evidence of a bad batch, so perhaps more data is needed to better understand the impact of batches in overall quality.

It is also interesting to observe that the only drives that have an observed ARR below the datasheet AFR are the second and third type of drives in data set HPC4. One possible reason might be that these are relatively new drives, all less than one year old (recall Table 1). Also, these ARRs are based on only 16 replacements, perhaps too little data to draw a definitive conclusion.

A natural question arises: why are the observed disk replacement rates so much higher in the field data than the datasheet MTTF would suggest, even for drives in the first years of operation? As discussed in Sections 2.1 and 2.2, there are multiple possible reasons.

First, customers and vendors might not always agree on the definition of when a drive is ``faulty''. The fact that a disk was replaced implies that it failed some (possibly customer specific) health test. When a health test is conservative, it might lead to replacing a drive that the vendor tests would find to be healthy. Note, however, that even if we scale down the ARRs in Figure 1 to 57% of their actual values, to estimate the fraction of drives returned to the manufacturer that fail the latter's health test [1], the resulting AFR estimates are still more than a factor of two higher than datasheet AFRs in most cases.

Second, datasheet MTTFs are typically determined based on accelerated (stress) tests, which make certain assumptions about the operating conditions under which the disks will be used (e.g. that the temperature will always stay below some threshold), the workloads and ``duty cycles'' or powered-on hours patterns, and that certain data center handling procedures are followed. In practice, operating conditions might not always be as ideal as assumed in the tests used to determine datasheet MTTFs. A more detailed discussion of factors that can contribute to a gap between expected and measured drive reliability is given by Elerath and Shah [6].

Below we summarize the key observations of this section.

Observation 1: Variance between datasheet MTTF and disk replacement rates in the field was larger than we expected. The weighted average ARR was 3.4 times larger than 0.88%, corresponding to a datasheet MTTF of 1,000,000 hours.

Observation 2: For older systems (5-8 years of age), datasheet MTTFs underestimated replacement rates by as much as a factor of 30.

Observation 3: Even during the first few years of a system's lifetime, when wear-out is not expected to be a significant factor, the difference between datasheet MTTF and observed time to disk replacement was as large as a factor of 6.

Observation 4: In our data sets, the replacement rates of SATA disks are not worse than the replacement rates of SCSI or FC disks. This may indicate that disk-independent factors, such as operating conditions, usage and environmental factors, affect replacement rates more than component specific factors. However, the only evidence we have of a bad batch of disks was found in a collection of SATA disks experiencing high media error rates. We have too little data on bad batches to estimate the relative frequency of bad batches by type of disk, although there is plenty of anecdotal evidence that bad batches are not unique to SATA disks.

4.2 Age-dependent replacement rates

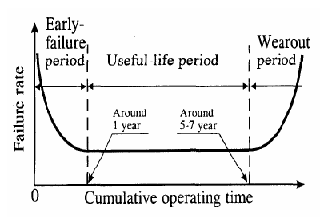

One aspect of disk failures that single-value metrics such as MTTF and AFR cannot capture is that in real life failure rates are not constant [5]. Failure rates of hardware products typically follow a ``bathtub curve'' with high failure rates at the beginning (infant mortality) and the end (wear-out) of the lifecycle. Figure 2 shows the failure rate pattern that is expected for the life cycle of hard drives [4,5,33]. According to this model, the first year of operation is characterized by early failures (or infant mortality). In years 2-5, the failure rates are approximately in steady state, and then, after years 5-7, wear-out starts to kick in.

The common concern that MTTFs do not capture infant mortality has led the International Disk drive Equipment and Materials Association (IDEMA) to propose a new standard for specifying disk drive reliability, based on the failure model depicted in Figure 2 [5,33]. The new standard requests that vendors provide four different MTTF estimates, one for the first 1-3 months of operation, one for months 4-6, one for months 7-12, and one for months 13-60.

The goal of this section is to study, based on our field replacement data, how disk replacement rates in large-scale installations vary over a system's life cycle. Note that we only see customer-visible replacements. Any infant mortality failures caught in manufacturing, system integration or installation testing are probably not recorded in production replacement logs.

The best data sets to study replacement rates across the system life cycle are HPC1 and the first type of drives of HPC4. The reason is that these data sets span a long enough time period (5 and 3 years, respectively) and each cover a reasonably homogeneous hard drive population, allowing us to focus on the effect of age.

We study the change in replacement rates as a function of age at two different time granularities, on a per-month and a per-year basis, to make it easier to detect both short term and long term trends. Figure 3 shows the annual replacement rates for the disks in the compute nodes of system HPC1 (left), the file system nodes of system HPC1 (middle) and the first type of HPC4 drives (right), at a yearly granularity.

We make two interesting observations. First, replacement rates in all years, except for year 1, are larger than the datasheet MTTF would suggest. For example, in HPC1's second year, replacement rates are 20% larger than expected for the file system nodes, and a factor of two larger than expected for the compute nodes. In year 4 and year 5 (which are still within the nominal lifetime of these disks), the actual replacement rates are 7-10 times higher than the failure rates we expected based on datasheet MTTF.

The second observation is that replacement rates are rising significantly over the years, even during early years in the lifecycle. Replacement rates in HPC1 nearly double from year 1 to 2, or from year 2 to 3. This observation suggests that wear-out may start much earlier than expected, leading to steadily increasing replacement rates during most of a system's useful life. This is an interesting observation because it does not agree with the common assumption that after the first year of operation, failure rates reach a steady state for a few years, forming the ``bottom of the bathtub''.

Next, we move to the per-month view of replacement rates, shown in Figure 4. We observe that for the HPC1 file system nodes there are no replacements during the first 12 months of operation, i.e. there is no detectable infant mortality. For HPC4, the ARR of drives is not higher in the first few months of the first year than in the last few months of the first year. In the case of the HPC1 compute nodes, infant mortality is limited to the first month of operation and is not above the steady state estimate of the datasheet MTTF. Looking at the lifecycle after month 12, we again see continuously rising replacement rates, instead of the expected ``bottom of the bathtub''.

Below we summarize the key observations of this section.

Observation 5: Contrary to common and proposed models, hard drive replacement rates do not enter steady state after the first year of operation. Instead replacement rates seem to steadily increase over time.

Observation 6: Early onset of wear-out seems to have a much stronger impact on lifecycle replacement rates than infant mortality, as experienced by end customers, even when considering only the first three or five years of a system's lifetime. We therefore recommend that wear-out be incorporated into new standards for disk drive reliability. The new standard suggested by IDEMA does not take wear-out into account [5,33].

5 Statistical properties of disk failures

In the previous sections, we have focused on aggregate statistics, e.g. the average number of disk replacements in a time period. Often one wants more information on the statistical properties of the time between failures than just the mean. For example, determining the expected time to failure for a RAID system requires an estimate of the probability of experiencing a second disk failure in a short period, that is, while reconstructing lost data from redundant data. This probability depends on the underlying probability distribution and may be poorly estimated by scaling an annual failure rate down to a few hours.

The most common assumption about the statistical characteristics of disk failures is that they form a Poisson process, which implies two key properties:

- Failures are independent.

- The time between failures follows an exponential distribution.

The goal of this section is to evaluate how realistic the above assumptions are. We begin by providing statistical evidence that disk failures in the real world are unlikely to follow a Poisson process. We then examine each of the two key properties (independent failures and exponential time between failures) independently and characterize in detail how and where the Poisson assumption breaks. In our study, we focus on the HPC1 data set, since this is the only data set that contains precise timestamps for when a problem was detected (rather than just timestamps for when repair took place).

5.1 The Poisson assumption


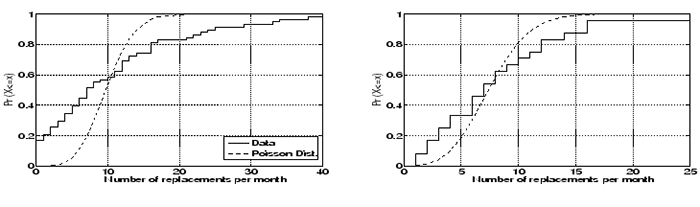

The Poisson assumption implies that the number of failures during a given time interval (e.g. a week or a month) is distributed according to the Poisson distribution. Figure 5 (left) shows the empirical CDF of the number of disk replacements observed per month in the HPC1 data set, together with the Poisson distribution fit to the data's observed mean.

We find that the Poisson distribution does not provide a good visual fit for the number of disk replacements per month in the data, in particular for very small and very large numbers of replacements in a month. For example, under the Poisson distribution the probability of seeing 20 or more failures in a given month is less than 0.0024, yet we see 20 or more disk replacements in nearly 20% of all months in HPC1's lifetime. Similarly, the probability of seeing zero or one failure in a given month is only 0.0003 under the Poisson distribution, yet in 20% of all months in HPC1's lifetime we observe zero or one disk replacement.

A chi-square test reveals that we can reject the hypothesis that the number of disk replacements per month follows a Poisson distribution at the 0.05 significance level. All above results are similar when looking at the distribution of number of disk replacements per day or per week, rather than per month.
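The tail comparison above can be sketched as follows: fit a Poisson distribution to the observed mean of the monthly counts, then compare the fraction of months with very few or very many replacements against the corresponding Poisson probabilities. The monthly counts below are hypothetical placeholders, not HPC1 data, and the function names are illustrative.

```python
import math


def poisson_pmf(k, lam):
    """P(X = k) for a Poisson variable with mean lam > 0 (computed in log space)."""
    return math.exp(k * math.log(lam) - lam - math.lgamma(k + 1))


def poisson_cdf(k, lam):
    """P(X <= k) for a Poisson variable with mean lam > 0."""
    return sum(poisson_pmf(i, lam) for i in range(k + 1))


def compare_tails(monthly_counts, low=1, high=20):
    """Compare empirical and Poisson probabilities of <= low and >= high events."""
    n = len(monthly_counts)
    lam = sum(monthly_counts) / n  # Poisson fit uses the observed mean
    return {
        "mean": lam,
        "empirical_low": sum(c <= low for c in monthly_counts) / n,
        "poisson_low": poisson_cdf(low, lam),
        "empirical_high": sum(c >= high for c in monthly_counts) / n,
        "poisson_high": 1.0 - poisson_cdf(high - 1, lam),
    }


# HYPOTHETICAL monthly replacement counts, for illustration only.
example_months = [0, 1, 8, 9, 10, 11, 12, 22, 25, 7]
result = compare_tails(example_months)
for key, value in result.items():
    print(f"{key}: {value:.4f}")
```

When the empirical tail fractions are far larger than the Poisson tail probabilities, as in this toy input, the counts are more dispersed than a Poisson distribution with the same mean allows.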

One reason for the poor fit of the Poisson distribution might be that failure rates are not steady over the lifetime of HPC1. We therefore repeat the same process for only part of HPC1's lifetime. Figure 5 (right) shows the distribution of disk replacements per month, using only data from years 2 and 3 of HPC1. The Poisson distribution achieves a better fit for this time period and the chi-square test cannot reject the Poisson hypothesis at a significance level of 0.05. Note, however, that this does not necessarily mean that the failure process during years 2 and 3 does follow a Poisson process, since this would also require the two key properties of a Poisson process (independent failures and exponential time between failures) to hold. We study these two properties in detail in the next two sections.

5.2 Correlations

In this section, we focus on the first key property of a Poisson process, the independence of failures. Intuitively, it is clear that in practice failures of disks in the same system are never completely independent. The failure probability of a disk depends on many factors, including environmental factors such as temperature that are shared by all disks in the system. When the temperature in a machine room is far outside nominal values, all disks in the room experience a higher than normal probability of failure. The goal of this section is to statistically quantify and characterize the correlation between disk replacements.

We start with a simple test in which we determine the correlation of the number of disk replacements observed in successive weeks or months by computing the correlation coefficient between the number of replacements in a given week or month and the previous week or month. For data coming from a Poisson process we would expect correlation coefficients to be close to 0. Instead we find significant levels of correlation, both at the monthly and the weekly level.

The correlation coefficient between consecutive weeks is 0.72, and the correlation coefficient between consecutive months is 0.79. Repeating the same test using only the data of one year at a time, we still find significant levels of correlation with correlation coefficients of 0.4-0.8.

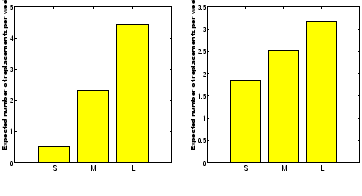

Statistically, the above correlation coefficients indicate a strong correlation, but it would be nice to have a more intuitive interpretation of this result. One way of thinking of the correlation of failures is that the failure rate in one time interval is predictive of the failure rate in the following time interval. To test the strength of this prediction, we assign each week in HPC1's life to one of three buckets, depending on the number of disk replacements observed during that week, creating buckets for weeks with a small, medium, and large number of replacements, respectively. The expectation is that a week that follows a week with a ``small'' number of disk replacements is more likely to see a small number of replacements than a week that follows a week with a ``large'' number of replacements. However, if failures are independent, the number of replacements in a week will not depend on the number in a prior week.


Figure 7 (left) shows the expected number of disk replacements in a week of HPC1's lifetime as a function of which bucket the preceding week falls in. We observe that the expected number of disk replacements in a week varies by a factor of 9, depending on whether the preceding week falls into the first or third bucket, while we would expect no variation if failures were independent. When repeating the same process on the data of only year 3 of HPC1's lifetime, we see a difference of close to a factor of 2 between the first and third bucket.
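The bucket analysis above can be sketched as follows. The bucket thresholds (`small_max`, `medium_max`) are hypothetical parameters, since the boundaries used for HPC1 are not given in this section, and the weekly counts are made-up illustration data.

```python
def bucket_of(count, small_max, medium_max):
    """Classify a weekly replacement count as 'small', 'medium' or 'large'."""
    if count <= small_max:
        return "small"
    if count <= medium_max:
        return "medium"
    return "large"


def expected_next_by_bucket(weekly_counts, small_max, medium_max):
    """Mean replacements in a week, grouped by the bucket of the preceding week."""
    sums, nums = {}, {}
    for previous, current in zip(weekly_counts, weekly_counts[1:]):
        bucket = bucket_of(previous, small_max, medium_max)
        sums[bucket] = sums.get(bucket, 0) + current
        nums[bucket] = nums.get(bucket, 0) + 1
    return {bucket: sums[bucket] / nums[bucket] for bucket in sums}


# HYPOTHETICAL weekly counts and thresholds, for illustration only.
example_weeks = [0, 0, 5, 5, 10, 10]
by_bucket = expected_next_by_bucket(example_weeks, small_max=2, medium_max=7)
print(by_bucket)
```

If failures were independent, the three conditional means would be roughly equal; a large spread between the "small" and "large" buckets indicates that one week's count is predictive of the next.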


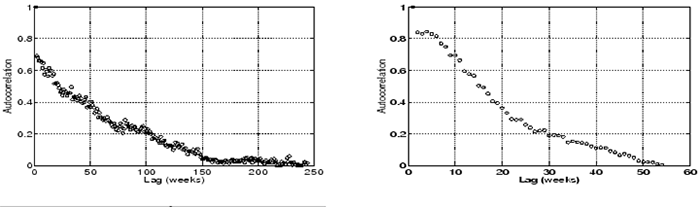

So far, we have only considered correlations between successive time intervals, e.g. between two successive weeks. A more general way to characterize correlations is to study correlations at different time lags by using the autocorrelation function. Figure 6 (left) shows the autocorrelation function for the number of disk replacements per week computed across the HPC1 data set. For a stationary failure process (e.g. data coming from a Poisson process) the autocorrelation would be close to zero at all lags. Instead, we observe strong autocorrelation even for large lags in the range of 100 weeks (nearly 2 years).

We repeated the same autocorrelation test for only parts of HPC1's lifetime and find similar levels of autocorrelation. Figure 6 (right), for example, shows the autocorrelation function computed only on the data of the third year of HPC1's life. Correlation is significant for lags in the range of up to 30 weeks.
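A minimal sketch of the sample autocorrelation function used in this kind of analysis is shown below. It uses the common estimator that normalizes by the lag-0 sum of squared deviations; whether this matches the exact estimator behind Figure 6 is an assumption. The input series is a toy example, not HPC1 data.

```python
def autocorrelation(series, max_lag):
    """Sample autocorrelation of a series for lags 0..max_lag."""
    n = len(series)
    mean = sum(series) / n
    deviations = [x - mean for x in series]
    denominator = sum(d * d for d in deviations)
    if denominator == 0:
        raise ValueError("series is constant; autocorrelation is undefined")
    return [
        sum(deviations[i] * deviations[i + lag] for i in range(n - lag)) / denominator
        for lag in range(max_lag + 1)
    ]


# Toy input: a strictly alternating series is strongly negatively correlated at lag 1.
toy_series = [1, -1, 1, -1, 1, -1, 1, -1]
acf = autocorrelation(toy_series, max_lag=3)
print([round(value, 3) for value in acf])
```

Applied to weekly replacement counts, values that stay well above zero at large lags correspond to the slowly decaying autocorrelation described above.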

Another measure for dependency is long range dependence, as quantified by the Hurst exponent. The Hurst exponent measures how fast the autocorrelation function drops with increasing lags. A Hurst parameter between 0.5 and 1 signifies a statistical process with a long memory and a slow drop of the autocorrelation function. Applying several different estimators (see Section 2) to the HPC1 data, we determine a Hurst exponent between 0.6 and 0.8 at the weekly granularity. These values are comparable to Hurst exponents reported for Ethernet traffic, which is known to exhibit strong long range dependence [16].

Observation 7: Disk replacement counts exhibit significant levels of autocorrelation.

Observation 8: Disk replacement counts exhibit long-range dependence.

5.3 Distribution of time between failure

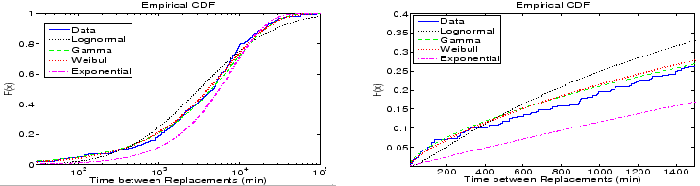

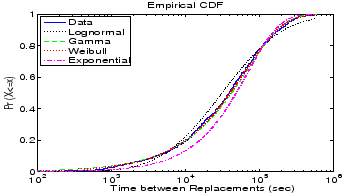

In this section, we focus on the second key property of a Poisson failure process, the exponentially distributed time between failures. Figure 8 shows the empirical cumulative distribution function of time between disk replacements as observed in the HPC1 system and four distributions matched to it.

We find that visually the gamma and Weibull distributions are the best fit to the data, while the exponential and lognormal distributions provide a poorer fit. This agrees with the results we obtain from the negative log-likelihood, which indicate that the Weibull distribution is the best fit, closely followed by the gamma distribution. Performing a chi-square test, we can reject the hypothesis that the underlying distribution is exponential or lognormal at a significance level of 0.05. On the other hand, the hypothesis that the underlying distribution is a Weibull or a gamma cannot be rejected at a significance level of 0.05.

Figure 8 (right) shows a close up of the empirical CDF and the distributions matched to it, for small time-between-replacement values (less than 24 hours). The reason that this area is particularly interesting is that a key application of the exponential assumption is in estimating the time until data loss in a RAID system. This time depends on the probability of a second disk failure during reconstruction, a process which typically lasts on the order of a few hours. The graph shows that the exponential distribution greatly underestimates the probability of a second failure during this time period. For example, the probability of seeing two drives in the cluster fail within one hour is four times larger under the real data, compared to the exponential distribution. The probability of seeing two drives in the cluster fail within the same 10 hours is two times larger under the real data, compared to the exponential distribution.
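The comparison above, between the observed probability of a short gap between replacements and the probability predicted by an exponential distribution with the same mean, can be sketched as follows. The gap values are hypothetical and the function names are illustrative; the sketch only shows the mechanics of the comparison.

```python
import math


def exponential_cdf(t, mean):
    """P(T <= t) for an exponential distribution with the given mean."""
    return 1.0 - math.exp(-t / mean)


def empirical_cdf(gaps, t):
    """Fraction of observed gaps that are at most t."""
    return sum(g <= t for g in gaps) / len(gaps)


def underestimation_factor(gaps, t):
    """How many times more likely a gap <= t is in the data than under the exponential fit."""
    mean = sum(gaps) / len(gaps)
    return empirical_cdf(gaps, t) / exponential_cdf(t, mean)


# HYPOTHETICAL times between replacements in hours, for illustration only.
example_gaps = [0.5, 0.5, 100.0, 200.0, 179.0]
factor_one_hour = underestimation_factor(example_gaps, 1.0)
print(f"gap <= 1 hour is {factor_one_hour:.1f}x more likely than the exponential fit predicts")
```

A factor above 1 at reconstruction-scale time windows is what makes the exponential assumption optimistic for RAID data-loss estimates.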


The poor fit of the exponential distribution might be due to the fact that failure rates change over the lifetime of the system, creating variability in the observed times between disk replacements that the exponential distribution cannot capture. We therefore repeated the above analysis considering only segments of HPC1's lifetime. Figure 9 shows as one example the results from analyzing the time between disk replacements in year 3 of HPC1's operation. While visually the exponential distribution now seems a slightly better fit, we can still reject the hypothesis of an underlying exponential distribution at a significance level of 0.05. The same holds for other 1-year and even 6-month segments of HPC1's lifetime. This leads us to believe that even during shorter segments of HPC1's lifetime the time between replacements is not realistically modeled by an exponential distribution.

While it might not come as a surprise that the simple exponential distribution does not provide as good a fit as the more flexible two-parameter distributions, an interesting question is what properties of the empirical time between failures make it different from a theoretical exponential distribution. We identify as a first differentiating feature that the data exhibits higher variability than a theoretical exponential distribution. The data has a squared coefficient of variation (C²) of 2.4, which is more than two times higher than the C² of an exponential distribution, which is 1.
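The variability comparison above can be illustrated with a short sketch, assuming the variability measure is the squared coefficient of variation (variance divided by the squared mean). The simulated exponential sample and its mean of 96 hours are arbitrary choices for illustration, not HPC1 data.

```python
import random


def squared_cv(samples):
    """Squared coefficient of variation: population variance / mean squared."""
    n = len(samples)
    mean = sum(samples) / n
    variance = sum((x - mean) ** 2 for x in samples) / n
    return variance / mean ** 2


# Simulated exponential gaps (arbitrary mean of 96 hours); the measure should be close to 1.
rng = random.Random(0)
exponential_sample = [rng.expovariate(1.0 / 96.0) for _ in range(100_000)]
c2_exponential = squared_cv(exponential_sample)
print(f"squared CV of simulated exponential sample: {c2_exponential:.3f}")
```

A value well above 1 for observed gaps, such as the 2.4 reported above, means the gaps are more spread out relative to their mean than an exponential distribution permits.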

A second differentiating feature is that the time between disk replacements in the data exhibits decreasing hazard rates. Recall from Section 2.4 that the hazard rate function measures how the time since the last failure influences the expected time until the next failure. An increasing hazard rate function predicts that if the time since a failure is long then the next failure is coming soon. And a decreasing hazard rate function predicts the reverse. The table below summarizes the parameters for the Weibull and gamma distribution that provided the best fit to the data.

| Node group | Weibull shape | Weibull scale | Gamma shape | Gamma scale |
|---|---|---|---|---|
| HPC1 compute nodes | 0.73 | 0.037 | 0.65 | 176.4 |
| HPC1 filesystem nodes | 0.76 | 0.013 | 0.64 | 482.6 |
| All HPC1 nodes | 0.71 | 0.049 | 0.59 | 160.9 |

Disk replacements in the filesystem nodes, as well as the compute nodes, and across all nodes, are fit best with gamma and Weibull distributions with a shape parameter less than 1, a clear indicator of decreasing hazard rates.
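To see why a shape parameter below 1 implies a decreasing hazard rate, the sketch below evaluates the standard Weibull hazard function h(t) = (k/λ)(t/λ)^(k-1) with shape k and scale λ. The shape 0.71 is the value fitted for all HPC1 nodes; the scale of 100 hours is a hypothetical value, because the parameterization behind the fitted scale column is not specified here.

```python
def weibull_hazard(t, shape, scale):
    """Hazard rate of a Weibull distribution at time t > 0 (standard shape/scale form)."""
    return (shape / scale) * (t / scale) ** (shape - 1)


SHAPE = 0.71   # fitted shape for all HPC1 nodes, from the table above
SCALE = 100.0  # HYPOTHETICAL scale in hours, for illustration only

for hours in (1, 10, 100, 1000):
    print(f"t = {hours:>4} h: hazard = {weibull_hazard(hours, SHAPE, SCALE):.5f} per hour")
```

With a shape of exactly 1 the exponent vanishes and the hazard is the constant 1/λ, which is the exponential case; any shape below 1 makes the hazard fall as the time since the last replacement grows.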

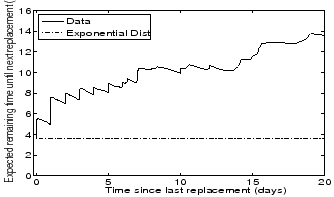

Figure 10 illustrates the decreasing hazard rates of the time between replacements by plotting the expected remaining time until the next disk replacement (Y-axis) as a function of the time since the last disk replacement (X-axis). We observe that right after a disk was replaced the expected time until the next disk replacement becomes necessary was around 4 days, both for the empirical data and the exponential distribution. In the case of the empirical data, after surviving for ten days without a disk replacement the expected remaining time until the next replacement had grown from initially 4 to 10 days; and after surviving for a total of 20 days without disk replacements the expected time until the next failure had grown to 15 days. In comparison, under an exponential distribution the expected remaining time stays constant (also known as the memoryless property).
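The quantity plotted in Figure 10 can be estimated from a list of observed gaps between replacements as sketched below: among gaps longer than the elapsed time, average the remaining part. The gap values are hypothetical and the function name is illustrative.

```python
def expected_remaining_time(gaps, elapsed):
    """Mean remaining time until the next replacement, given `elapsed` time without one."""
    remaining = [g - elapsed for g in gaps if g > elapsed]
    if not remaining:
        raise ValueError("no observed gap exceeds the elapsed time")
    return sum(remaining) / len(remaining)


# HYPOTHETICAL times between replacements in days, for illustration only.
example_gaps_days = [1, 2, 3, 10, 20]
at_start = expected_remaining_time(example_gaps_days, 0)
after_five_days = expected_remaining_time(example_gaps_days, 5)
print(f"expected wait at t=0: {at_start:.1f} days; after 5 quiet days: {after_five_days:.1f} days")
```

For exponentially distributed gaps this estimate would stay roughly constant as `elapsed` grows; an estimate that increases with `elapsed`, as in this toy input, is the signature of a decreasing hazard rate.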

Note that the above result is not in contradiction with the increasing replacement rates we observed in Section 4.2 as a function of drive age, since here we look at the distribution of the time between disk replacements in a cluster, not disk lifetime distributions (i.e. how long a drive lived until it was replaced).

Observation 9: The hypothesis that time between disk replacements follows an exponential distribution can be rejected with high confidence.

Observation 10: The time between disk replacements has a higher variability than that of an exponential distribution.

Observation 11: The distribution of time between disk replacements exhibits decreasing hazard rates, that is, the expected remaining time until the next disk replacement grows with the time since the last disk replacement.

6 Related work

There is very little work published on analyzing failures in real, large-scale storage systems, probably as a result of the reluctance of the owners of such systems to release failure data.

Among the few existing studies is the work by Talagala et al. [29], which provides a study of error logs in a research prototype storage system used for a web server and includes a comparison of failure rates of different hardware components. They identify SCSI disk enclosures as the least reliable components and SCSI disks as one of the most reliable components, which differs from our results.

In a recently initiated effort, Schwarz et al. [28] have started to gather failure data at the Internet Archive, which they plan to use to study disk failure rates and bit rot rates and how they are affected by different environmental parameters. In their preliminary results, they report ARR values of 2-6% and note that the Internet Archive does not seem to see significant infant mortality. Both observations are in agreement with our findings.

Gray [31] reports the frequency of uncorrectable read errors in disks and finds that their numbers are smaller than vendor data sheets suggest. Gray also provides ARR estimates for SCSI and ATA disks, in the range of 3-6%, which is in the range of ARRs that we observe for SCSI drives in our data sets.

Pinheiro et al. analyze disk replacement data from a large population of serial and parallel ATA drives [23]. They report ARR values ranging from 1.7% to 8.6%, which agrees with our results. The focus of their study is on the correlation between various system parameters and drive failures. They find that while temperature and utilization exhibit much less correlation with failures than expected, the values of several SMART counters correlate highly with failures. For example, they report that after a scrub error, drives are 39 times more likely to fail within 60 days than drives without scrub errors, and that 44% of all failed drives had increased SMART counts in at least one of four specific counters.

Many have criticized the accuracy of MTTF based failure rate predictions and have pointed out the need for more realistic models. A particular concern is the fact that a single MTTF value cannot capture life cycle patterns [4,5,33]. Our analysis of life cycle patterns shows that this concern is justified, since we find failure rates to vary quite significantly over even the first two to three years of the life cycle. However, the most common life cycle concern in published research is underrepresenting infant mortality. Our analysis does not support this. Instead we observe significant underrepresentation of the early onset of wear-out.

Early work on RAID systems [8] provided some statistical analysis of time between disk failures for disks used in the 1980s, but didn't find sufficient evidence to reject the hypothesis of exponential times between failures with high confidence. However, time between failures has been analyzed for other, non-storage data in several studies [11,17,26,27,30,32]. Four of the studies use distribution fitting and find the Weibull distribution to be a good fit [11,17,27,32], which agrees with our results. All studies looked at the hazard rate function, but came to different conclusions. Four of them [11,17,27,32] find decreasing hazard rates (for a Weibull fit, a shape parameter below 1). Others find that hazard rates are flat [30] or increasing [26]. We find decreasing hazard rates with a Weibull shape parameter of 0.7-0.8.

Large-scale failure studies are scarce, even when considering IT systems in general and not just storage systems. Most existing studies are limited to only a few months of data, typically covering only a few hundred failures [13,20,21,26,30,32]. Many of the most commonly cited studies on failure analysis stem from the late 1980s and early 1990s, when computer systems were significantly different from today [9,10,12,17,18,19,30].

7 Conclusion

Many have pointed out the need for a better understanding of what disk failures look like in the field. Yet hardly any published work exists that provides a large-scale study of disk failures in production systems. As a first step towards closing this gap, we have analyzed disk replacement data from a number of large production systems, spanning more than 100,000 drives from at least four different vendors, including drives with SCSI, FC and SATA interfaces. Below is a summary of a few of our results.

- Large-scale installation field usage appears to differ widely from nominal datasheet MTTF conditions. The field replacement rates of systems were significantly larger than we expected based on datasheet MTTFs.

- For drives less than five years old, field replacement rates were larger than what the datasheet MTTF suggested by a factor of 2-10. For five to eight year old drives, field replacement rates were a factor of 30 higher than what the datasheet MTTF suggested.

- Changes in disk replacement rates during the first five years of the lifecycle were more dramatic than often assumed. While replacement rates are often expected to be in steady state in years 2-5 of operation (the bottom of the "bathtub curve"), we observed a continuous increase in replacement rates, starting as early as the second year of operation.

- In our data sets, the replacement rates of SATA disks are not worse than the replacement rates of SCSI or FC disks. This may indicate that disk-independent factors, such as operating conditions, usage and environmental factors, affect replacement rates more than component-specific factors. However, the only evidence we have of a bad batch of disks was found in a collection of SATA disks experiencing high media error rates. We have too little data on bad batches to estimate the relative frequency of bad batches by type of disk, although there is plenty of anecdotal evidence that bad batches are not unique to SATA disks.

- The common concern that MTTFs underrepresent infant mortality has led to the proposal of new standards that incorporate infant mortality [33]. Our findings suggest that the underrepresentation of the early onset of wear-out is a much more serious factor than the underrepresentation of infant mortality, and we recommend incorporating it into new standards.

- While many have suspected that the commonly made assumption of exponentially distributed time between failures/replacements is not realistic, previous studies have not found enough evidence to prove this assumption wrong with significant statistical confidence [8]. Based on our data analysis, we are able to reject the hypothesis of exponentially distributed time between disk replacements with high confidence. We suggest that researchers and designers use field replacement data, when possible, or two-parameter distributions, such as the Weibull distribution.

- The key features that distinguish the empirical distribution of time between disk replacements from the exponential distribution are higher levels of variability and decreasing hazard rates. We find that the empirical distributions are fit well by a Weibull distribution with a shape parameter between 0.7 and 0.8.

- We also present strong evidence for the existence of correlations between disk replacement interarrivals. In particular, the empirical data exhibits significant levels of autocorrelation and long-range dependence.
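The comparison between field replacement rates and datasheet MTTFs in the summary above rests on a simple conversion. The following is a rough sketch of that arithmetic, assuming the common conversion of an MTTF in hours to an annualized failure rate (hours per year divided by MTTF). The MTTF of 1,000,000 hours and the observed replacement rate of 3% are hypothetical inputs chosen for illustration, not values taken from a specific data set.

```python
HOURS_PER_YEAR = 24 * 365  # 8760 power-on hours per year


def datasheet_afr(mttf_hours):
    """Annualized failure rate implied by a datasheet MTTF.

    Assumes a constant failure rate over the year, which is the
    assumption the field data calls into question.
    """
    return HOURS_PER_YEAR / mttf_hours


def field_to_datasheet_ratio(observed_arr, mttf_hours):
    """How many times larger the observed annual replacement rate is."""
    return observed_arr / datasheet_afr(mttf_hours)


mttf = 1_000_000      # hypothetical datasheet MTTF in hours
observed_arr = 0.03   # hypothetical observed annual replacement rate (3%)

print(f"datasheet AFR: {datasheet_afr(mttf):.2%}")
print(f"field / datasheet: {field_to_datasheet_ratio(observed_arr, mttf):.1f}x")
```

With these inputs the datasheet figure is below 1% per year, so even a field replacement rate of a few percent per year is several times the datasheet expectation.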

8 Acknowledgments

We would like to thank Jamez Nunez and Gary Grider from the High Performance Computing Division at Los Alamos National Lab and Katie Vargo, J. Ray Scott and Robin Flaus from the Pittsburgh Supercomputing Center for collecting and providing us with data and helping us to interpret the data. We also thank the other people and organizations, who have provided us with data, but would like to remain unnamed. For discussions relating to the use of high end systems, we would like to thank Mark Seager and Dave Fox of the Lawrence Livermore National Lab. Thanks go also to the anonymous reviewers and our shepherd, Mary Baker, for the many useful comments that helped improve the paper.

We thank the members and companies of the PDL Consortium (including APC, Cisco, EMC, Hewlett-Packard, Hitachi, IBM, Intel, Network Appliance, Oracle, Panasas, Seagate, and Symantec) for their interest and support.

This material is based upon work supported by the Department of Energy under Award Number DE-FC02-06ER25767 (see footnote 2) and on research sponsored in part by the Army Research Office, under agreement number DAAD19-02-1-0389.

Footnotes

- 1: More precisely, we choose the cutoffs between the buckets such that each bucket contains the same number of samples (i.e. weeks) by using the 33rd percentile and the 66th percentile of the empirical distribution as cutoffs between the buckets.

- 2: This report was prepared as an account of work sponsored by an agency of the United States Government. Neither the United States Government nor any agency thereof, nor any of their employees, makes any warranty, express or implied, or assumes any legal liability or responsibility for the accuracy, completeness, or usefulness of any information, apparatus, product, or process disclosed, or represents that its use would not infringe privately owned rights. Reference herein to any specific commercial product, process, or service by trade name, trademark, manufacturer, or otherwise does not necessarily constitute or imply its endorsement, recommendation, or favoring by the United States Government or any agency thereof. The views and opinions of authors expressed herein do not necessarily state or reflect those of the United States Government or any agency thereof.

Bibliography

- [1] Personal communication with Dan Dummer, Andrei Khurshudov, Erik Riedel, Ron Watts of Seagate, 2006.

- [2] G. Cole. Estimating drive reliability in desktop computers and consumer electronics systems. TP-338.1. Seagate. 2000.

- [3] Peter F. Corbett, Robert English, Atul Goel, Tomislav Grcanac, Steven Kleiman, James Leong, and Sunitha Sankar. Row-diagonal parity for double disk failure correction. In Proc. of the FAST '04 Conference on File and Storage Technologies, 2004.

- [4] J. G. Elerath. AFR: problems of definition, calculation and measurement in a commercial environment. In Proc. of the Annual Reliability and Maintainability Symposium, 2000.

- [5] J. G. Elerath. Specifying reliability in the disk drive industry: No more MTBFs. In Proc. of the Annual Reliability and Maintainability Symposium, 2000.

- [6] J. G. Elerath and S. Shah. Server class drives: How reliable are they? In Proc. of the Annual Reliability and Maintainability Symposium, 2004.

- [7] Sanjay Ghemawat, Howard Gobioff, and Shun-Tak Leung. The Google file system. In Proc. of the 19th ACM Symposium on Operating Systems Principles (SOSP'03), 2003.

- [8] Garth A. Gibson. Redundant disk arrays: Reliable, parallel secondary storage. Dissertation. MIT Press. 1992.

- [9] J. Gray. Why do computers stop and what can be done about it. In Proc. of the 5th Symposium on Reliability in Distributed Software and Database Systems, 1986.

- [10] J. Gray. A census of tandem system availability between 1985 and 1990. IEEE Transactions on Reliability, 39(4), 1990.

- [11] T. Heath, R. P. Martin, and T. D. Nguyen. Improving cluster availability using workstation validation. In Proc. of the 2002 ACM SIGMETRICS international conference on Measurement and modeling of computer systems, 2002.

- [12] R. K. Iyer, D. J. Rossetti, and M. C. Hsueh. Measurement and modeling of computer reliability as affected by system activity. ACM Trans. Comput. Syst., 4(3), 1986.

- [13] M. Kalyanakrishnam, Z. Kalbarczyk, and R. Iyer. Failure data analysis of a LAN of Windows NT based computers. In Proc. of the 18th IEEE Symposium on Reliable Distributed Systems, 1999.

- [14] T. Karagiannis. Selfis: A short tutorial. Technical report, University of California, Riverside, 2002.

- [15] Thomas Karagiannis, Mart Molle, and Michalis Faloutsos. Long-range dependence: Ten years of internet traffic modeling. IEEE Internet Computing, 08(5), 2004.

- [16] Will E. Leland, Murad S. Taqqu, Walter Willinger, and Daniel V. Wilson. On the self-similar nature of ethernet traffic. IEEE/ACM Transactions on Networking, 2(1), 1994.

- [17] T.-T. Y. Lin and D. P. Siewiorek. Error log analysis: Statistical modeling and heuristic trend analysis. IEEE Transactions on Reliability, 39(4), 1990.

- [18] J. Meyer and L. Wei. Analysis of workload influence on dependability. In Proc. International Symposium on Fault-Tolerant Computing, 1988.

- [19] B. Murphy and T. Gent. Measuring system and software reliability using an automated data collection process. Quality and Reliability Engineering International, 11(5), 1995.

- [20] D. Nurmi, J. Brevik, and R. Wolski. Modeling machine availability in enterprise and wide-area distributed computing environments. In Euro-Par'05, 2005.

- [21] D. L. Oppenheimer, A. Ganapathi, and D. A. Patterson. Why do internet services fail, and what can be done about it? In USENIX Symposium on Internet Technologies and Systems, 2003.

- [22] David Patterson, Garth Gibson, and Randy Katz. A case for redundant arrays of inexpensive disks (RAID). In Proc. of the ACM SIGMOD International Conference on Management of Data, 1988.

- [23] E. Pinheiro, W. D. Weber, and L. A. Barroso. Failure trends in a large disk drive population. In Proc. of the FAST '07 Conference on File and Storage Technologies, 2007.

- [24] Vijayan Prabhakaran, Lakshmi N. Bairavasundaram, Nitin Agrawal, Haryadi S. Gunawi, Andrea C. Arpaci-Dusseau, and Remzi H. Arpaci-Dusseau. Iron file systems. In Proc. of the 20th ACM Symposium on Operating Systems Principles (SOSP'05), 2005.

- [25] Sheldon M. Ross. In Introduction to probability models. 6th edition. Academic Press.

- [26] R. K. Sahoo, A. Sivasubramaniam, M. S. Squillante, and Y. Zhang. Failure data analysis of a large-scale heterogeneous server environment. In Proc. of the 2004 International Conference on Dependable Systems and Networks (DSN'04), 2004.

- [27] B. Schroeder and G. Gibson. A large-scale study of failures in high-performance computing systems. In Proc. of the 2006 International Conference on Dependable Systems and Networks (DSN'06), 2006.

- [28] T. Schwarz, M. Baker, S. Bassi, B. Baumgart, W. Flagg, C. van Ingen, K. Joste, M. Manasse, and M. Shah. Disk failure investigations at the internet archive. In Work-in-Progress session, NASA/IEEE Conference on Mass Storage Systems and Technologies (MSST2006), 2006.

- [29] Nisha Talagala and David Patterson. An analysis of error behaviour in a large storage system. In The IEEE Workshop on Fault Tolerance in Parallel and Distributed Systems, 1999.

- [30] D. Tang, R. K. Iyer, and S. S. Subramani. Failure analysis and modelling of a VAX cluster system. In Proc. International Symposium on Fault-tolerant computing, 1990.

- [31] C. van Ingen and J. Gray. Empirical measurements of disk failure rates and error rates. In MSR-TR-2005-166, 2005.

- [32] J. Xu, Z. Kalbarczyk, and R. K. Iyer. Networked Windows NT system field failure data analysis. In Proc. of the 1999 Pacific Rim International Symposium on Dependable Computing, 1999.

- [33] Jimmy Yang and Feng-Bin Sun. A comprehensive review of hard-disk drive reliability. In Proc. of the Annual Reliability and Maintainability Symposium, 1999.


| Last changed: 14 Feb. 2007 ch |