All sessions will be held at the Georgia Tech Hotel and Conference Center

Friday, May 11, 2018

8:00 am–8:55 am

Continental Breakfast

8:55 am–9:00 am

Opening Remarks

9:00 am–10:00 am

Session 1

How to Successfully Harness Machine Learning to Combat Fraud and Abuse

Elie Bursztein, Google

While machine learning is integral to innumerable anti-abuse systems, including spam and phishing detection, the road to reaping its benefits is paved with numerous abuse-specific challenges.

Drawing from concrete examples, we will discuss how these challenges are addressed at Google and provide a roadmap to anyone interested in applying machine learning to fraud and abuse problems.

Elie Bursztein leads Google's anti-abuse research, which helps protect users against Internet threats. Elie has contributed to applied cryptography, machine learning for security, malware understanding, and web security, authoring over fifty research papers in the field. Most recently he was involved in finding the first SHA-1 collision. Elie is a beret aficionado, blogs at https://elie.net, tweets @elie, and performs magic tricks in his spare time. Born in Paris, he received a Ph.D. from ENS-Cachan in 2008 before working at Stanford University and ultimately joining Google in 2011. He now lives with his wife in Mountain View, California.

In Deep Trouble: Bot Prevention in the Age of Deep Learning

Jason Polakis, Assistant Professor, Computer Science Department, University of Illinois at Chicago

Recent advancements in deep learning have demonstrated impressive results. These techniques have direct application in many areas that rely on computer vision and speech recognition, and are being increasingly adopted by major tech companies. However, the wide availability of deep learning systems and online services has also rendered these capabilities freely accessible to cyber-criminals. In this talk I will focus on the significant impact of deep learning attacks against CAPTCHAs, which typically constitute the first line of defense against automated bot activities. To that end, I will present recent work in which we used deep learning services to deploy highly effective low-cost attacks against the most prevalent CAPTCHA services. First, I will outline our attacks against Google’s image ReCaptcha, the first CAPTCHA-breaking attack to extract semantic information from images. Next, I will detail how we used speech recognition services to create effective generic solvers for audio CAPTCHAs. Finally, I will discuss the long-term implications for CAPTCHA design, as we appear to have reached a point where attempting to distinguish humans from bots through straightforward tasks is unrealistic.

Jason Polakis is an Assistant Professor in the Computer Science Department at the University of Illinois at Chicago. He received his B.Sc. (2007), M.Sc. (2009), and Ph.D. (2014) degrees in Computer Science from the University of Crete, Greece, while working as a research assistant in the Distributed Computing Systems Lab at FORTH.

Prior to joining UIC, Jason was a postdoctoral research scientist in the Computer Science Department at Columbia University, and a member of the Network Security Lab.

10:00 am–10:30 am

Break with Refreshments

10:30 am–12:00 pm

Session 2

"You Can't Just Turn the Crank": Machine Learning for Fighting Abuse on the Consumer Web

David Freeman, Research Scientist/Engineer, Facebook

Fighting fake registrations, phishing, spam and other types of abuse on the consumer web appears at first glance to be an application tailor-made for machine learning: you have lots of data and lots of features, and you are looking for a binary response (is it an attack or not) on each request. However, building machine learning systems to address these problems in practice turns out to be anything but a textbook process. In particular, you must answer such questions as:

- How do we obtain quality labeled data?

- How do we keep models from "forgetting the past"?

- How do we test new models in adversarial environments?

- How do we stop adversaries from learning our classifiers?

In this talk I will explain how machine learning is typically used to solve abuse problems, discuss these and other challenges that arise, and describe some approaches that can be implemented to produce robust, scalable systems.

David Freeman is a research scientist/engineer at Facebook working on integrity and abuse problems. He previously led anti-abuse engineering and data science teams at LinkedIn, where he built statistical models to detect fraud and abuse and worked with the larger machine learning community at LinkedIn to build scalable modeling and scoring infrastructure. He has published numerous academic papers on aspects of computer security and recently co-authored a book on Machine Learning and Security, published by O'Reilly. He holds a Ph.D. in mathematics from UC Berkeley and did postdoctoral research in cryptography and security at CWI and Stanford University.

Impact of Bots on eCommerce and Bot Detection Methods

Tummalapalli Sudhamsh Reddy, Senior Data Scientist, Kayak Software

By some estimates, almost half of all web traffic is due to bots. Some search engines such as Google or Bing, as well as other affiliate sites, run bots that scrape eCommerce websites such as Wayfair or Amazon for information; these bots are encouraged, as they help drive traffic to the site. Other bots are much more malicious and focus on activities such as stealing content from the site via web scraping (price scraping or content scraping), form fraud, inventory locking, click fraud, credit card fraud, etc. This kind of malicious activity can skew website analytics, allow competitors to steal information, enable ad fraud, and degrade the user experience by generating traffic that increases server load, wastes bandwidth, and slows the site down.

In this talk we will focus on:

- Some of the impacts of such bots on eCommerce sites

- The different types of bots that exist

- Some features and methods that can be used to detect such bots

Dr. Tummalapalli Sudhamsh Reddy is a Senior Data Scientist at Kayak Software Corp, which is one of the leading travel metasearch engines in the world. Amongst his many roles at Kayak, Sudhamsh is responsible for bot detection and search results ranking. Before joining Kayak, Sudhamsh developed fraud detection models for detecting credit card fraud at ACI Worldwide Inc. He has previously been a guest scientist at Fermi National Accelerator Lab and Brookhaven National Lab.

Dr. Reddy obtained his M.S. and Ph.D. in Computer Science from the University of Texas at Arlington.

Automating the Discovery and Investigation of Targeted Attacks with AI and Machine Learning

Alejandro Borgia, VP Product Management, Security Analytics & Research, Symantec

Targeted attacks represent one of the most dangerous threats to enterprise security today. Yet they are often hidden from view under a mountain of alerts generated by security systems, giving attackers time to gain access to systems and seize valuable data. Symantec’s new Targeted Attack Analytics (TAA) technology leverages advanced machine learning to automate the discovery of targeted attacks, identifying truly targeted activity and prioritizing it in the form of a highly reliable incident report. TAA is the result of an internal joint effort between Symantec’s Attack Investigation Team and a team of Symantec’s top security data scientists on the leading edge of machine learning research. It analyzes a broad range of data from one of the largest threat data lakes in the world to automate targeted threat detection.

Alejandro Borgia leads Product Management for Symantec’s Security Analytics and Research division, which includes the company’s Security Technology and Response (STAR) organization, the Center for Advanced Machine Learning (CAML), and Shared Engineering Services (SES). STAR delivers the company’s industry-leading threat protection technologies, advanced security analytics, and investigations into new targeted attacks; CAML is Symantec’s center of excellence for advanced machine learning and artificial intelligence; and the Shared Engineering Services organization includes product security, product localization and internationalization, and engineering development tools.

12:00 pm–1:30 pm

Conference Luncheon

Sponsored by Google

1:30 pm–3:00 pm

Session 3

Security Data Science at Cloud Scale

Yogesh Roy, Principal Engineer, Microsoft

Hyperscale cloud computing platforms offer a rich set of services that handle billions of transactions and generate petabyte-scale logs. A single service like Azure Active Directory in Microsoft’s cloud handles 450 billion logins per month, generating over 10 petabytes of logs annually. The Azure Security Data Science team is tasked with detecting malicious activities and behaviors in our cloud services by employing data-driven approaches to security.

In this talk, we present three classes of problems. First, we show how to detect unusual login behavior in AAD, a foundational Azure service – which at first pass may look trivial, but at a scale of 5 billion enterprise logins per day, even a small false positive rate can cripple security analysts. We discuss our solution, which employs a combination of random walks and Markov chains to drastically reduce false positive rates. Next, we share our approach for combining detections from multiple Azure services in a graphical model, giving us the ability to detect complex multistage attacks in the cloud. Finally, we discuss approaches towards determining a user risk score, addressing questions like: How does one model risk? What are the different components that contribute to the riskiness of a user? We answer these questions by sharing our experience in building an unsupervised risk score function that integrates the various detections that we have.
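To make the scale argument concrete, the following is a minimal arithmetic sketch. Only the figure of 5 billion logins per day comes from the abstract; the false positive rates are assumed values chosen for illustration, and the function name `false_alerts_per_day` is hypothetical. It also assumes that nearly all logins are benign, so the false positive rate applies to the full volume.

```python
# Illustrative arithmetic only. The false positive rates below are assumed
# values for illustration, not figures reported in the talk.
LOGINS_PER_DAY = 5_000_000_000  # "5 billion enterprise logins per day" (from the abstract)


def false_alerts_per_day(false_positive_rate: float,
                         logins_per_day: int = LOGINS_PER_DAY) -> int:
    """Expected number of benign logins flagged per day at a given false positive rate.

    Assumes almost every login is benign, so the rate applies to the full volume.
    """
    return round(false_positive_rate * logins_per_day)


# Print the expected daily false-alert volume for a few assumed rates.
for rate in (1e-3, 1e-4, 1e-6):
    print(f"FPR {rate:.4%}: {false_alerts_per_day(rate):,} false alerts per day")
```

Under these assumptions, even a rate of one false positive in ten thousand logins would produce hundreds of thousands of alerts per day, which illustrates why the abstract stresses reducing false positives.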

Yogesh Roy is a Principal Applied Machine Learning Manager in the Azure Security Division, working at the intersection of machine learning and cloud security and developing solutions to protect Microsoft cloud customers on services like Azure Active Directory, Azure Resource Manager, Azure Information Protection, KeyVault, etc. He has 20+ years of experience in the software industry, working on areas spanning distributed high performance computing, cloud services & security, information retrieval, ranking and relevance, and mobile computing. He has worked on many initiatives leveraging machine learning techniques for extracting value out of big data. At Microsoft, he also worked on Bing search, where he was involved in delivering new search page experiences and improving the ranking and relevance for deep links on the search results.

Structure2vec: Deep Learning for Security Analytics over Graphs

Le Song, Associate Director, Center for Machine Learning, Georgia Institute of Technology, and Alibaba

Networks and graphs are prevalent in many real-world applications such as online social networks, transactions in payment platforms, user-item interactions in recommendation systems, and relational information in knowledge bases. The availability of large amounts of graph data has posed great new challenges. How can we represent such data to capture similarities or differences between the entities involved? How can we learn predictive models and perform reasoning based on a large amount of such data? Previous deep learning models, such as CNNs and RNNs, are designed for images and sequences, but they are not applicable to graph data.

In this talk, I will present an embedding framework, called Structure2Vec, for learning representations of graph data in an end-to-end fashion. Structure2Vec provides a unified framework for integrating information from node characteristics, edge features, heterogeneous network structures, and network dynamics, and linking them to downstream supervised and unsupervised learning, and reinforcement learning. I will also discuss several applications in security analytics where Structure2Vec leads to significant improvement over the previous state of the art.

Le Song is an Associate Professor in the Department of Computational Science and Engineering, College of Computing, and an Associate Director of the Center for Machine Learning, Georgia Institute of Technology. Le is also working with the Ant Financial AI Department on risk management, security, and finance-related problems. He received his Ph.D. in Machine Learning from the University of Sydney and NICTA in 2008, and then conducted his post-doctoral research in the Department of Machine Learning, Carnegie Mellon University, between 2008 and 2011. Before he joined Georgia Institute of Technology in 2011, he was briefly a research scientist at Google. His principal research direction is machine learning, especially nonlinear models, such as kernel methods and deep learning, and probabilistic graphical models for large-scale and complex problems arising from artificial intelligence, network analysis, and other interdisciplinary domains. He is the recipient of the Recsys '16 Deep Learning Workshop Best Paper Award, AISTATS '16 Best Student Paper Award, IPDPS '15 Best Paper Award, NSF CAREER Award '14, NIPS '13 Outstanding Paper Award, and ICML '10 Best Paper Award. He has also served as an area chair or senior program committee member for many leading machine learning and AI conferences such as ICML, NIPS, AISTATS, AAAI, and IJCAI. He is also an action editor for JMLR and an associate editor for IEEE PAMI.

Convicted by Memory: Recovering Spatial-Temporal Digital Evidence from Memory Images

Brendan Saltaformaggio, Assistant Professor, School of Electrical and Computer Engineering, Georgia Institute of Technology

Memory forensics is becoming a crucial capability in modern cyber forensic investigations. In particular, memory forensics can reveal "up to the minute" evidence of a device's usage, often without requiring a suspect's password to unlock the device, and it is oblivious to any persistent storage encryption schemes. Prior to my work, researchers and investigators alike considered raw data-structure recovery the ultimate goal of memory forensics. This, however, was far from sufficient as investigators were still largely unable to understand the content of the recovered evidence; hence, unlocking the true potential of such evidence in memory images remained an open research challenge.

In this talk, I will focus on my research efforts which break from traditional data-recovery-oriented forensics and instead leverage program analysis to automatically locate, reconstruct, and render spatial-temporal evidence from memory images. I will describe the evolution of this work, starting with the reuse of binary program components to overcome the burden of recovering and understanding highly probative data structures, e.g., photos, chat contents, and edited documents. Then, shifting away from the recovery of data structures, I will introduce spatial-temporal evidence recovery, culminating in the instrumentation of program executions to recreate full sequences of previous smartphone app screens, all from only a single snapshot of a device's memory. Finally, to highlight the role of memory forensics in my overall research agenda, I will briefly present my ongoing and future work in integrated cyber/cyber-physical attack defense and forensics.

Dr. Brendan Saltaformaggio is an Assistant Professor in the School of Electrical and Computer Engineering at Georgia Tech. He is the Director of the Cyber Forensics Innovation (CyFI) Laboratory, whose mission is to further the investigation of advanced cyber crimes and the analysis and prevention of next-generation malware attacks, particularly in mobile and IoT environments. This research has led to numerous publications at top cyber security venues, including a Best Student Paper Award from the 2014 USENIX Security Symposium.

3:00 pm–3:30 pm

Break with Refreshments

3:30 pm–5:15 pm

Session 4

Enterprise Scale Machine Learning For Detecting Email Based Attacks

Bayan Bruss, Senior Machine Learning Engineer, Capital One

Email is an effective means for perpetrating cyber attacks on organizations as the attack surface tends to be broad and easily exploitable. Email-based attacks can result in credential harvesting, drive-by downloads, and other malicious activities such as executive spoofing. In many organizations attackers easily avoid commercial email filters. This moves the front line of defense to a company’s employees, who must report potentially malicious emails. Studies have shown that even immediately after receiving training, the average employee still misses more than 30% of phishing attempts. Furthermore, even if they do detect a malicious email, there is no guarantee that they will report the incident. To address these problems, Capital One has built an enterprise-scale detection system that uses Machine Learning to identify the highest priority attacks that have evaded existing defenses and send them to trained analysts for validation and remediation. The validated data feeds back into the ML system, allowing it to dynamically adapt to changes in the threat landscape. The initial version of this system has been deployed for the Cybersecurity Operations Center (CSOC) on the subset of emails reported by Capital One employees. This set of emails is overwhelmingly (95%) harmless marketing emails, with roughly 5% being malicious emails. This makes it hard for analysts to spend the time needed on the more urgent cases. The system described here classifies each email as either malicious or benign by analyzing body text, embedded URLs, sender information, and headers. The system automatically generates analyst alerts in commonly used platforms such as Slack and ticketing systems like Jira. Current model results allow a reduction in analyst workload of over 75%.

The success of this initial use case sets Capital One up for the development of an enterprise-scale platform inspecting all inbound emails, with a wide variety of Machine Learning-enabled security tasks currently under development. These include automated HTML content analysis, attachment analysis, image classification, and a broad suite of URL enrichments and classifications that leads into comprehensive infrastructure analysis. Furthermore, Capital One intends to leverage this broad set of capabilities across the spectrum of attack vectors as appropriate.

Bayan is a Senior Machine Learning Engineer within Capital One’s Center For Machine Learning. He leads a team of Machine Learning Engineers developing machine learning based cybersecurity products to strengthen Capital One’s cyber defenses and respond to a dynamic threat landscape. Bayan’s team currently focuses on building a machine learning platform for identifying phishing & other malicious emails entering Capital One. Prior to Capital One, Bayan worked as a Data Science and Big Data engineering consultant with Accenture. There he worked on a range of projects across the Federal and Financial Services industries. He is broadly interested in NLP, and has conducted research on the cross section of social psychology, culture and computational linguistics.

Security Alerting and Event Management in the Era of Machine Learning: Our Experience in the Industry

Flavio Villanustre, CISO and VP of Technology, LexisNexis® Risk Solutions

Gone are the days when prevention almost guaranteed complete risk mitigation. While prevention is still paramount, early detection and containment/attack mitigation are critical to any reasonable information security program and to the long-term survivability of any organization. Moreover, systems have become increasingly complex and bad actors have developed more sophisticated attacks, introducing new challenges and rendering traditional Security Incident Event Management solutions far less effective. However, there are new techniques in the toolbox of the information security practitioner which can help overcome some of these obstacles and level the field in this asymmetric cyber-warfare. In particular, unsupervised and semi-supervised learning techniques, both from traditional machine learning and from deep learning algorithms, can be used to more effectively identify known and novel attacks and reduce the burden on rule developers. During this presentation, we will introduce the audience to our experience evolving our security incident event management to cope with the modern threat landscape.

Dr. Flavio Villanustre is CISO and VP of Technology for LexisNexis® Risk Solutions. He also leads the open source HPCC Systems® platform initiative, which is focused on expanding the community gathering around the HPCC Systems Big Data platform, originally developed by LexisNexis Risk Solutions in 2001 and later released under an open source license in 2011. Flavio’s expertise covers a broad range of subjects, including hardware and systems, software engineering, and data analytics and machine learning. He has been involved with open source software for more than two decades, founding the first Linux users’ group in Buenos Aires in 1994.

Panel: AI in Security, Gaps Between Theory and Practice. Demonstrating Value to Customers

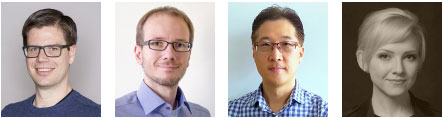

Adam Hunt, Chief Data Scientist, RiskIQ; Sven Krasser, Chief Scientist, CrowdStrike; Sean Park, Senior Malware Scientist, Trend Micro; Kelly Shortridge, Product Manager, SecurityScorecard

As Chief Data Scientist, Adam Hunt leads the data science & data engineering teams at RiskIQ. Adam pioneers research automating detection of adversarial attacks across disparate digital channels including email, web, mobile, and social media. Adam has received patents for identifying new external threats using machine learning. Adam received his Ph.D. in experimental particle physics from Princeton University. As an award-winning member of the CMS collaboration at the Large Hadron Collider, he was an integral part in developing the online and offline analysis systems that led to the discovery of the Higgs Boson.

Dr. Sven Krasser currently serves as Chief Scientist at CrowdStrike where he leads the machine learning efforts utilizing CrowdStrike’s Big Data information security platform. He has productized machine learning-based systems in cybersecurity for over a decade and most recently led the research and development of the first fully machine learning-based anti-malware engine featured on VirusTotal. Dr. Krasser has authored numerous peer-reviewed publications and is co-inventor of more than two dozen patented network and host security technologies.

Sean Park is Senior Malware Scientist within Trend Micro’s Machine Learning Group, an elite team of researchers solving highly difficult problems in the battle against cybercrime. His main research focus is deep learning based threat detection including generative adversarial malware clustering, metamorphic malware detection using semantic hashing and Fourier transform, malicious URL detection with attention mechanism, OS X malware outbreak detection, semantic malicious script autoencoder, and heterogeneous neural network for Android APK detection. He previously worked for Kaspersky, FireEye, Symantec, and Sophos. He also created a critical security system for banking malware at a top Australian bank.

Kelly Shortridge is currently a Product Manager at SecurityScorecard, the security risk management platform. In her spare time, she researches applications of behavioral economics and behavioral game theory to information security, on which she’s spoken at conferences internationally, including Black Hat, Hacktivity, Troopers, and ZeroNights. Previously, Kelly was the Product Manager for cross-platform Detection capabilities at BAE Systems, within the Applied Intelligence division, and also co-founded a mobile monitoring and access control startup called IperLane, where she served as COO for almost two years. Prior to IperLane, Kelly was an investment banking analyst at Teneo Capital, responsible for coverage of the data security, intelligence and analytics sectors, advising clients on M&A and capital raising assignments.