Vanessa Teague, Kim Ramchen and Lee Naish

Department of Computer Science and Software Engineering

The University of Melbourne

In elections of all kinds it is important that voters are free of coercion, votes are tallied correctly and this is seen to be the case. Electronic voting could improve the situation: some schemes offer universal verifiability, meaning that anyone can check that the announced tally is correct. Ideally it should be unnecessary to trust either the implementors of the program or the security of the computer used for voting. However, electronic voting systems must take particular care to prevent a voter from being able to prove to a coercer how they voted. This property is known as ``receipt freeness'' or ``coercion resistance''. Without it, a coercer can either promise to reward a voter if they show they voted in a particular way or threaten to cause harm to them if they do not.

Although many electronic voting systems are designed for first-past-the-post voting, the best voting schemes are those that allow voters to express more than simply their single favourite candidate. The tally method we target is known as Single Transferrable Vote (STV) 1. It can be used to fill single or multiple vacancies and with the latter, achieves a form of ``proportional representation''. The multiple-vacancy case is used in Australia, Ireland and Cambridge MA. It is particularly susceptible to coercion and is the main focus of this paper. Single-seat STV is more widespread, with uses including the London Mayoral race and various other examples throughout the United States and the British Commonwealth. In this case, the coercion problem might still apply if there are many candidates. Hence our algorithm (with an obvious simplification) might still be useful.

If votes contain little information, for example, they are just ``yes''

or ``no'', or a choice of one of a small number of possible candidates, they cannot be used

to identify individual voters. However, with STV

a vote can specify any permutation of the candidates; this has much

greater information content. Hence the

``short ballot assumption'' [RS07] fails even when there are

not very many candidates. If all

individual votes are ultimately revealed then this introduces a coercion

problem, sometimes called the ``Italian attack''.

For example, voters can be coerced to

give a particular candidate their first preference,

then the remaining preferences can be used to encode

the identity of the voter. (A

typical Australian Senate election has about 70

candidates, so there are ![]() different possible votes.) It is easy for a

coercer to choose one vote that is very unlikely to occur, demand that a voter

cast that particular vote, and then look through the ballots to see

whether that vote appears. Indeed, there are so many possible votes that a

coercer can choose a different required vote for a very large number of voters

even when imposing some political constraints on the votes, such as only

requiring those with the coercer's favourite candidate first.

Although this method of coercion requires work for the coercer prior to the vote (so a mapping from voters to

permutations can be established), it is feasible and the risk has been described in the voting

literature [Ott03]. The problem has created a tradeoff between verifiability

and coercion-resistance for paper-based STV elections,2 but it applies even more strongly to electronic

systems in which votes are printed on a bulletin board. Complete disclosure of all ballots exposes

the voters to coercion, but incomplete exposure can make verification impossible without

cryptography.

different possible votes.) It is easy for a

coercer to choose one vote that is very unlikely to occur, demand that a voter

cast that particular vote, and then look through the ballots to see

whether that vote appears. Indeed, there are so many possible votes that a

coercer can choose a different required vote for a very large number of voters

even when imposing some political constraints on the votes, such as only

requiring those with the coercer's favourite candidate first.

Although this method of coercion requires work for the coercer prior to the vote (so a mapping from voters to

permutations can be established), it is feasible and the risk has been described in the voting

literature [Ott03]. The problem has created a tradeoff between verifiability

and coercion-resistance for paper-based STV elections,2 but it applies even more strongly to electronic

systems in which votes are printed on a bulletin board. Complete disclosure of all ballots exposes

the voters to coercion, but incomplete exposure can make verification impossible without

cryptography.

Our approach is to perform the entire tallying algorithm on encrypted votes. We use homomorphic encryption to tally the votes' first preferences. The main shortcoming of our scheme is that there is a single electoral authority who learns the contents of each vote, though it does not learn the correspondence between voters and their votes. This means that we are trusting the authority not to collude with the coercer in an Italian attack. The authority produces a transcript in which each step of the tallying process can be independently verified while revealing very little information, thus reducing the opportunities for coercion. We define a new notion of coercion-resistance for STV and prove that, under reasonable assumptions, the scheme can be parameterised to be coercion resistant. The initial table of encrypted votes could be derived from an electronic voting scheme, or from inputting and then encrypting paper ballots (though verification of the latter would be difficult). One example of an end-to-end verifiable election scheme that produces the right format is contained in Appendix A. We have implemented our scheme and tested it on a subset of the real data for the Victorian State election. Although the computation is expensive, it is feasible for real elections. See section 4.3 for details.

This section gives an overview of STV and existing work on coercion-resistant STV tallying. Section 2 gives examples of coercion that can still occur even under (some of) these coercion-resistant schemes. Section 3 presents our main contribution, an algorithm for provably correct STV tallying that reveals very little unnecessary information. Analysis appears in Section 4, with our new definition in Section 4.1 and the sketch of a security proof in Section 4.2.

Assume we have

cands

candidates,

seats

seats to be filled and

![]() votes.

At any point during the tallying process some candidates have been declared

elected and some have been eliminated; the

other candidates are known as continuing candidates. Initially

all candidates are continuing. The algorithm terminates when

seats

candidates

have been elected or

cands

votes.

At any point during the tallying process some candidates have been declared

elected and some have been eliminated; the

other candidates are known as continuing candidates. Initially

all candidates are continuing. The algorithm terminates when

seats

candidates

have been elected or

cands![]() seats

have been eliminated. A candidate is elected

if they obtain at least a quota of votes. The quota is

usually

seats

have been eliminated. A candidate is elected

if they obtain at least a quota of votes. The quota is

usually

![]() seats

seats![]() . This is just

large enough to ensure it is impossible to elect

seats

. This is just

large enough to ensure it is impossible to elect

seats![]() candidates.

Initially all votes are distributed according to their first preference and all

have weight 1.

candidates.

Initially all votes are distributed according to their first preference and all

have weight 1.

The algorithm consists of repeatedly (re)distributing votes according to the vote's highest preference which is for a continuing candidate. Each candidate's tally is the sum of the weights of the votes that are distributed to them.

There are many variations,3 The details of our implementation are described in Section 3.2.1.

There are a number of existing cryptographic schemes for verifiable STV tallying that limit the information revealed. Heather [Hea07] describes how to implement STV counting securely in Prêt à Voter. McMahon [McM] and Goh & Gollé [GG05] describe structures for secure STV tallying that are not attached to a particular electronic voting scheme. None of these allows the re-weighting necessary for the multiple-seat case, though a recent unpublished scheme by Benaloh and Moran [BM] does. More subtly, every one of these works reveals every candidate's running tally after every redistribution, which still leaves open the possibility of coercion. This is described in Sections 2.2 and 2.3. (Though [BM] could probably be modified to hide this information in the same way we do. Since the details of this scheme are unpublished as yet, we do not know how it compares in efficiency or security to ours.) A similar coercion problem was addressed for the Condorcet voting scheme in [CM05], but their techniques do not extend to STV. The main advantages of our scheme are that it can perform the re-weighting step and hence tally multiple-seat races, and that it reveals much less information than other schemes.

We know of no existing definition of privacy for electronic voting that explicitly considers the possibility that just revealing anonymised votes allows coercion. Crutchfield et. al. [CMT06] consider the privacy violations of publically-released voting statistics, but their model is specifically for single-selection voting schemes, so it does not apply to our case. (They are interested in cases where precinct-based election results are unanimous or nearly so.) Previous cryptography literature has concentrated on receipt freeness, which means that a voter cannot prove to the coercer how she voted, even if she wants to. Often this is used interchangably with the term coercion resistance, introduced in [JCJ05], though sometimes a distinction is made between a coercer who can communicate with the voter before the election (requiring coercion resistance) and one who cannot (requiring receipt-freeness, which is then a weaker property). In this paper we concentrate on the stronger requirement, and call it coercion resistance. We assume the existence of an untappable channel between a voter and the authorities, implemented as a private voting booth at a polling place, so the coercer cannot communicate with the voter during the election. In this sense our definition is weaker than that of [JCJ05]. A definition by Moran and Naor [MN06] (which extends [CG96] by Canetti and Gennaro) uses an ideal functionality. They define a scheme to be coercion resistant if any successful coercion attack against it would also succeed against the ideal functionality. Since they deal only with first-past-the-post elections, they simply choose an ideal functionality that reveals all the (anonymised) votes. For STV, defining the ideal functionality is much harder: as we have just argued, an ideal functionality that revealed all the votes would expose voters to coercion via the Italian attack. The simplest one for STV would be one that outputs the set of winners and nothing else, though this is probably overly restrictive, making the problem too difficult and denying voters access to statistics that they may be interested in. In general the question of whether a particular scheme securely implements a particular ideal functionality is independent of the question of whether coercion is still possible under that functionality. In Section 4.1, we provide a definition of coercion resistance that is complementary to Moran and Naor's, in that it deals directly with whether coercion is possible given that the coercer receives certain information. It is based on one by Okamoto [Oka97]. In order to prove that an end-to-end voting scheme were coercion resistant, one could first prove that it implemented a particular ideal functionality according to the coercion-resistance definition of [MN06], then prove that that functionality was coercion resistant in the sense we define here.

In Section 4 we argue informally that our algorithm only reveals certain information, then we prove that that functionality is coercion resistant according to our definition.

In some jurisdictions, including Ireland, surpluses are redistributed by

randomly sampling some

of the votes for the elected candidate. This makes this particular kind of

coercion less effective, but it is still vulnerable to a closely related kind: the

coercer demands that the voter put

![]() after the list of unlikely candidates.

This is a riskier strategy, but still likely to succeed

even with many coerced voters (say about

after the list of unlikely candidates.

This is a riskier strategy, but still likely to succeed

even with many coerced voters (say about ![]() ). If none of

the unlikely candidates

are elected, then the coerced voter's vote passes to

). If none of

the unlikely candidates

are elected, then the coerced voter's vote passes to

![]() with full weight and has all previous preferences revealed.

with full weight and has all previous preferences revealed.

This form of coercion only works if there are are reasonable number of hopeless candidates, that is, candidates which, when eliminated, are unlikely to effect the tally of many other candidates. In the last Australian federal election, 27 candidates for the Victorian Senate seats received fewer than 100 first-preference votes. When these were eliminated, it was common for many of the other tallies to remain constant, even after several candidates had been eliminated.

Let ![]() be the number of hopeless candidates--we will

assume

be the number of hopeless candidates--we will

assume ![]() .

Here are some examples of how a coercer could make voters

pass their preferences to candidate

.

Here are some examples of how a coercer could make voters

pass their preferences to candidate

![]() :

:

The extent of this problem depends on the probability distribution of all votes. Again the probabilities involved are small, but not negligible, and could possibly be used to coerce a small number of voters and discredit the election.4 For a practical example, in the 2004 Australian Federal election, in the state of Victoria, 15 candidates' tallies did not increase when the first two elected candidates' votes were redistributed. Since the transfer values were 0.67533384 and 0.60324735, this fact would have been evident from their tallies alone, at least with some degree of confidence, even if running tallies were not revealed.

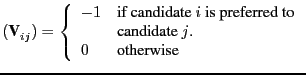

Let

cands

be the number of candidates. A vote consists of a

weight ![]() and a

cands

and a

cands![]() cands

matrix

cands

matrix

![]() with each cell separately

encrypted.

The diagonal of the matrix is unimportant and can be omitted.

For non-diagonal values (with

with each cell separately

encrypted.

The diagonal of the matrix is unimportant and can be omitted.

For non-diagonal values (with ![]() ), the

interpretation of the matrix is that

), the

interpretation of the matrix is that

The vote can be summarised in a vote summary vector ![]() of which the

of which the ![]() -th element

-th element ![]() is

is

The vote summary is (an encrypted form of) the vote traditionally cast in STV, except that the counting starts from zero: the most preferred candidate gets 0, the next gets 1, and so on until the least-favoured candidate gets cands

This means that redistributions can be performed without revealing which

votes are being redistributed and without doing any mixing. An example is shown

in Figure 1. (The values are shown in cleartext but would be

encrypted). The

authority then transforms the vote summary

into a form in which each candidate's first preference vote can be tallied

homomorphically, then declares and proves which candidate(s) should be elected

or eliminated.

The difficulty in the multiple-seat case is redistribution after an election,

which requires multiplying the weights on votes by

non-integer factors. The homomorphic nature of the encryption scheme

gives us (reasonably) efficient multiplication of the encrypted value

by an integer. Division is a

problem, because in general it requires finding roots, a hard

problem. We avoid division as follows.

Approximate each redistribution

factor by an integer ![]() divided by some fixed denominator

divided by some fixed denominator ![]() . For

example, to approximate it to 3 decimal places, choose

. For

example, to approximate it to 3 decimal places, choose ![]() .

(This is the method recommended by the Proportional

Representation Society of Australia.) Each vote begins with a weight

of 1, but upon a candidate's election all votes for that candidate have their

weights multiplied by

.

(This is the method recommended by the Proportional

Representation Society of Australia.) Each vote begins with a weight

of 1, but upon a candidate's election all votes for that candidate have their

weights multiplied by ![]() and all other votes' weights are multiplied by

and all other votes' weights are multiplied by

![]() .

The EC generates a fresh encryption

of the new weight and proves it correct

with standard (honest-verifier)

zero knowledge proofs (Step 3).

The algorithm is described compeletely in

Section 3.

.

The EC generates a fresh encryption

of the new weight and proves it correct

with standard (honest-verifier)

zero knowledge proofs (Step 3).

The algorithm is described compeletely in

Section 3.

|

Although the basic idea of STV is simple, the details are complicated in practice. Indeed, intense debate rages over the best methods for breaking ties and deciding where to redistribute votes (see [Hil07] for one example of debate over the best method for counting computerised STV votes). Most variants are shortcuts which facilitate counting by hand. Even now, with computerised counting almost ubiquitous, outdated legislation often requires these shortcuts to be performed electronically. If necessary, our scheme could be modified to incorporate many of the common counting rules, but we would strongly advocate modifying the legislation instead. We have implemented the following variants:

The last assumption is important--at several points the proof of correct tallying depends upon it. However, some jurisdictions do allow voters to stop before numbering all candidates. Heather [Hea07] suggests including a ``STOP'' candidate who is never elected or eliminated. If we were to do this, we would have to modify some of the tallying proofs and introduce an explicit count of how many votes had been exhausted, so that the quota could be appropriately reduced.

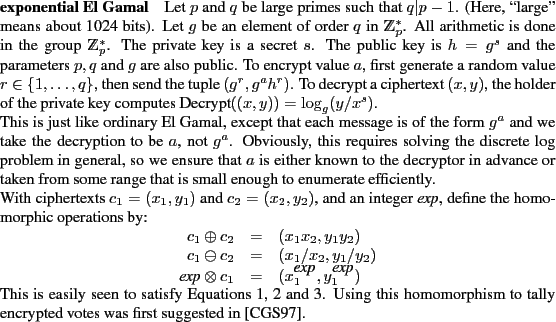

This automatically allows multiplication by a constant, which we denote

by ![]() .

.

We also need the following well-known zero knowledge proofs. In this paper, we require a trusted source of random challenges. This could be obtained from a trusted beacon, or generated jointly by a number of participants. The easiest method is the Fiat-Shamir heuristic [FS87], hashing the input to the proof. We can then use proofs that are merely honest-verifier zero knowledge.

El Gamal encryption satisfies these requirements, and we have implemented our scheme using both standard and Elliptic Curve El Gamal. The latter is much more efficient, being feasible for Australian State elections and close to feasible for federal ones. See Section 4.3 for details. The Paillier encryption scheme also has the required homomorphism and efficient proofs, but we have not implemented it yet.

We begin with a public table of votes. Everyone agrees that this is the true list of all valid votes cast in the election. The encrypted votes are not linked to voter identities in any way. (A technical point: this means that the voters can't know the randomness used to encrypt their votes, or they are subject to coercion before we begin.) One way of verifiably reaching this point, based on Heather's modifications to Prêt à Voter [Hea07], is contained in Appendix A. Perhaps there is some alternative based on public checking of the input and encryption of paper ballots. This is clearly inferior from a security perspective, but it would be very simple to add on to the existing Australian electoral processes without rolling out an end-to-end verifiable voting scheme.

The votes are encrypted with the public key of the Electoral Commission (EC hencefoward), the semi-trusted authority which carries out the tallying. The EC is trusted not to be a coercer, and not to reveal plaintext votes to the coercer or anyone else. It is not trusted to count correctly, and it does not learn the correspondence between votes and individual voters.

All votes must be valid and complete permutations. In our complete system described in Appendix A, this fact is proved by the Electoral Commission before counting commences. The system's security is based on the semantic security of (Elliptic Curve) El Gamal encryption.

For counting, the vote summary is transformed into a tallyable vote

![]() in which the

in which the ![]() -th element

-th element ![]() satisfies

satisfies

The EC produces this tallyable vote and then proves its correctness. It suffices to give (honest-verifier) zero knowledge proofs that

These are straightforward applications of Proof 1 and Proof 3 respectively.

The current tallies are contained in the encrypted tally vector

![]() , with

, with

![]() being an encryption of candidate

being an encryption of candidate ![]() 's tally,

i.e. weighted total votes after reweighting and

redistribution.

8

When

's tally,

i.e. weighted total votes after reweighting and

redistribution.

8

When ![]() candidates have been elected and their votes

redistributed, all tallies are

candidates have been elected and their votes

redistributed, all tallies are ![]() times the real tally (as in a traditional paper-based count). Obviously this

means that the necessary quota is the real quota times

times the real tally (as in a traditional paper-based count). Obviously this

means that the necessary quota is the real quota times ![]() .

Recall that

.

Recall that ![]() is the number of votes.

The maximum tally at any point is

is the number of votes.

The maximum tally at any point is ![]() , and

the next power of 2 is

, and

the next power of 2 is

![]() ,

which we denote by

MaxTally

,

which we denote by

MaxTally![]() .

Whenever we require a proof that some encrypted tally is nonnegative we use

Proof 2 and prove that the value is in the range

.

Whenever we require a proof that some encrypted tally is nonnegative we use

Proof 2 and prove that the value is in the range

![]() MaxTally

MaxTally![]() .

9

.

9

The tallying algorithm is paramaterised by ![]() . Although it is written as a

series of computations for the EC, many of the steps can be performed by any

observer--these are preceded by [public]. Obviously such computations do not

reveal any information, even when performed by the EC.

. Although it is written as a

series of computations for the EC, many of the steps can be performed by any

observer--these are preceded by [public]. Obviously such computations do not

reveal any information, even when performed by the EC.

Each step is either an election or an elimination, followed by a redistribution. The algorithm is as follows:

Recall that the EC knows the decryption of each value in

![]() (without

having to run the decryption algorithm).

(without

having to run the decryption algorithm).

More formally, let ![]() be the quota. Recall that

be the quota. Recall that ![]() is the number of

candidates elected before this step. To show that candidate

is the number of

candidates elected before this step. To show that candidate ![]() does not have a quota,

does not have a quota,

It then identifies the candidate

![]() that should be eliminated.

that should be eliminated.

Recall that we refer to candidates by an index number, and break ties by

index number, so there

are different facts to be proved for the other candidates' tallies, depending

on whether the other candidate has a higher or lower index number.

For each continuing candidate ![]() with a higher index number than

with a higher index number than

![]() , the EC proves that

, the EC proves that ![]() has a strictly higher tally as follows:

it subtracts

has a strictly higher tally as follows:

it subtracts

![]() 's (encrypted) tally from

's (encrypted) tally from ![]() 's minus 1 and

proves that the result encrypts a non-negative number.

More formally, to show that candidate

's minus 1 and

proves that the result encrypts a non-negative number.

More formally, to show that candidate

![]() has a strictly lower tally

than anyone with higher index number,

it proves in Zero Knowledge using

Proof 2 for all candidates

has a strictly lower tally

than anyone with higher index number,

it proves in Zero Knowledge using

Proof 2 for all candidates

![]() , that

, that

Similarly, for each candidate with a lower index number than

and

This shows that the tally is within the range of values for which

| 0 and | |||

| Decrypt |

Decrypt |

||

| or | |||

| 0 and | |||

| Decrypt |

Decrypt |

This implies that either the vote is being redistributed and its weight was correctly changed, or it is not being redistributed and its weight effectively remained the same.

Having demonstrated that coercion can be effective even when only limited information is revealed, we now define coercion resistance more formally. We imagine an attack model in which the coercer communicates with the voter before and possibly after the election, and also receives all public information published during the election. In our case, this is at least the transcript of the public tallying process. The coercer is trying to make the voter cast a vote with some particular property, such as putting one party ahead of another, or putting a certain candidate first. Obvious special cases are that the coercer specifies the vote entirely, or tries to prevent someone from voting. Throughout the following discussion, we consider informal voting (including abstaining) to be a special kind of vote and incorporate it into our definition of coercion-resistance. The scheme is resistant to coercion if the voter can be confident that the coercer will be unsure of whether the voter obeyed their demand or not.

Even when the coercer is only attempting to

coerce one voter, no voting scheme can be entirely secure against coercion

because simply publicising the result could be enough to inform the

coercer that the voter did not vote as required. For example, if the coercer

demands a vote for candidate ![]() and the tally shows that nobody voted

for them, then it is obvious that the voter disobeyed. This problem is

exacerbated when the coercer knows some of the votes (because of fraud or

because they are cast by political partisans). However, in a reasonably

large election this sort of thing is unlikely to reveal disobedience

decisively. Furthermore, the voter

does not have to be absolutely certain that disobedience will be undetectable.

She just has to consider the probability to be very high. Exactly how high

depends on the voter's political preferences and the mode of coercion.

For example, if

she has a strong political preference against the coercer's party and the

method of coercion is to offer her a small amount of money, then she may be

willing to accept quite a high probability of being caught disobeying; if

she is indifferent anyway and the coercer threatens to shoot her if she

disobeys, she will require an extremely low probability of being

caught.

and the tally shows that nobody voted

for them, then it is obvious that the voter disobeyed. This problem is

exacerbated when the coercer knows some of the votes (because of fraud or

because they are cast by political partisans). However, in a reasonably

large election this sort of thing is unlikely to reveal disobedience

decisively. Furthermore, the voter

does not have to be absolutely certain that disobedience will be undetectable.

She just has to consider the probability to be very high. Exactly how high

depends on the voter's political preferences and the mode of coercion.

For example, if

she has a strong political preference against the coercer's party and the

method of coercion is to offer her a small amount of money, then she may be

willing to accept quite a high probability of being caught disobeying; if

she is indifferent anyway and the coercer threatens to shoot her if she

disobeys, she will require an extremely low probability of being

caught.

The following definition assumes a voting system whose outcome is a

symmetrical function of the votes. It assumes that a voter can ``cheat''

the coercer only by submitting a different vote, not, for example, by

modifying the algorithm of some Internet voting scheme. (This is

an assumption about the vote-input scheme that precedes our tallying step.

It would have to be proven to implement an ideal functionality as

in [MN06].) We define

the scheme to be

secure if the coerced voter can undetectably submit whatever vote she

chooses, regardless of what the coercer intended.

We also assume that the voter and the coercer have a common prior probability

distribution ![]() on the set of other votes. This may include, for

example,

knowing for sure that a certain number of voters will vote in a particular

way. Define the coercer's view

VIEW

on the set of other votes. This may include, for

example,

knowing for sure that a certain number of voters will vote in a particular

way. Define the coercer's view

VIEW![]() to be everything that the

coercer generates, computes or observes before, during, or after the election.

This is a randomised function of the input votes.

Given the view, the coercer tries to determine whether the

voter voted as requested or not.

The exact definition of

VIEW

is dependent on the scheme.

For example, in

an old-fashioned paper-based scheme with secret ballot boxes, the coercer's

view would consist only of conversations with the voters before and after

the election, and the public tally results. In an electronic voting

scheme that had been proven coercion-resistant in the sense

of [MN06], i.e. one that securely implemented an ideal

functionality IDEAL, the coercer's view could consist of the view

that the ideal adversary obtains in an interaction with IDEAL.

to be everything that the

coercer generates, computes or observes before, during, or after the election.

This is a randomised function of the input votes.

Given the view, the coercer tries to determine whether the

voter voted as requested or not.

The exact definition of

VIEW

is dependent on the scheme.

For example, in

an old-fashioned paper-based scheme with secret ballot boxes, the coercer's

view would consist only of conversations with the voters before and after

the election, and the public tally results. In an electronic voting

scheme that had been proven coercion-resistant in the sense

of [MN06], i.e. one that securely implemented an ideal

functionality IDEAL, the coercer's view could consist of the view

that the ideal adversary obtains in an interaction with IDEAL.

We define the preimage of a coercer's view to be the set of vote profiles (i.e. lists of votes of all voters) that are consistent with that view.

Consider what happens when a coercer demands a vote

![]() but the voter instead casts

but the voter instead casts

![]() . Let

. Let ![]() be the profile

of all the other votes cast. Then the coercer's

view is that produced by

be the profile

of all the other votes cast. Then the coercer's

view is that produced by

![]() and

and ![]() , that is,

VIEW

, that is,

VIEW![]() .

The coercer is trying to detect whether the voter cast

.

The coercer is trying to detect whether the voter cast

![]() or some other vote. To do this, it will first estimate the

probability

of this view being produced by an obedient voter. This is the probability

of the preimage of the view, i.e.

or some other vote. To do this, it will first estimate the

probability

of this view being produced by an obedient voter. This is the probability

of the preimage of the view, i.e.

![]() .

Obviously, if

preimage

.

Obviously, if

preimage![]() or

or

![]() ,

then the

coercer knows that the voter has disobeyed. However, there are other

situations in which the coercer might still

be very suspicious of disobedience: if the voter produces a view that would

be very unlikely with the obedient vote, but quite likely otherwise, then the

coercer may believe that the voter disobeyed. Recall the example of high-precision

tallies from Section 2.3.

,

then the

coercer knows that the voter has disobeyed. However, there are other

situations in which the coercer might still

be very suspicious of disobedience: if the voter produces a view that would

be very unlikely with the obedient vote, but quite likely otherwise, then the

coercer may believe that the voter disobeyed. Recall the example of high-precision

tallies from Section 2.3.

We define the likelihood ratio of the obedient vote

![]() and the

disobedient vote

and the

disobedient vote

![]() for a particular view

VIEW

to be the ratio

of the probabilities that the view was produced with each vote13.

for a particular view

VIEW

to be the ratio

of the probabilities that the view was produced with each vote13.

We assume that the coercer has some ``suspicion

threshold''

![]() . The coercer, after getting a certain

view, computes the likelihood ratio of the obedient vote and every

possible disobedient vote, and punishes the voter when any

value is below

. The coercer, after getting a certain

view, computes the likelihood ratio of the obedient vote and every

possible disobedient vote, and punishes the voter when any

value is below

![]() . The scheme is secure from coercion if there is a

low probability

of producing an outcome that falls below the suspicion threshold.

. The scheme is secure from coercion if there is a

low probability

of producing an outcome that falls below the suspicion threshold.

There are several ways to weaken this definition. The most obvious one is

to set the suspicion threshold

![]() to zero, so the disobedience only fails if the

coercer is certain that the voter disobeyed. However, this seems too weak

because of the weighted votes example mentioned in Section 2.3.

Another weakening

is to allow the coerced voter to collude with other voters to deceive the

coercer. Finally, we could consider a coercer trying to coerce a whole

group of voters simultaneously. We do not consider these weakenings in this

paper.

to zero, so the disobedience only fails if the

coercer is certain that the voter disobeyed. However, this seems too weak

because of the weighted votes example mentioned in Section 2.3.

Another weakening

is to allow the coerced voter to collude with other voters to deceive the

coercer. Finally, we could consider a coercer trying to coerce a whole

group of voters simultaneously. We do not consider these weakenings in this

paper.

Let IDEAL TALLY(d) denote the ideal functionality described described in Claim 4.4. We prove coercion resistance for this ideal functionality. First we must make some assumptions about the probability distribution on everyone's votes, then we show that our scheme is coercion resistant.

Even with the ideal functionality, there are still opportunities for coercion

if weights and tallies are reported with too much precision, as described in

Section 2.3. Also, some low probability events still

expose the absence of a particular permutation. For example, if an elected

candidate has only slightly more than a quota, then the approximation

![]() reveals quite a lot of information about the tally. Fortunately, if the tallies

are reported to relatively few decimal places, the coercer can catch a cheating

voter only with low probability. Of course, we still need to make some assumptions about

the coercer's uncertainty about everyone else's vote.

A joint probability distribution

reveals quite a lot of information about the tally. Fortunately, if the tallies

are reported to relatively few decimal places, the coercer can catch a cheating

voter only with low probability. Of course, we still need to make some assumptions about

the coercer's uncertainty about everyone else's vote.

A joint probability distribution ![]() on

on ![]() votes induces a probability distribution on each partial tally (the tallies

that would be obtained if only those

votes induces a probability distribution on each partial tally (the tallies

that would be obtained if only those ![]() votes were cast), and we make

our assumptions based on that induced distribution.

Informally, the idea is to define the distribution to be

votes were cast), and we make

our assumptions based on that induced distribution.

Informally, the idea is to define the distribution to be

![]() -uncertain if,

given the information revealed according to Claim 4.4,

for all votes that the coercer might demand,

there is a probability of at most

-uncertain if,

given the information revealed according to Claim 4.4,

for all votes that the coercer might demand,

there is a probability of at most ![]() that the coercer's

estimated

likelihood that the voter cheated is greater than

that the coercer's

estimated

likelihood that the voter cheated is greater than

![]() .

.

![]()

To see that no other votes are cheat-revealing, apply Claim 4.4.

If the coerced voter's ``cheat'' does not affect the elimination or election

order, then the coercer's

only extra information is the approximation based on the final tally of each

candidate who wins a seat. By

Definition 4.5, this does not

make the coercer suspicious (on the basis of the ratio of their probabilities

with and without voter obedience).

![]()

The results for a standard PC running an election with 200 votes, 40

candidates, 5 seats and denominator ![]() , over the elliptic curve

defined by

, over the elliptic curve

defined by

![]() (where

(where ![]() is a 160-bit prime)

are as follows. The last three times include the time taken to read in the

file of encrypted votes.

is a 160-bit prime)

are as follows. The last three times include the time taken to read in the

file of encrypted votes.

| Size of Ciphered Votes | |

| Transcript Size | |

| Time for EC to compute Election results | 12 mins |

| Time for EC to output Proof | |

| Time to verify Transcript | 60.2 mins |

It would of course be possible to reveal more information. For example, the initial (first-preference) tallies are used by the Australian Electoral Commission to determine public campaign funding. It would be easy to modify the scheme to reveal this, or to reveal partial information using range proofs, but we have not analysed the security implications of this.

The obvious next step is to try to distribute the secret key so that no single authority could decrypt ballots. A proof of correct decryption could be achieved without explicitly reconstructing the key, using the techniques of [CGS97]. The proof of equality of two encrypted values could also be adapted. The range proofs could be distributed using the techniques of [DFK+06] or [ST06] (which is based on Paillier encryption, to which our scheme could easily be adapted). However, we do not know how to do reweighting without all the authorities knowing which votes are being redistributed. This alone could be enough for successful coercion.

This construction preserves the security assumptions that were made in the body of the paper: the EC does learn each decrypted vote, but does not learn which vote corresponds to which voter (unless the mix servers all collude with it). No other entity learns any information about the contents of any votes.

This document was generated using the LaTeX2HTML translator Version 2002-2-1 (1.71)

Copyright © 1993, 1994, 1995, 1996,

Nikos Drakos,

Computer Based Learning Unit, University of Leeds.

Copyright © 1997, 1998, 1999,

Ross Moore,

Mathematics Department, Macquarie University, Sydney.

The command line arguments were:

latex2html -split 0 -show_section_numbers -local_icons -no_navigation -external_file ./NaishTeague2_0 NaishTeague2_0.tex

The translation was initiated by root on 2008-06-30