|

| |||||||||||||||||||||||||||||||||||||||||||||||||||

| ||||||||||||||||||||||||||||||||||||||||||||||||||||

|

OSDI '02 Paper

[OSDI '02 Tech Program Index]

Secure routing for structured peer-to-peer overlay networksTo appear in the Fifth Symposium on Operating Systems Design and Implementation (OSDI 2002)Miguel Castro1, Peter Druschel2, Ayalvadi Ganesh1, Antony Rowstron1 and Dan S. Wallach2

Abstract:

Structured peer-to-peer overlay networks provide a substrate for the

construction of large-scale, decentralized applications, including

distributed storage, group communication, and content

distribution. These overlays are highly resilient; they can route

messages correctly even when a large fraction of the nodes crash or

the network partitions. But current overlays are not secure; even a

small fraction of malicious nodes can prevent correct message delivery

throughout the overlay. This problem is particularly serious in open

peer-to-peer systems, where many diverse, autonomous parties without

pre-existing trust relationships wish to pool their resources. This

paper studies attacks aimed at preventing correct message delivery in

structured peer-to-peer overlays and presents defenses to these

attacks. We describe and evaluate techniques that allow nodes to join

the overlay, to maintain routing state, and to forward messages

securely in the presence of malicious nodes.

|

|

|

Pastry nodeIds are assigned randomly with uniform distribution from a circular 128-bit id space. Given a 128-bit key, Pastry routes an associated message toward the live node whose nodeId is numerically closest to the key. Each Pastry node keeps track of its neighbor set and notifies applications of changes in the set.

Node state:

For the purpose of routing, nodeIds and keys are thought of as a

sequence of digits in base ![]() (

(![]() is a configuration parameter

with typical value 4). A node's routing table is organized into

is a configuration parameter

with typical value 4). A node's routing table is organized into

![]() rows and

rows and ![]() columns. The

columns. The ![]() entries in row

entries in row ![]() of the

routing table contain the IP addresses of nodes whose nodeIds share

the first

of the

routing table contain the IP addresses of nodes whose nodeIds share

the first ![]() digits with the present node's nodeId; the

digits with the present node's nodeId; the ![]() th

nodeId digit of the node in column

th

nodeId digit of the node in column ![]() of row

of row ![]() equals

equals ![]() . The

column in row

. The

column in row ![]() that corresponds to the value of the

that corresponds to the value of the ![]() th digit

of the local node's nodeId remains empty. A routing table entry is

left empty if no node with the appropriate nodeId prefix is known.

Figure 1 depicts an example routing table.

th digit

of the local node's nodeId remains empty. A routing table entry is

left empty if no node with the appropriate nodeId prefix is known.

Figure 1 depicts an example routing table.

Each node also maintains a neighbor set (called a ``leaf set''). The

leaf set is the set of ![]() nodes with nodeIds that are numerically

closest to the present node's nodeId, with

nodes with nodeIds that are numerically

closest to the present node's nodeId, with ![]() larger and

larger and ![]() smaller nodeIds than the current node's id. The value of

smaller nodeIds than the current node's id. The value of ![]() is constant for all nodes in the overlay, with a typical value of

approximately

is constant for all nodes in the overlay, with a typical value of

approximately

![]() , where

, where ![]() is the number

of expected nodes in the overlay. The leaf set ensures reliable message

delivery and is used to store replicas of application objects.

is the number

of expected nodes in the overlay. The leaf set ensures reliable message

delivery and is used to store replicas of application objects.

Message routing: At each routing step, a node seeks to forward

the message to a node in the routing table whose nodeId shares with

the key a prefix that is at least one digit (or ![]() bits) longer than

the prefix that the key shares with the present node's id. If no such

node can be found, the message is forwarded to a node whose nodeId

shares a prefix with the key as long as the current node, but is

numerically closer to the key than the present node's id. If no

appropriate node exists in either the routing table or neighbor set,

then the current node or its immediate neighbor is the message's final

destination.

bits) longer than

the prefix that the key shares with the present node's id. If no such

node can be found, the message is forwarded to a node whose nodeId

shares a prefix with the key as long as the current node, but is

numerically closer to the key than the present node's id. If no

appropriate node exists in either the routing table or neighbor set,

then the current node or its immediate neighbor is the message's final

destination.

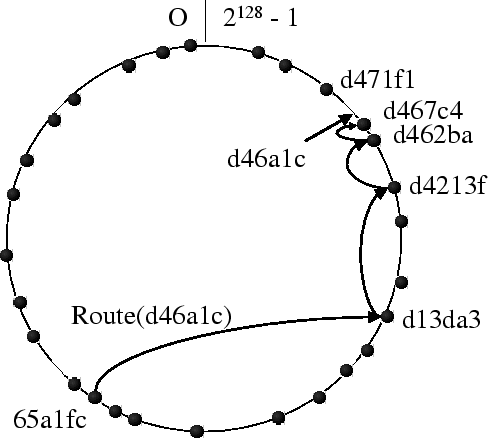

Figure 2 shows the path of an example message. Analysis

shows that the expected number of routing hops is slightly below

![]() , with a distribution that is tight around

the mean. Moreover, simulation shows that the routing is highly

resilient to crash failures.

, with a distribution that is tight around

the mean. Moreover, simulation shows that the routing is highly

resilient to crash failures.

To achieve self-organization, Pastry nodes must dynamically maintain their

node state, i.e., the routing table and neighbor set, in the presence of

node arrivals and node failures.

A newly arriving node with the new nodeId ![]() can initialize its state

by asking any existing Pastry node

can initialize its state

by asking any existing Pastry node ![]() to route a special message

using

to route a special message

using ![]() as the key. The message is routed to the existing node

as the key. The message is routed to the existing node ![]() with nodeId numerically closest to

with nodeId numerically closest to ![]() .

. ![]() then obtains the neighbor set

from

then obtains the neighbor set

from ![]() and constructs its routing table by copying rows from the

routing tables of the nodes it encountered on the original route

from

and constructs its routing table by copying rows from the

routing tables of the nodes it encountered on the original route

from ![]() to

to ![]() . Finally,

. Finally, ![]() announces

its presence to the initial members of its neighbor set, which in turn

update their own neighbor sets and routing tables. Similarly, the overlay

can adapt to abrupt node failure by exchanging a small number of

messages (

announces

its presence to the initial members of its neighbor set, which in turn

update their own neighbor sets and routing tables. Similarly, the overlay

can adapt to abrupt node failure by exchanging a small number of

messages (

![]() ) among a small number of nodes.

) among a small number of nodes.

Next, we briefly describe CAN, Chord and Tapestry, with an emphasis on the differences relative to Pastry.

Tapestry is very similar to Pastry but differs in its approach to

mapping keys to nodes

and in how it manages replication.

In Tapestry, neighboring nodes in the namespace are not aware of each

other. When a node's routing table does not have an entry for a node

that matches a key's ![]() th digit, the message is forwarded to the node

with the next higher value in the

th digit, the message is forwarded to the node

with the next higher value in the ![]() th digit, modulo

th digit, modulo ![]() , found in

the routing table. This procedure, called surrogate routing,

maps keys to a unique live node if the node routing tables are

consistent.

Tapestry does not have a direct analog to a neighbor set, although one

can think of the lowest populated level of the Tapestry routing table

as a neighbor set. For fault tolerance, Tapestry's replica function

produces a set of random keys, yielding a set of replica roots at

random points in the id space.

The expected number of routing hops in Tapestry is

, found in

the routing table. This procedure, called surrogate routing,

maps keys to a unique live node if the node routing tables are

consistent.

Tapestry does not have a direct analog to a neighbor set, although one

can think of the lowest populated level of the Tapestry routing table

as a neighbor set. For fault tolerance, Tapestry's replica function

produces a set of random keys, yielding a set of replica roots at

random points in the id space.

The expected number of routing hops in Tapestry is

![]() .

.

Chord uses a 160-bit circular id space. Unlike Pastry, Chord

forwards messages only in clockwise direction in the circular id

space. Instead of the prefix-based routing table in Pastry, Chord

nodes maintain a routing table consisting of up to

![]() pointers to other live

nodes (called a ``finger table''). The

pointers to other live

nodes (called a ``finger table''). The ![]() th entry in the finger table

of node

th entry in the finger table

of node ![]() refers to the live node with the smallest nodeId clockwise

from

refers to the live node with the smallest nodeId clockwise

from

![]() . The first entry points to

. The first entry points to ![]() 's successor, and

subsequent entries refer to nodes at repeatedly doubling distances

from

's successor, and

subsequent entries refer to nodes at repeatedly doubling distances

from ![]() . Each node in Chord also maintains pointers to its

predecessor and to its

. Each node in Chord also maintains pointers to its

predecessor and to its ![]() successors in the nodeId space (this

successor list represents the neighbor set in our model). Like Pastry,

Chord's replica function maps an object's key to the nodeIds in the

neighbor set of the key's root, i.e., replicas are stored in the

neighbor set of the key's root for fault tolerance. The expected

number of routing hops in Chord is

successors in the nodeId space (this

successor list represents the neighbor set in our model). Like Pastry,

Chord's replica function maps an object's key to the nodeIds in the

neighbor set of the key's root, i.e., replicas are stored in the

neighbor set of the key's root for fault tolerance. The expected

number of routing hops in Chord is

![]() .

.

CAN routes messages in a ![]() -dimensional space, where each node

maintains a routing table with

-dimensional space, where each node

maintains a routing table with ![]() entries and any node can be

reached in

entries and any node can be

reached in

![]() routing hops on average. The entries in a

node's routing table refer to its neighbors in the

routing hops on average. The entries in a

node's routing table refer to its neighbors in the ![]() -dimensional

space. CAN's neighbor table duals as both the routing table and the

neighbor set in our model. Like Tapestry, CAN's replica function

produces random keys for storing replicas at diverse locations.

Unlike Pastry, Tapestry and Chord, CAN's routing table does not grow

with the network size, but the number of routing hops grows faster

than

-dimensional

space. CAN's neighbor table duals as both the routing table and the

neighbor set in our model. Like Tapestry, CAN's replica function

produces random keys for storing replicas at diverse locations.

Unlike Pastry, Tapestry and Chord, CAN's routing table does not grow

with the network size, but the number of routing hops grows faster

than ![]() in this case.

in this case.

Tapestry and Pastry construct their overlay in a Internet topology-aware manner to reduce routing delays and network utilization. In these protocols, routing table entries can be chosen arbitrarily from an entire segment of the nodeId space without increasing the expected number of routing hops. The protocols exploit this by initializing the routing table to refer to nodes that are nearby in the network topology and have the appropriate nodeId prefix. This greatly facilitates proximity routing [17]. However, it also makes these systems vulnerable to certain attacks, as shown in Section 4.

The choice of entries in CAN's and Chord's routing tables is tightly constrained. The CAN routing table entries refer to specific neighboring nodes in each dimension, while the Chord finger table entries refer to specific points in the nodeId space. This makes proximity routing harder but it protects nodes from attacks that exploit attacking nodes' proximity to their victims.

The system runs on a set of ![]() nodes that form an overlay using one

of the protocols described in the previous section. We assume a bound

nodes that form an overlay using one

of the protocols described in the previous section. We assume a bound

![]() (

(

![]() ) on the fraction of nodes that may be faulty.

Faults are modeled using a constrained-collusion Byzantine failure

model, i.e., faulty nodes can behave arbitrarily and they may not all necessarily be

operating as a single conspiracy. The set of faulty nodes is

partitioned into independent coalitions, which are disjoint sets

with size bounded by

) on the fraction of nodes that may be faulty.

Faults are modeled using a constrained-collusion Byzantine failure

model, i.e., faulty nodes can behave arbitrarily and they may not all necessarily be

operating as a single conspiracy. The set of faulty nodes is

partitioned into independent coalitions, which are disjoint sets

with size bounded by ![]() (

(

![]() ). When

). When ![]() , all faulty nodes may

collude with each other to cause the most damage to the system. We

model the case where nodes are grouped into multiple independent

coalitions by setting

, all faulty nodes may

collude with each other to cause the most damage to the system. We

model the case where nodes are grouped into multiple independent

coalitions by setting ![]() . Members of a coalition can work

together to corrupt the overlay but are unaware of nodes in other

coalitions. We studied the behavior of the system with

. Members of a coalition can work

together to corrupt the overlay but are unaware of nodes in other

coalitions. We studied the behavior of the system with ![]() ranging

from

ranging

from ![]() to

to ![]() to model different failure scenarios.

to model different failure scenarios.

We assume that every node in the p2p overlay has a static IP address at which it can be contacted. In this paper, we ignore nodes with dynamically assigned IP addresses, and nodes behind network address translation boxes or firewalls. While p2p overlays can be extended to address these concerns, this paper focuses on more traditional network hosts.

The nodes communicate over normal Internet connections. We distinguish

between two types of communication: network-level, where nodes

communicate directly without routing through the overlay, and overlay-level, where messages are routed through the overlay using

one of the protocols discussed in the previous section. We use

cryptographic techniques to prevent adversaries from observing or

modifying network-level communication between correct nodes. An

adversary has complete control over network-level communication to and

from nodes that it controls. This can compromise overlay-level

communication that is routed through a faulty node. Adversaries may

delay messages between correct nodes but we assume that any message

sent by a correct node to a correct destination over an overlay route

with no faulty nodes is delivered within time ![]() with probability

with probability

![]() .

.

Next, we define a secure routing primitive that can be combined with existing techniques to construct secure applications on structured p2p overlays. Subsequent sections show how to implement the secure routing primitive under the fault and network models that we described in the previous section.

The routing primitives implemented by current structured p2p overlays provide a best-effort service to deliver a message to a replica root associated with a given key. With malicious overlay nodes, the message may be dropped or corrupted, or it may be delivered to a malicious node instead of a legitimate replica root. Therefore, these primitives cannot be used to construct secure applications. For example, when inserting an object, an application cannot ensure that the replicas are placed on legitimate, diverse replica roots as opposed to faulty nodes that impersonate replica roots. Even if applications use cryptographic methods to authenticate objects, malicious nodes may still corrupt, delete, deny access to or supply stale copies of all replicas of an object.

To address this problem, we define a secure routing primitive. The secure routing primitive ensures that when a non-faulty node sends

a message to a key ![]() , the message reaches all non-faulty members in

the set of replica roots

, the message reaches all non-faulty members in

the set of replica roots ![]() with very high probability.

with very high probability. ![]() is

defined as the set of nodes that contains, for each member of the set

of replica keys associated with

is

defined as the set of nodes that contains, for each member of the set

of replica keys associated with ![]() , a live root node that is

responsible for that replica key. In Pastry, for instance,

, a live root node that is

responsible for that replica key. In Pastry, for instance, ![]() is

simply a set of live nodes with nodeIds numerically closest to the

key. Secure routing ensures that (1) the message is eventually

delivered, despite nodes that may corrupt, drop or misroute the

message; and (2) the message is delivered to all legitimate replica

roots for the key, despite nodes that may attempt to impersonate a

replica root.

is

simply a set of live nodes with nodeIds numerically closest to the

key. Secure routing ensures that (1) the message is eventually

delivered, despite nodes that may corrupt, drop or misroute the

message; and (2) the message is delivered to all legitimate replica

roots for the key, despite nodes that may attempt to impersonate a

replica root.

Secure routing can be combined with existing security techniques to safely maintain state in a structured p2p overlay. For instance, self-certifying data can be stored on the replica roots, or a Byzantine-fault-tolerant replication algorithm like BFT [4] can be used to maintain the replicated state. Secure routing guarantees that the replicas are initially placed on legitimate replica roots, and that a lookup message reaches a replica if one exists. Similarly, secure routing can be used to build other secure services, such as maintaining file metadata and user quotas in a distributed storage utility. The details of such services are beyond the scope of this paper.

Implementing the secure routing primitive requires the solution of three problems: securely assigning nodeIds to nodes, securely maintaining the routing tables, and securely forwarding messages. Secure nodeId assignment ensures that an attacker cannot choose the value of nodeIds assigned to the nodes that the attacker controls. Without it, the attacker could arrange to control all replicas of a given object, or to mediate all traffic to and from a victim node.

Secure routing table maintenance ensures that the fraction of faulty nodes that appear in the routing tables of correct nodes does not exceed, on average, the fraction of faulty nodes in the entire overlay. Without it, an attacker could prevent correct message delivery, given only a relatively small number of faulty nodes. Finally, secure message forwarding ensures that at least one copy of a message sent to a key reaches each correct replica root for the key with high probability. Sections 3, 4 and 5 describe solutions to each of these problems.

The performance and security of structured p2p overlay networks depend on the fundamental assumption that there is a uniform random distribution of nodeIds that cannot be controlled by an attacker. This section discusses what goes wrong when the attacker violates this assumption, and how this problem can be addressed.

Attackers who can choose nodeIds can compromise the integrity of a structured p2p overlay, without needing to control a particularly large fraction of the nodes. For example, an attacker may partition a Pastry or Chord overlay if she controls two complete and disjoint neighbor sets. Such attackers may also target particular victim nodes by carefully choosing nodeIds. For example, they may arrange for every entry in a victim's routing table and neighbor set to point to a hostile node in a Chord overlay. At that point, the victim's access to the overlay network is completely mediated by the attacker.

Attackers who can choose nodeIds can also control access to target objects. The attacker can choose the closest nodeIds to all replica keys for a particular target object, thus controlling all replica roots. As a result, the attacker could delete, corrupt, or deny access to the object. Even when attackers cannot choose nodeIds, they may still be able to mount all the attacks above (and more) if they can obtain a large number of legitimate nodeIds easily. This is known as a Sybil attack [10].

Previous approaches to nodeId assignment have either assumed nodeIds are chosen randomly by the new node [5] or compute nodeIds by hashing the IP address of the node [20]. Neither approach is secure because an attacker has the opportunity either to choose nodeIds that are not necessarily random, or to choose an IP address that hashes to a desired interval in the nodeId space. Particularly as IPv6 is deployed, even modest attackers will have more potential IP addresses at their disposal than there are likely to be nodes in a given p2p network.

One solution to securing the assignment of nodeIds is to delegate the problem to a central, trusted authority. We use a set of trusted certification authorities (CAs) to assign nodeIds to principals and to sign nodeId certificates, which bind a random nodeId to the public key that speaks for its principal and an IP address. The CAs ensure that nodeIds are chosen randomly from the id space, and prevent nodes from forging nodeIds. Furthermore, these certificates give the overlay a public key infrastructure, suitable for establishing encrypted and authenticated channels between nodes.

Like conventional CAs, ours can be offline to reduce the risk of exposing certificate signing keys. They are not involved in the regular operation of the overlay. Nodes with valid nodeId certificates can join the overlay, route messages, and leave repeatedly without involvement of the CAs. As with any CA infrastructure, the CA's public keys must be well known, and can be installed as part of the node software itself, as is done with current Web browsers.

The inclusion of an IP address in the certificate deserves some explanation. Some p2p protocols, such as Tapestry and Pastry, measure the network delay between nodes and choose routing table entries that minimize delay. If an attacker with multiple legitimate nodeId certificates could freely swap certificates among nodes it controls, it might be able to increase the fraction of attacker's nodes in a target node's routing table. By binding the nodeId to an IP address, it becomes harder for an attacker to move nodeIds across nodes. We allow multiple nodeId certificates per IP address because the IP addresses of nodes may change and because otherwise, attackers could deny service by hijacking victim's IP addresses.

A downside of binding nodeIds to IP addresses is that, if a node's IP address changes, either as a result of dynamic address assignment, host mobility, or organizational network changes, then the node's old certificate and nodeId become invalid. In p2p systems where IP addresses are allowed to change dynamically, nodeId swapping attacks may be unavoidable.

Certified nodeIds work well when nodes have fixed nodeIds, which is

the case in Chord, Pastry, and Tapestry. However, it might be harder

to secure nodeId assignment in CAN. CAN nodeIds represent a zone in a

![]() -dimensional space that is split in half when a new node

joins [16]. Both the nodeId of the original node

and the nodeId of the joining node change during this process.

-dimensional space that is split in half when a new node

joins [16]. Both the nodeId of the original node

and the nodeId of the joining node change during this process.

While nodeId assignment by a CA ensures that nodeIds are chosen randomly, it is also important to prevent an attacker from easily obtaining a large number of nodeId certificates. One solution is to require an attacker to pay money for certificates, via credit card or any other suitable mechanism. With this solution, the cost of an attack grows naturally with the size of the network. For example, if nodeId certificates cost $20, controlling 10% of an overlay with 1,000 nodes costs $2,000 and the cost rises to $2,000,000 with 1,000,000 nodes. The cost of targeted attacks is even higher; it costs an expected $20,000 to obtain the closest nodeId to a particular point in the id space in an overlay with 1,000 nodes. Apart from making attacks economically expensive, these fees can also fund the operation of the CAs.

Another solution is to bind nodeIds to real-world identities instead of charging money. In practice, different forms of CAs are suitable in different situations. The identity-based CA is the preferred solution in ``virtual private'' overlays run by an organization that already maintains employment or membership records with strong identity checks. In an open Internet deployment, a money-only CA may be more suitable because it avoids the complexities of authenticating real-world identities.

None of the known solutions to nodeId assignment are effective when the overlay network is very small. For small overlay networks, we must require that all members of the network are trusted not to cheat. Only when a network reaches a critical mass, where it becomes sufficiently hard for an attacker to muster enough resources to control a significant fraction of the overlay, should untrusted nodes be allowed to join.

The CAs represent points of failure, vulnerable to both technical and legal attacks. Also, for some p2p networks, it may be cumbersome to require users to spend money or prove their real-world identities. Therefore, it would be desirable to construct secure p2p overlays without requiring centralized authorities, fees or identity checks. Unfortunately, fully decentralized nodeId assignment appears to have fundamental security limitations [10]. None of the methods we are aware of can ultimately prevent a determined attacker from acquiring a large collection of nodeIds.

However, several techniques may be able to, at a minimum, moderate the rate at which an attacker can acquire nodeIds. One possible solution is to require prospective nodes to solve crypto puzzles [15] to gain the right to use a nodeId, an approach that has been taken to address a number of denial of service attacks [13,8]. Unfortunately, the cost of solving a crypto puzzle must be acceptable to the slowest legitimate node, yet the puzzle must be hard enough to sufficiently slow down an attacker with access to many fast machines. This conflict limits the effectiveness of any such technique.

For completeness, we briefly describe here one relatively simple

approach to generate certified nodeIds in a completely distributed

fashion using crypto puzzles. The idea is to require new nodes to

generate a key pair with the property that the SHA-1 hash of the

public key has the first ![]() bits zero. The expected number of

operations required to generate such a key pair is

bits zero. The expected number of

operations required to generate such a key pair is ![]() . The

properties of public-key cryptography allow the nodes to use a secure

hash of the public key as their nodeId. This hash should be computed

using SHA-1 with a different initialization vector or MD5 to avoid

reducing the number of random bits in nodeIds. Nodes can prove that

they performed the required amount of work to use a nodeId without

revealing information that would allow others to reuse their work.

The value of

. The

properties of public-key cryptography allow the nodes to use a secure

hash of the public key as their nodeId. This hash should be computed

using SHA-1 with a different initialization vector or MD5 to avoid

reducing the number of random bits in nodeIds. Nodes can prove that

they performed the required amount of work to use a nodeId without

revealing information that would allow others to reuse their work.

The value of ![]() can be set to achieve the desired level of security.

can be set to achieve the desired level of security.

It is also possible to bind IP addresses with nodeIds to avoid attacks

on overlays that exploit network locality. The idea is to require

nodes to consume resources in order to be able to use a given nodeId

with an IP address. We do this by requiring nodes to find a string ![]() such that SHA-1(SHA-1(ipaddr,x),nodeId) has

such that SHA-1(SHA-1(ipaddr,x),nodeId) has ![]() bits equal to zero. Nodes would be required to present such an

bits equal to zero. Nodes would be required to present such an ![]() for

the pair (nodeId,ipaddr) to be accepted by others.

for

the pair (nodeId,ipaddr) to be accepted by others.

Finally, it is possible to periodically invalidate nodeIds by having some trusted entity broadcast to the overlay a message supplying a different initialization vector for the hash computations. This makes it harder for an attacker to accumulate many nodeIds over time and to reuse nodeIds computed for one overlay in another overlay. However, it requires legitimate nodes to periodically spend additional time and communication to maintain their membership in the overlay.

We now turn our attention to the problem of secure routing table

maintenance. The routing table maintenance mechanisms are used to

create routing tables and neighbor sets for joining nodes, and to

maintain them after creation. Ideally, each routing table and

neighbor set should have an average fraction of only ![]() random

entries that point to nodes controlled by the attacker (called ``bad

entries''). But attackers can increase the fraction of bad entries by

supplying bad routing updates, which reduces the probability of

routing successfully to replica roots.

random

entries that point to nodes controlled by the attacker (called ``bad

entries''). But attackers can increase the fraction of bad entries by

supplying bad routing updates, which reduces the probability of

routing successfully to replica roots.

Preventing attackers from choosing nodeIds is necessary to avoid this problem but it is not sufficient as illustrated by the two attacks discussed next. We also discuss solutions to this problem.

The first attack is aimed at routing algorithms that use network

proximity information to improve routing efficiency: attackers may

fake proximity to increase the fraction of bad routing table

entries. For example, the network model that we assumed allows an

attacker to control communication to and from faulty nodes that it

controls. When a correct node ![]() sends a probe to estimate delay to a

faulty node with a certain nodeId, an attacker can intercept the probe

and have the faulty node closest to

sends a probe to estimate delay to a

faulty node with a certain nodeId, an attacker can intercept the probe

and have the faulty node closest to ![]() reply to it. If the attacker

controls enough faulty nodes spread over the Internet, it can make

nodes that it controls appear close to correct nodes to increase the

probability that they are used for routing.

The attack is harder when

reply to it. If the attacker

controls enough faulty nodes spread over the Internet, it can make

nodes that it controls appear close to correct nodes to increase the

probability that they are used for routing.

The attack is harder when ![]() (the maximal fraction of colluding

nodes) is small even if

(the maximal fraction of colluding

nodes) is small even if ![]() is large.

is large.

This attack can be ruled out by a more restrictive communication model, since nodeId certificates bind IP addresses to nodeIds (see Section 3.2). For example, if faulty nodes can only observe messages that are sent to their own IP address [19], this attack is prevented. But note that a rogue ISP or corporation with several offices around the world could easily perform this attack by configuring their routers appropriately. The attack is also possible if there is any other form of indirection that the attacker can control, e.g., mobile IPv6.

The second attack does not manipulate proximity information. Instead,

it exploits the fact that it is hard to determine whether routing

updates are legitimate in overlay protocols like Tapestry and Pastry.

Nodes receive routing updates when they join the overlay and when

other nodes join, and they fetch routing table rows from other nodes

in their routing table periodically to patch holes and reduce hop

delays. In these systems, attackers can more easily supply routing

updates that always point to faulty nodes. This simple attack causes

the fraction of bad routing table entries to increase toward one as

the bad routing updates are propagated. More precisely, routing

updates from correct nodes point to a faulty node with probability at

least ![]() whereas this probability can be as high as one for routing

updates from faulty nodes. Correct nodes receive updates from other

correct nodes with probability at most

whereas this probability can be as high as one for routing

updates from faulty nodes. Correct nodes receive updates from other

correct nodes with probability at most ![]() and from faulty nodes

with probability at least

and from faulty nodes

with probability at least ![]() . Therefore, the probability that a

routing table entry is faulty after an update is at least

. Therefore, the probability that a

routing table entry is faulty after an update is at least

![]() , which is greater than

, which is greater than ![]() . This effect cascades

with each subsequent update, causing the fraction of faulty entries to

tend towards one.

. This effect cascades

with each subsequent update, causing the fraction of faulty entries to

tend towards one.

Systems without strong constraints on the set of nodeIds that can fill

each routing table slot are more vulnerable to this attack. Pastry

and Tapestry impose very weak constraints at the top levels of routing

tables. This flexibility makes it hard to determine if routing updates

are unbiased but it allows these systems to effectively exploit

network proximity to improve routing performance. CAN and Chord impose

strong constraints on nodeIds in routing table entries: they need to

be the closest nodeIds to some point in the id space. This makes it

hard to exploit network proximity to improve performance but it is

good for security; if attackers cannot choose the nodeIds they

control, the probability that an attacker controls the nodeId closest

to a point in the id space is ![]() .

.

To enable secure routing table maintenance, it is important to impose strong constraints on the set of nodeIds that can fill each slot in a routing table. For example, the entry in each slot can be constrained to be the closest nodeId to some point in the id space as in Chord. This constraint can be verified and it is independent of network proximity information, which can be manipulated by attackers.

The solution that we propose uses two routing tables: one that exploits network proximity information for efficient routing (as in Pastry and Tapestry), and one that constrains routing table entries (as in Chord). In normal operation, the first routing table is used to forward messages to achieve good performance. The second one is used only when the efficient routing technique fails. We use the test in Section 5.2 to detect when routing fails.

We modified Pastry to use this solution. We use the normal

locality-aware Pastry routing table and an additional constrained Pastry routing table. In the locality-aware routing table

of a node with identifier ![]() , the slot at level

, the slot at level ![]() and domain

and domain ![]() can contain any nodeId that shares the first

can contain any nodeId that shares the first ![]() digits with

digits with ![]() and

has the value

and

has the value ![]() in the

in the ![]() st digit. In the constrained routing

table, the entry is further constrained to point to the closest nodeId

to a point

st digit. In the constrained routing

table, the entry is further constrained to point to the closest nodeId

to a point ![]() in the domain. We define

in the domain. We define ![]() as follows: it shares the

first

as follows: it shares the

first ![]() digits with

digits with ![]() , it has the value

, it has the value ![]() in the

in the ![]() st digit,

and it has the same remaining digits as

st digit,

and it has the same remaining digits as ![]() .

.

Pastry's message forwarding works with the constrained routing table without modifications. The same would be true with Tapestry. But the algorithms to initialize and maintain the routing table were modified as follows.

All overlay routing algorithms rely on a bootstrap node to initialize the routing state of a newly joining node. The bootstrap node is responsible for routing a message using the nodeId of the joining node as the key. If the bootstrap node is faulty, it can completely corrupt the view of the overlay network as seen by the new node. Therefore, it is necessary to use a set of diverse bootstrap nodes large enough to ensure that with very high probability, at least one of them is correct. The use of nodeId certificates makes the task of choosing such a set easier because the attacker cannot forge nodeIds.

A newly joining node, ![]() , picks a set of bootstrap nodes and asks all

of them to route using its nodeId as the key. Then, non-faulty

bootstrap nodes use secure forwarding techniques (described in

Section 5.2) to obtain the neighbor set for the

joining node. Node

, picks a set of bootstrap nodes and asks all

of them to route using its nodeId as the key. Then, non-faulty

bootstrap nodes use secure forwarding techniques (described in

Section 5.2) to obtain the neighbor set for the

joining node. Node ![]() collects the proposed neighbor sets from each

of the bootstrap nodes, and picks the ``closest'' live nodeIds from

each proposed set to be its neighbor set (where the definition of

closest is protocol specific).

collects the proposed neighbor sets from each

of the bootstrap nodes, and picks the ``closest'' live nodeIds from

each proposed set to be its neighbor set (where the definition of

closest is protocol specific).

The locality-aware routing table is initialized as before by

collecting rows from the nodes along the route to the nodeId. The

difference is that there are several routes; ![]() picks the entry with

minimal network delay from the set of candidates it receives for each

routing table slot.

picks the entry with

minimal network delay from the set of candidates it receives for each

routing table slot.

Each entry in the constrained routing table can be initialized by

using secure forwarding to obtain the live nodeId closest to the desired

point ![]() in the id space. This is similar to what is done in

Chord. The problem is that it is quite expensive with

in the id space. This is similar to what is done in

Chord. The problem is that it is quite expensive with ![]() (recall

that

(recall

that ![]() controls the number of columns in the routing table of

Tapestry and Pastry). To reduce the overhead, we can take advantage of

the fact that, by induction, the constrained routing tables of the

nodes in

controls the number of columns in the routing table of

Tapestry and Pastry). To reduce the overhead, we can take advantage of

the fact that, by induction, the constrained routing tables of the

nodes in ![]() 's neighbor set point to entries that are close to the

desired point

's neighbor set point to entries that are close to the

desired point ![]() . Therefore,

. Therefore, ![]() can collect routing tables from the

nodes in its neighbor set and use them to initialize its constrained

routing table. From the set of candidates that it receives for each

entry, it picks the nodeId that is closest to the desired point for

that entry. As a side effect of this process,

can collect routing tables from the

nodes in its neighbor set and use them to initialize its constrained

routing table. From the set of candidates that it receives for each

entry, it picks the nodeId that is closest to the desired point for

that entry. As a side effect of this process, ![]() informs the nodes in

its neighbor set of its arrival.

informs the nodes in

its neighbor set of its arrival.

We exploit the symmetry in the constrained routing table to inform

nodes that need to update their routing tables to reflect ![]() 's

arrival:

's

arrival: ![]() checks its neighbor set and the set of candidates for

each entry to determine which candidates should update routing table

entries to point to

checks its neighbor set and the set of candidates for

each entry to determine which candidates should update routing table

entries to point to ![]() . It informs those candidates of its arrival.

. It informs those candidates of its arrival.

To ensure neighbor set stabilization in the absence of new joins

and leaves, ![]() informs the members of its neighbor set whenever it

changes and it periodically retransmits this information until its

receipt is acknowledged.

informs the members of its neighbor set whenever it

changes and it periodically retransmits this information until its

receipt is acknowledged.

The use of certified nodeIds and secure routing table maintenance

ensure that each constrained routing table (and neighbor set) has an

average fraction of only ![]() random entries that point to nodes

controlled by the attacker. But routing with the constrained routing

table is not sufficient because the attacker can reduce the

probability of successful delivery by simply not forwarding messages

according to the algorithm. The attack is effective even when

random entries that point to nodes

controlled by the attacker. But routing with the constrained routing

table is not sufficient because the attacker can reduce the

probability of successful delivery by simply not forwarding messages

according to the algorithm. The attack is effective even when ![]() is

small, as we will show. This section describes an efficient solution

to this problem.

is

small, as we will show. This section describes an efficient solution

to this problem.

All structured p2p overlays provide a primitive to send a message to a

key. In the absence of faults, the message is delivered to the root

node for the key after an average of ![]() routing hops. But routing may

fail if any of the

routing hops. But routing may

fail if any of the ![]() nodes along the route between the sender and

the root are faulty; faulty nodes may simply drop the message, route

the message to the wrong place, or pretend to be the key's root.

Therefore, the probability of routing successfully between two correct

nodes when a fraction

nodes along the route between the sender and

the root are faulty; faulty nodes may simply drop the message, route

the message to the wrong place, or pretend to be the key's root.

Therefore, the probability of routing successfully between two correct

nodes when a fraction ![]() of the nodes is faulty is only:

of the nodes is faulty is only:

![]() , which is independent of

, which is independent of ![]() .

.

The root node for a key may itself be faulty. As discussed before,

applications can tolerate root faults by replicating the information

associated with the key on several nodes -- the replica

roots. Therefore, the probability of routing successfully to a

correct replica root is only:

![]() . The value of

. The value of ![]() depends

on the overlay: it is

depends

on the overlay: it is

![]() in CAN,

in CAN,

![]() in Chord,

and

in Chord,

and

![]() in Pastry and Tapestry.

in Pastry and Tapestry.

We ran simulations of Pastry to validate this model. The model

predicts a probability of success slightly lower than the probability

that we observed in the simulations (because the number of Pastry hops

is slightly less than

![]() on

average [3]) but the error was below 2%.

on

average [3]) but the error was below 2%.

Figure 3 plots the probability of routing to a

correct replica in Pastry (computed using the model) for different

values of ![]() ,

, ![]() , and

, and ![]() . The probability drops quite fast when

. The probability drops quite fast when

![]() or

or ![]() increase. Even with only 10% of the nodes compromised, the

probability of successful routing is only 65% when there are 100,000

nodes in a Pastry overlay.

increase. Even with only 10% of the nodes compromised, the

probability of successful routing is only 65% when there are 100,000

nodes in a Pastry overlay.

In CAN, Pastry, and Tapestry, applications can reduce the number of

hops by increasing the value of ![]() or

or ![]() . Fewer hops increase the

probability of routing correctly. For example, the probability of

successful delivery with

. Fewer hops increase the

probability of routing correctly. For example, the probability of

successful delivery with ![]() and 100,000 nodes is 65% in Pastry

when

and 100,000 nodes is 65% in Pastry

when ![]() and 75% when

and 75% when ![]() . But increasing

. But increasing ![]() also increases the

cost of routing table maintenance; a high probability of routing

success requires an impractically large value of

also increases the

cost of routing table maintenance; a high probability of routing

success requires an impractically large value of ![]() . Chord currently

uses a fixed

. Chord currently

uses a fixed ![]() , which results in a low probability of success,

e.g., the probability is only 42% under the same conditions.

, which results in a low probability of success,

e.g., the probability is only 42% under the same conditions.

The results in Figure 3 show that it is important to devise mechanisms to route securely. We want a secure routing primitive that takes a message and a destination key and ensures that with very high probability at least one copy of the message reaches each correct replica root for the key. The question is how to do this efficiently.

Our approach is to route a message efficiently and to apply a failure test to determine if routing worked. We only use more expensive redundant routing when the failure test returns positive. In more detail, our secure routing primitive routes a message efficiently to the root of the destination key using the locality-aware routing table. Then, it collects the prospective set of replica roots from the prospective root node and applies the failure test to the set. If the test is negative, the prospective replica roots are accepted as the correct ones. If it is positive, message copies are sent over diverse routes toward the various replica roots such that with high probability each correct replica root is reached. We start by describing how to implement the failure test. Then we explain redundant routing and why we rejected an alternate approach called iterative routing.

Detecting routing failures is difficult because a coalition of faulty nodes can pretend to be the legitimate replica roots for a given key. Since the replica roots are determined by the structure of the overlay, a node whose nodeId is far from the key must rely on overlay routing to determine the correct set of replica roots. But if a message is routed by a faulty node, the adversary can fabricate a credible route and replica root set that contain only nodes it controls. Furthermore, it might be the case that the adversary just happens to legitimately control one of the actual replica roots. This problem is common to all structured p2p overlay protocols.

The routing failure test is based on the observation that the average density of nodeIds per unit of ``volume'' in the id space is greater than the average density of faulty nodeIds. The test works by comparing the density of nodeIds in the neighbor set of the sender with the density of nodeIds close to the replica roots of the destination key. We describe the test in detail only in the context of Pastry to simplify the presentation; the generalization to other overlays is straightforward. Overlays that distribute replica keys for a key uniformly over the id space can still use this check by comparing the density at the sender with the average distance between each replica key and its root's nodeId.

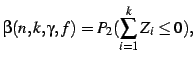

In Pastry, the set of replica roots for a key is a subset of the

neighbor set of the key's root node, called the key's root

neigbor set.

Each correct node ![]() computes the average numerical distance,

computes the average numerical distance,

![]() , between consecutive nodeIds in its neighbor set. The neighbor

set of

, between consecutive nodeIds in its neighbor set. The neighbor

set of ![]() contains

contains ![]() live nodes:

live nodes: ![]() , the

, the ![]() nodes with the

closest nodeIds less than

nodes with the

closest nodeIds less than ![]() 's, and the

's, and the ![]() nodes with the closest

nodeIds greater than

nodes with the closest

nodeIds greater than ![]() 's. To test a prospective root neighbor set,

's. To test a prospective root neighbor set,

![]() , for a key

, for a key ![]() ,

, ![]() checks that:

checks that:

If ![]() satisfies both conditions, the test returns negative;

otherwise, it returns positive. The test can be inaccurate in one of

two ways: it can return a false positive when the prospective

root neighbor set is correct, or it can return a false negative

when the prospective set is incorrect. We call the probability of

false positives

satisfies both conditions, the test returns negative;

otherwise, it returns positive. The test can be inaccurate in one of

two ways: it can return a false positive when the prospective

root neighbor set is correct, or it can return a false negative

when the prospective set is incorrect. We call the probability of

false positives ![]() and the probability of false negatives

and the probability of false negatives

![]() . The parameter

. The parameter ![]() controls the tradeoff between

controls the tradeoff between

![]() and

and ![]() . Intuitively, increasing

. Intuitively, increasing ![]() decreases

decreases

![]() but it also increases

but it also increases ![]() .

.

Assuming that there are ![]() live nodes with nodeIds uniformly

distributed over the id space (which has length

live nodes with nodeIds uniformly

distributed over the id space (which has length ![]() ), the

distances between consecutive nodeIds are approximately independent

exponential random variables with mean

), the

distances between consecutive nodeIds are approximately independent

exponential random variables with mean ![]() for large

for large ![]() . The same

holds for the distances between consecutive nodeIds of faulty nodes

that can collude together but the mean is

. The same

holds for the distances between consecutive nodeIds of faulty nodes

that can collude together but the mean is

![]() . It is

interesting to note that

. It is

interesting to note that ![]() and

and ![]() are independent of

are independent of ![]() .

They only depend on the upper bound,

.

They only depend on the upper bound, ![]() , on the fraction of colluding

nodes because faulty nodes only know the identities of faulty nodes

that they collude with.

, on the fraction of colluding

nodes because faulty nodes only know the identities of faulty nodes

that they collude with.

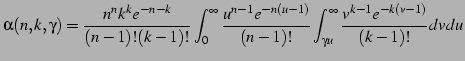

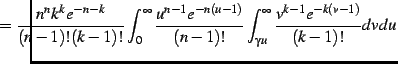

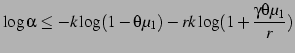

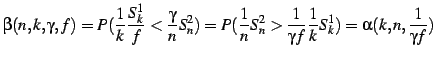

Under these assumptions, we have derived the following expressions to

compute ![]() and

and ![]() (see detailed derivation in the Appendix):

(see detailed derivation in the Appendix):

|

|

![$\displaystyle \alpha \leq \exp \left\{ -k \Bigl[ (r+1)\log \frac{r+\gamma}{r+1} - \log \gamma \Bigr] \right\}$](img79.png) |

![$\displaystyle \beta \leq \exp \left\{ -k \Bigl[ (r+1)\log \frac{r+\gamma c}{r+1} + \log (\gamma c) \Bigr] \right\}$](img80.png) |

The analysis shows that ![]() and

and ![]() are independent of

are independent of ![]() (provided

(provided ![]() ),

and that the test's accuracy can be improved by increasing the number

of distance samples used to compute the means. It is easy to increase

the number of samples

),

and that the test's accuracy can be improved by increasing the number

of distance samples used to compute the means. It is easy to increase

the number of samples ![]() used to compute

used to compute ![]() by augmenting the

mechanism that is already in place to stabilize neighbor sets. This

mechanism propagates nodeIds that are added and removed from a

neighbor set to the other members of the set; it can be extended to

propagate nodeIds further but we omit the details due to lack of

space. It is hard to increase the number of samples used to compute

by augmenting the

mechanism that is already in place to stabilize neighbor sets. This

mechanism propagates nodeIds that are added and removed from a

neighbor set to the other members of the set; it can be extended to

propagate nodeIds further but we omit the details due to lack of

space. It is hard to increase the number of samples used to compute

![]() because of some attacks that we describe below. Therefore,

we keep

because of some attacks that we describe below. Therefore,

we keep ![]() .

.

|

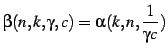

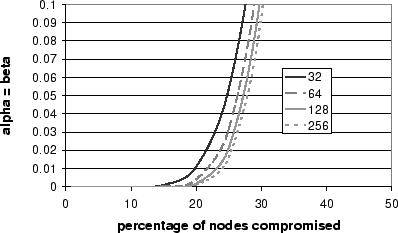

We ran several simulations to evaluate the effectiveness of our

routing failure test. The simulations ran in a system with 100,000

random nodeIds. Figure 4 plots values of ![]() and

and ![]() for different values of

for different values of ![]() with

with ![]() , the

number of samples at the sender is

, the

number of samples at the sender is ![]() , and the number of root neighbors

is

, and the number of root neighbors

is ![]() . The figure shows predicted values computed

numerically, the upper bounds, and values measured in the

simulations. The predicted curves match the measured curves almost

exactly but the upper bounds are not very tight. The minimum error is

obtained when

. The figure shows predicted values computed

numerically, the upper bounds, and values measured in the

simulations. The predicted curves match the measured curves almost

exactly but the upper bounds are not very tight. The minimum error is

obtained when

![]() , which is equal to

, which is equal to ![]() with

with

![]() in this case.

in this case.

These attacks can be avoided by having the sender contact all the prospective root neighbors to determine if they are live and if they have a nodeId certificate that was omitted from the prospective root neighbor set. To implement this efficiently, the prospective root returns to the sender a message with the list of nodeId certificates, a list with the secure hashes of the neighbor sets reported by each of the prospective root neighbors, and the set of nodeIds (not in the prospective root neighbor set) that are used to compute the hashes in this list. The sender checks that the hashes are consistent with the identifiers of the prospective root neighbors. Then, it sends each prospective root neigbor the corresponding neighbor set hash for confirmation.

In the absence of faults, the root neighbors will confirm the hashes

and the sender can perform the density comparison immediately. For a

sufficiently large timeout, this happens with probability

![]() , where binom is the binomial

distribution [6] and

, where binom is the binomial

distribution [6] and ![]() is the number of root neighbors. With

faulty nodes in the prospective root neighbor set, the routing

failure test may require more communication before the density check

can be run. We are still studying the best strategy to deal with this

case. Currently, we consider the test failed when the prospective root neighbors

don't agree and use redundant routing. But, it may be worthwhile

investing some additional communication before reverting to redundant

routing.

is the number of root neighbors. With

faulty nodes in the prospective root neighbor set, the routing

failure test may require more communication before the density check

can be run. We are still studying the best strategy to deal with this

case. Currently, we consider the test failed when the prospective root neighbors

don't agree and use redundant routing. But, it may be worthwhile

investing some additional communication before reverting to redundant

routing.

In addition to these attacks, there is a nodeId suppression attack that seems

to be unavoidable and significantly decreases the accuracy of this

test. The attacker can suppress nodeIds close to the sender by

leaving the overlay, which increases ![]() . Similarly, the attacker

can suppress nodeIds in the root neighbor set, which increases

. Similarly, the attacker

can suppress nodeIds in the root neighbor set, which increases

![]() . Furthermore, the attacker can alternate between the two

modes and honest nodes have no way of detecting in which mode they are

operating.

. Furthermore, the attacker can alternate between the two

modes and honest nodes have no way of detecting in which mode they are

operating.

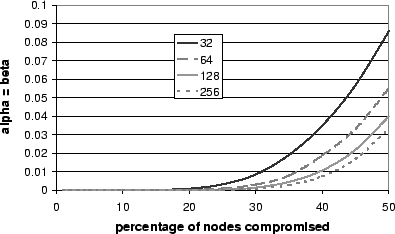

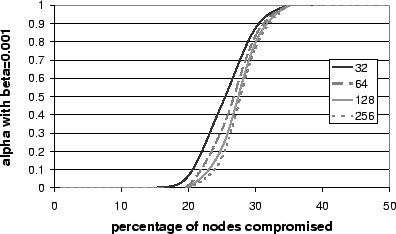

We ran simulations to compute the minimum error probability (

![]() ) with and without nodeId suppression attacks for different

values of

) with and without nodeId suppression attacks for different

values of ![]() . The probability of error increases fast with

. The probability of error increases fast with ![]() and

it is higher than

and

it is higher than ![]() for

for

![]() even with 256 samples at the

sender. The nodeId suppression attack increases the minimum

probability of error for large percentages of compromised nodes, e.g.,

the probability of error is higher than 0.001 for

even with 256 samples at the

sender. The nodeId suppression attack increases the minimum

probability of error for large percentages of compromised nodes, e.g.,

the probability of error is higher than 0.001 for

![]() even with

256 samples at the sender.

Figures 5 and 6

show the results without and with nodeId suppression attacks,

respectively.

even with

256 samples at the sender.

Figures 5 and 6

show the results without and with nodeId suppression attacks,

respectively.

|

|

These results indicate that our routing failure test is not very

accurate. But, fortunately we can trade off an increase in ![]() to

achieve a target

to

achieve a target ![]() and use redundant routing to disambiguate

false positives. We ran simulations to determine the minimum

and use redundant routing to disambiguate

false positives. We ran simulations to determine the minimum ![]() that can be achieved for a target

that can be achieved for a target

![]() with different values of

with different values of

![]() , and different numbers of samples at the sender.

Figure 7 shows the results with nodeId

suppression attacks.

, and different numbers of samples at the sender.

Figure 7 shows the results with nodeId

suppression attacks.

|

The results show that the test is not meaningful for this target

![]() and

and ![]() with nodeId suppression attacks. However,

setting

with nodeId suppression attacks. However,

setting

![]() with 256 samples at the sender enables the

routing failure test to achieve the target

with 256 samples at the sender enables the

routing failure test to achieve the target ![]() for

for

![]() .

For this value of

.

For this value of ![]() and with

and with ![]() , nodeId suppression

attacks can increase

, nodeId suppression

attacks can increase ![]() to 0.77. But without nodeId suppression

attacks the value of

to 0.77. But without nodeId suppression

attacks the value of ![]() is only 0.12, i.e., redundant routing is required 12% of the time.

is only 0.12, i.e., redundant routing is required 12% of the time.

The redundant routing technique is invoked when the routing failure test is positive. The idea is simply to route copies of the message over multiple routes toward each of the destination key's replica roots. If enough copies of the message are sent along diverse routes to each replica key, all correct replica roots will receive at least one copy of the message with high probability.

The issue is how to ensure that routes are diverse. One approach is to ask the members of the sender's neighbor set to forward the copies of the message to the replica keys. This technique is sufficient in overlays that distribute the replica keys uniformly over the id space (e.g., CAN and Tapestry). But it is not sufficient in overlays that choose replica roots in the neighbor set of the key's root (e.g., Chord and Pastry) because the routes all converge on the key's root, which might be faulty. For these overlays, we developed a technique called neighbor set anycast that sends copies of the message toward the destination key until they reach a node with the key's root in its neighbor set. Then it uses the detailed knowledge that such a node has about the portion of the id space around the destination key to ensure that all correct replica roots receive a copy of the message.

To simplify the presentation, we only describe in detail how redundant

routing works in Pastry. If a correct node ![]() sends a message to a

destination key

sends a message to a

destination key ![]() and the routing failure test is positive, it does

the following:

and the routing failure test is positive, it does

the following:

(1) ![]() sends

sends ![]() messages to the destination

key

messages to the destination

key ![]() . Each message is forwarded via a different member of

. Each message is forwarded via a different member of ![]() 's

neighbor set; this causes the messages to use diverse routes. All

messages are forwarded using the constrained routing table and they

include a nonce.

's

neighbor set; this causes the messages to use diverse routes. All

messages are forwarded using the constrained routing table and they

include a nonce.

(2) Any correct node that receives one of the messages and

has ![]() 's root in its neighbor set returns its nodeId certificate and

the nonce, signed with its private key, to

's root in its neighbor set returns its nodeId certificate and

the nonce, signed with its private key, to ![]() .

.

(3) ![]() collects in a set

collects in a set ![]() the

the ![]() nodeId certificates

numerically closest to

nodeId certificates

numerically closest to ![]() on the left, and the

on the left, and the ![]() closest to

closest to ![]() on the right. Only certificates with valid signed nonces are added to

on the right. Only certificates with valid signed nonces are added to

![]() and they are first marked pending.

and they are first marked pending.

(4) After a timeout or after all ![]() replies are received,

replies are received, ![]() sends a list with the nodeIds in

sends a list with the nodeIds in ![]() to each node marked pending in

to each node marked pending in ![]() and marks the nodes done.

and marks the nodes done.

(5) Any correct node that receives this list forwards

![]() 's original message to the nodes in its neighbor set that are not

in the list, or it sends a confirmation to

's original message to the nodes in its neighbor set that are not

in the list, or it sends a confirmation to ![]() if there are no such nodes. This may

cause steps 2 to 4 to be repeated.

if there are no such nodes. This may

cause steps 2 to 4 to be repeated.

(6) Once ![]() has received a confirmation from each of the nodes in

has received a confirmation from each of the nodes in

![]() , or step 4 was executed three times, it computes the set of

replica roots for

, or step 4 was executed three times, it computes the set of

replica roots for ![]() from

from ![]() .

.

If the timeout is sufficiently large and correct nodes have another

correct node in each half of their neighbor set1,

the probability of reaching all correct replica roots of ![]() is

approximately equal to the probability that at least one of the

anycast messages is forwarded over a route with no faults to a correct

node with the key's root in its neighbor set. Assuming independent routes, this probability is:

is

approximately equal to the probability that at least one of the

anycast messages is forwarded over a route with no faults to a correct

node with the key's root in its neighbor set. Assuming independent routes, this probability is:

We also ran simulations to determine the probability of reaching all

correct replica roots with our redundant routing technique.

Figure 8 plots the predicted probability and

the probability measured in the simulator for 100,000 nodes, ![]() ,

and

,

and ![]() . The analytic model matches the results well for high

success probabilities. The results show that the probability of

success is greater than 0.999 for

. The analytic model matches the results well for high

success probabilities. The results show that the probability of

success is greater than 0.999 for ![]() . Therefore, this technique

combined with our routing failure test can achieve a reliability of

approximately 0.999 for

. Therefore, this technique

combined with our routing failure test can achieve a reliability of

approximately 0.999 for ![]() .

.

|

We studied several versions of redundant routing that achieve the same

probability of success but perform differently. For example, the

signed nonces are used to ensure that the nodeId certificates in ![]() belong to live nodes. But nodes can avoid signing nonces by

periodically signing their clock reading in a system with loosely

synchronized clocks, and no signatures are necessary if the attacker

cannot forge IP source addresses. We are still exploring the design

space. For example, it should be possible to improve performance

significantly by sending the anycast messages one at a time and using

a version of the routing failure test after each one. This approach would also

work well when reading self-certifying data.

belong to live nodes. But nodes can avoid signing nonces by

periodically signing their clock reading in a system with loosely

synchronized clocks, and no signatures are necessary if the attacker

cannot forge IP source addresses. We are still exploring the design

space. For example, it should be possible to improve performance

significantly by sending the anycast messages one at a time and using

a version of the routing failure test after each one. This approach would also

work well when reading self-certifying data.

The performance of Pastry's secure routing primitive depends on the

cost of the routing failure test, the cost of redundant routing, and

on the probability that redundant routing can be avoided. This section

presents an analysis of these costs and probability. For simplicity,

we assume that all faulty nodes can collude (![]() ), the number of

probes used by redundant routing is equal to the neighbor set size

(

), the number of

probes used by redundant routing is equal to the neighbor set size

(![]() ), the number of samples at the source for routing failure tests

is

), the number of samples at the source for routing failure tests

is ![]() , and the number of nodes in the overlay is

, and the number of nodes in the overlay is ![]() .

.

The cost of the routing failure test when it returns negative is an

extra round-trip delay and ![]() messages. The total number of bytes

in all messages is:

messages. The total number of bytes

in all messages is:

Using PSS-R [1] for signing nodeId

certificates with 1024-bit modulus and 512-bit modulus for the node

public keys, the nodeId certificate size is 128B. Therefore, the extra

bandwidth consumed by the routing failure test is approximately 5.6 KB

with ![]() and 2.9 KB with

and 2.9 KB with ![]() (plus the space used up by message

headers). When the test returns positive, it adds the same number of

messages and bytes but the extra delay is the timeout period.

(plus the space used up by message

headers). When the test returns positive, it adds the same number of

messages and bytes but the extra delay is the timeout period.

The cost of redundant routing depends on the value of ![]() . The best

case occurs when all of the root's neighbor set is added to

. The best

case occurs when all of the root's neighbor set is added to ![]() in the first iteration. In this case, redundant routing adds

in the first iteration. In this case, redundant routing adds

![]() extra message delays and

extra message delays and

![]() messages. The total number of bytes in these messages is:

messages. The total number of bytes in these messages is:

Using PSS-R for signing nonces, the signed nonce size is 64B.

Therefore, the extra bandwidth consumed in this case is 22 KB with

![]() and 7 KB with

and 7 KB with ![]() (plus the space used up by message

headers).

(plus the space used up by message

headers).

Under attack redundant routing adds a delay of at most three timeout

periods and the expected number of extra messages is less than

![]() , where

, where

![]() is the expected number of correct nodes in the

root's neighbor set that is added to

is the expected number of correct nodes in the

root's neighbor set that is added to ![]() in the first iteration.

The expected number of messages is less than 451 with

in the first iteration.

The expected number of messages is less than 451 with ![]() and

and ![]() and less than 188 with

and less than 188 with ![]() and

and ![]() . The total number

of bytes sent under attack is similar to the best case value except

that the sender sends an additional

. The total number

of bytes sent under attack is similar to the best case value except

that the sender sends an additional

![]() IdSize

bytes in nodeId lists and the number of messages increases. This is

an additional 12 KB with

IdSize

bytes in nodeId lists and the number of messages increases. This is

an additional 12 KB with ![]() and

and ![]() and 1 KB with

and 1 KB with ![]() and

and ![]() (plus the space used up by message headers).

(plus the space used up by message headers).

The probability of avoiding redundant routing is given by

![]() , where

, where ![]() is the probability that

the overlay routes the message to the correct replica root,

is the probability that

the overlay routes the message to the correct replica root, ![]() is

the probability that there are no faulty nodes in the

neighbor set of the root, and

is

the probability that there are no faulty nodes in the

neighbor set of the root, and ![]() is the false positive rate of the routing failure

test. We use

is the false positive rate of the routing failure

test. We use

![]() , which assumes that routing

tables have an average of

, which assumes that routing

tables have an average of ![]() random bad entries. This assumption

holds for the locality-aware routing table in the absence of the

attacks discussed in Section 4 and it holds for

the constrained routing table even with these attacks. We do not have

a good model of the effect of these attacks on the locality aware

routing table but we believe that they are very hard to mount for

small values of

random bad entries. This assumption

holds for the locality-aware routing table in the absence of the

attacks discussed in Section 4 and it holds for

the constrained routing table even with these attacks. We do not have

a good model of the effect of these attacks on the locality aware

routing table but we believe that they are very hard to mount for

small values of ![]() (

(![]() ).

).

The parameters ![]() and

and ![]() , should be set based on the desired

security level, which can be expressed as the probability

, should be set based on the desired

security level, which can be expressed as the probability ![]() that all correct replica roots receive a copy of the message. The

overlay size and the assignment of values to the parameters implicitly

define a bound on

that all correct replica roots receive a copy of the message. The

overlay size and the assignment of values to the parameters implicitly

define a bound on ![]() . If this bound is exceeded,

. If this bound is exceeded, ![]() will

drop. For example, we saw that

will

drop. For example, we saw that

![]() with

with

![]() and

and ![]() .

But redundant routing is invoked 12% of the time for this value of

.

But redundant routing is invoked 12% of the time for this value of

![]() even with no faults.

even with no faults.

One can trade off security for improved performance by increasing

![]() to reduce

to reduce ![]() , and by decreasing

, and by decreasing ![]() to reduce the cost

of the routing failure test and redundant routing and to increase

to reduce the cost

of the routing failure test and redundant routing and to increase

![]() . For example, consider the following two scenarios: (1)

. For example, consider the following two scenarios: (1)

![]() with

with

![]() and

and ![]() , and

(2)

, and

(2)

![]() with

with

![]() and

and

![]() . Figure 9 plots the probability of avoiding