
Creating component models is an area of active research. Models based on simulation or emulation [12,29] require fairly detailed knowledge of the system's internals; analytical models [22,19] require less, but device-specific information must still be taken into account to obtain accurate predictions. Black-box models [4,26] are built by recording and correlating the inputs and outputs of the system in diverse states, without regard to its internal structure. Since SMART needs to explore a large candidate space in a short time, simulation-based approaches are not feasible because of their long prediction overhead. Analytical models and black-box approaches both work with SMART. For the SMART prototype, we use a regression-based approach to bootstrap the models and refine them continuously at run time.
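As a rough illustration of the regression-based approach, the sketch below fits a straight line from request rate to average latency and updates the fit as new samples arrive. The class name `LatencyModel`, the single-feature linear form and the sample numbers are assumptions made for illustration; the features and model form used by the prototype are not specified here.

```python
class LatencyModel:
    """Black-box latency model: latency_ms ~ a + b * iops (illustrative only)."""

    def __init__(self):
        # Running sums for ordinary least squares on a single feature.
        self.n = 0
        self.sx = self.sy = self.sxx = self.sxy = 0.0

    def observe(self, iops, latency_ms):
        """Fold one (input, output) observation into the running sums."""
        self.n += 1
        self.sx += iops
        self.sy += latency_ms
        self.sxx += iops * iops
        self.sxy += iops * latency_ms

    def predict(self, iops):
        """Predict latency from the current least-squares fit."""
        if self.n == 0:
            raise ValueError("model has no observations yet")
        denom = self.n * self.sxx - self.sx ** 2
        if denom == 0:
            # Fewer than two distinct inputs: fall back to the mean latency.
            return self.sy / self.n
        slope = (self.n * self.sxy - self.sx * self.sy) / denom
        intercept = (self.sy - slope * self.sx) / self.n
        return intercept + slope * iops


model = LatencyModel()
# Bootstrap phase: a few probe measurements (made-up numbers).
for iops, lat in [(100, 6.0), (200, 8.0), (300, 10.0)]:
    model.observe(iops, lat)
bootstrap_estimate = model.predict(400)

# Run-time refinement: fold in a newly monitored sample and predict again.
model.observe(400, 13.0)
refined_estimate = model.predict(400)
```

Keeping only running sums means each refinement step costs constant time, which suits a model that is consulted many times while exploring a large candidate space.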

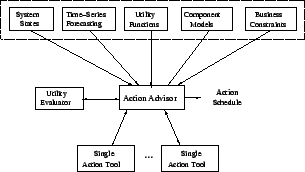

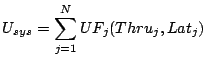

2.2 Utility Evaluator

As the name suggests, the Utility Evaluator calculates the overall utility delivered by the storage system in a given system state. The calculation involves getting the access characteristics of each workload and using the component models to interpolate the average response time of each workload. The Utility Evaluator then uses the throughput and response time of each workload to calculate the utility value delivered by the storage system.
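The evaluation step can be sketched as follows. The sketch assumes that the system utility is the sum of the per-workload utilities, and it uses the utility shape from the sensitivity tests (linear in throughput while the SLO is met, zero when it is violated). The `Workload` fields and all numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    name: str
    throughput_iops: float   # monitored throughput
    latency_ms: float        # response time interpolated from component models
    slo_latency_ms: float    # SLO latency goal
    coeff: float             # utility per IOPS while the SLO is met (assumed)


def workload_utility(w: Workload) -> float:
    """Linear utility in throughput when the SLO is met, zero when violated."""
    return w.coeff * w.throughput_iops if w.latency_ms <= w.slo_latency_ms else 0.0


def system_utility(workloads) -> float:
    """Assumed aggregation: sum the utility of every workload."""
    return sum(workload_utility(w) for w in workloads)


workloads = [
    Workload("A", throughput_iops=200, latency_ms=8, slo_latency_ms=10, coeff=5),
    Workload("B", throughput_iops=100, latency_ms=15, slo_latency_ms=10, coeff=20),
]
delivered = system_utility(workloads)   # workload B violates its SLO
```

In this example only workload A contributes, so the delivered utility is well below what the system would deliver if B's latency goal were met.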

In addition, for any given workload demands where the

2.3 Single Action Tools

These tools automate the invocation of a single action. A few examples are Chameleon [25] and Facade [17] for throttling; QoSMig [10] and Aqueduct [16] for migration; and Ergastulum [6] and Hippodrome [5] for provisioning. Each of these tools typically includes the logic for deciding the action invocation parameter values and an executor to enforce these parameters.

The single action tools take the system state, performance models and utility functions as input from the Action Advisor and output the invocation parameters. For example, in the case of migration, the tool decides the data to be migrated, the target location and the migration speed. Every action has a cost in terms of resource or budget overhead and a benefit in terms of improved workload performance. The action invocation parameters are used to determine the resulting performance of each workload and the corresponding utility value.

2.4 Action Advisor

The Action Advisor generates the corrective action schedule. The steps involved are as follows (details of the algorithm are covered in the next section):

Action Advisor is the core of SMART: it determines an action schedule consisting of one or more actions (what), with the action invocation time (when) and the invocation parameters (how). The goal of Action Advisor is to pick a combination of actions that will improve the overall system utility or, equivalently, reduce the system utility loss. In the rest of this section, we first motivate the algorithm intuitively and then give the details.

[Equation 6]

The risk value of an action is calculated by:

[Equation 7]

where one of the terms is the system utilization when the action is invoked.

For each action option returned by the single action tools, the Action Advisor calculates the risk factor and scales the cumulative utility loss according to Equation 8. Action selection is then performed based on the scaled cumulative utility loss (for example, in GreedyPrune).

4 Experiments

SMART generates an action schedule to improve system utility. To evaluate the quality of its decisions, we implemented SMART in both a real file system, GPFS [21], and a simulator. The system implementation allows us to verify whether SMART can be applied in practice, while the simulator provides a more controlled and scalable environment in which we can run repeatable experiments to gain insight into SMART's overhead and its sensitivity to errors in the input information.

The experiments are divided into three parts. First, sanity check experiments examine the impact of various configuration parameters on SMART's decisions. Second, feasibility experiments evaluate the behavior of two representative cases from the sanity check using the GPFS prototype. Third, sensitivity tests first examine the quality of the decisions with accurate component models and future predictions over a variety of scenarios using the simulator, and then vary the error rates of the component models and of the time-series prediction in turn to evaluate their impact on the quality of SMART's decisions. Note that SMART is designed to assist administrators in making decisions. However, in order to examine the quality of SMART's decisions (for example, what happens if the administrator follows SMART's advice), the selected actions are executed automatically in the experiments.

In the rest of this section, we first describe our GPFS prototype implementation and then present the experimental results of the three tests.

4.1 GPFS Prototype Implementation

The SMART prototype is implemented on GPFS, a commercial high-performance distributed file system [21]. GPFS manages the underlying storage systems as pools that differ in capacity, performance and availability. The storage systems can be accessed transparently by client nodes running on separate physical machines.

The prototype implementation involved sensors for monitoring the workload states, actuators for executing corrective actions and an Action Advisor for decision making.

Sensors: They collect information about the run-time state of workloads. The monitor daemon in each GPFS client node tracks the access characteristics of the workload and writes them to a file, which can be analyzed periodically in a centralized fashion. Workloads are the unit of tracking and control; in the prototype implementation, a workload is defined manually as a collection of PIDs assigned by the OS. The monitoring daemon does its book-keeping at the GPFS read/write function call invoked after the VFS translation.

Action actuators: Although the long-term goal of the prototype is to support all corrective actions, as a proof of concept we first implemented action actuators for the three most commonly used corrective actions: throttling, migration and adding new pools.

- IO throttling is enforced at the GPFS client nodes using a token-bucket algorithm. The decision-making for throttling each workload is made in a centralized fashion, with the token-issue rate and bucket size written to a control file that is checked periodically (every 20 ms) by the node throttling daemon.

- Similarly, the control file for the migration daemon consists of entries of the form (file name, source pool, destination pool), and the migration speed is controlled by throttling the migration process. The migration daemon thread runs in the context of one of the client nodes, periodically checks for updates in the control file and invokes the GPFS built-in function mmchattr to migrate files.

- Because the addition of hardware normally requires human intervention, we mimic the effect of adding new hardware by pre-reserving storage devices and forbidding access to them until SMART decides to add them to the system. In addition, the storage devices are configured with different lead times to mimic the overhead of placing orders and installation.

In addition, the daemon threads for throttling and migration run at the user-level and invoke kernel-level ioctls for the respective actions.
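The token-bucket throttling described above can be sketched as follows. This is a generic token bucket rather than the prototype's daemon: the class name, the `configure` method (standing in for re-reading the control file) and the explicit `now` argument (used instead of a wall clock so the example is deterministic) are assumptions.

```python
class TokenBucket:
    """Token bucket: tokens accrue at `rate` per second up to `capacity`."""

    def __init__(self, rate, capacity, now=0.0):
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity      # start with a full bucket
        self.last = now

    def configure(self, rate, capacity):
        """Apply new parameters, e.g. after the control file has changed."""
        self.rate = rate
        self.capacity = capacity
        self.tokens = min(self.tokens, capacity)

    def try_consume(self, now, cost=1.0):
        """Return True if an IO costing `cost` tokens may be issued at `now`."""
        elapsed = now - self.last
        self.last = now
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


bucket = TokenBucket(rate=100.0, capacity=10.0)    # 100 IOPS, burst of 10
# Fifteen back-to-back requests at time 0: only the burst allowance passes.
burst = sum(bucket.try_consume(now=0.0) for _ in range(15))
# After 0.05 s the bucket has refilled by 5 tokens, so a request passes again.
later = bucket.try_consume(now=0.05)
```

The token-issue rate bounds the sustained IO rate of a workload, while the bucket size bounds how large a burst it can send before being delayed.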

Action Advisor Integration: The SMART Action Advisor is implemented using the techniques described in Section 3. Time-series forecasting is done off-line: the monitored access characteristics of each workload are periodically fed to the ARIMA module [24] to refine the future forecast. Similarly, performance prediction is done by bootstrapping the system for the initial models and refining the models as more data are collected. Once the Action Advisor is invoked, it communicates with the individual action decision-making boxes and generates an action schedule. The selected actions are held by the Action Advisor until their invocation time (determined by SMART) is due. At that time, the control files for the corresponding action actuators are updated according to SMART's decision.

Without access to commercial decision tools, we implemented our own throttling, migration and provisioning tools. Throttling uses a simulated annealing algorithm [20] to allocate tokens to each workload. The migration plan is generated by combining optimization, planning and risk modulation; it decides what to migrate and where, when to start the migration, and the migration speed. The provisioning decision is made by estimating the overall system utility for each provisioning option, taking into account the utility loss before the hardware arrives, the utility loss introduced by the load-balancing operation (after the new hardware comes into place), and the financial cost of buying and maintaining the hardware.

4.2 Sanity Check

As a sanity check, the action advisor in the GPFS prototype is given the initial system settings as input, while the configuration parameters are varied to examine their impact on SMART's action schedule. The initial system setting for the tests is as follows:

Workloads: There are four workload streams: one is a 2-month trace replay of HP's Cello99 traces [18]. The other three workloads are synthetic workload traces with the following characteristics: 1)

Components: There are three logical volumes: POOL1 and POOL2 are both RAID 5 arrays with 16 drives each, while POOL3 is a RAID 0 array with 8 drives. POOL3 is originally off-line and becomes accessible only when SMART selects hardware provisioning as a corrective action. The initial workload-to-component mapping is: [HP: POOL1], [Trend: POOL1], [Phased: POOL2] and [Backup: POOL2].

Miscellaneous settings: The optimization window is set to one month; the default budget constraint is $20,000 and the one day standard deviation of the load for risk analysis is configured as 10% unless otherwise specified. The provisioning tool is configured with 5 options, each with different buying cost, leadtime and estimated performance models. For these initial system settings, the system utility loss at different time intervals without any corrective action is shown in Figure 5 (b).

An ideal way to evaluate the quality of SMART's decisions would be to compare them with those of existing automated algorithms or with decisions made by an administrator. However, we are not aware of any existing work that considers multiple actions, and it is very difficult to quantify the decisions of a representative administrator. We therefore take the alternative approach of comparing the impact of SMART's decisions with the maximum theoretical system utility (upper bound, Equation 3) and the system utility without any action (lower bound).

In the rest of this section, using the system settings described above, we vary the configuration parameters that affect SMART's scheduling decisions, namely the utility function values (test 1), the length of the optimization window (test 2), the budget constraints (test 3) and the risk factor (test 4). In test 5, we explore how SMART handles unexpected cases. For each of these tests, we present the corrective action schedule generated by SMART and the corresponding predicted utility loss as a function of time (depicted on the x-axis).

Test 1: Impact of Utility Function

|

SMART selects actions that

maximize the overall system utility value, which is driven by the utility functions for

individual workloads using the storage system. In this test, we vary

![]() 's utility function of meeting the SLO latency

goal from the default

's utility function of meeting the SLO latency

goal from the default ![]() to

to

![]() . As

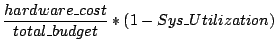

shown in Figure 6(a), the default utility

assignment for

. As

shown in Figure 6(a), the default utility

assignment for ![]() causes a fast growing overall utility

loss - SMART selects to add new hardware. However, for

the low value utility assignment, the latency violation

caused by the increasing load results in a much slower

growth in the utility loss. As a result, the cost of adding

new hardware cannot be justified for the current optimization window and

hence SMART decides to settle for throttling

and migration. Note that as

the utility loss slowly approaches to the point where

the cost of adding new hardware can be justified,

SMART will suggest invoking hardware provisioning as needed.

causes a fast growing overall utility

loss - SMART selects to add new hardware. However, for

the low value utility assignment, the latency violation

caused by the increasing load results in a much slower

growth in the utility loss. As a result, the cost of adding

new hardware cannot be justified for the current optimization window and

hence SMART decides to settle for throttling

and migration. Note that as

the utility loss slowly approaches to the point where

the cost of adding new hardware can be justified,

SMART will suggest invoking hardware provisioning as needed.

Test 2: Impact of Optimization Window

SMART is designed to select corrective actions that maximize the overall utility for a given optimization window. In this test, we vary the optimization window between 2 days, 1 week and 1 month (the default value). Compared to the schedule for 1 month in Figure 6 (a), Figure 7 shows that SMART correctly chooses different action schedules for the same starting system settings. In brief, for a short optimization window (Figure 7 (a) and (b)), SMART selects action options with a lower cost, while for a longer optimization window (Figure 6 (a)), it suggests higher-cost corrective options that are more beneficial in the long run.

Test 3: Impact of Budget Constraints

Test 3 demonstrates how SMART responds to various budget constraints. As shown in Figure 8, SMART settles for throttling and migration if no budget is available for buying new hardware. With a $5000 budget, SMART opts for adding hardware. However, compared to the hardware selected for the default $20,000 budget (shown in Figure 6 (a)), the hardware selected is not sufficient to solve the problem completely, and a certain degree of traffic regulation is still required.

Test 4: Impact of Risk Modulation

SMART uses risk modulation to balance between the risk of invoking an inappropriate action and the corresponding benefit on the utility value. For this experiment, the size of the dataset selected for migration is varied, changing the risk value (Equation 7) associated with the action options. SMART will select the high-risk option only if its benefit is proportionally higher. As shown in Figure 9, SMART changes the ranking of the corrective options and selects a different action invocation schedule for the two risk cases.

Test 5: Handling of Unexpected Case

|

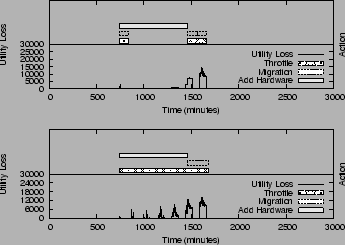

This test explores SMART's ability to handle the unexpected workload

demands. Figure 10 (a) shows the sending rate of

the workload demands.

From minute 60 to minute 145, ![]() sends

at 1500 IOPS instead of the normal 250 IOPS. The difference

between the predicted value 250 IOPS and the observed

value 1500 IOPS exceeds the threshold

and SMART switches to unexpected mode. For both

cases, SMART invokes throttling directly. But for case

1, the migration option involves a 1000 GB data movement and

is never invoked because

the spike duration is not long enough to reach

to a point where the migration invocation cost

is less than the utility loss of staying with throttling.

For case 2, a lower cost migration option is available (8GB data)

and after 5 minutes, the utility loss due to settling for throttling

already exceeds the invocation cost of migration.

The migration option is invoked immediately as a result.

sends

at 1500 IOPS instead of the normal 250 IOPS. The difference

between the predicted value 250 IOPS and the observed

value 1500 IOPS exceeds the threshold

and SMART switches to unexpected mode. For both

cases, SMART invokes throttling directly. But for case

1, the migration option involves a 1000 GB data movement and

is never invoked because

the spike duration is not long enough to reach

to a point where the migration invocation cost

is less than the utility loss of staying with throttling.

For case 2, a lower cost migration option is available (8GB data)

and after 5 minutes, the utility loss due to settling for throttling

already exceeds the invocation cost of migration.

The migration option is invoked immediately as a result.
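The behavior in the two cases can be sketched as a simple rule: throttle as soon as the surge is detected, then invoke migration once the utility loss accumulated under throttling exceeds the migration invocation cost. The function names, the relative threshold and all cost figures below are hypothetical; only the 250 and 1500 IOPS rates and the 85-minute spike come from the test description.

```python
def is_unexpected(predicted_iops, observed_iops, threshold=0.5):
    """Flag a demand that deviates from the forecast by more than `threshold`
    (a relative threshold; its value here is an assumption)."""
    return abs(observed_iops - predicted_iops) > threshold * predicted_iops


def migration_minute(loss_per_minute, migration_cost, spike_minutes):
    """Return the first minute at which the utility loss accumulated under
    throttling exceeds the migration invocation cost, or None if the spike
    ends before that point is reached."""
    accumulated = 0.0
    for minute in range(1, spike_minutes + 1):
        accumulated += loss_per_minute
        if accumulated > migration_cost:
            return minute
    return None


surge = is_unexpected(predicted_iops=250, observed_iops=1500)
# Made-up costs: an expensive migration never pays off within the spike,
# while a cheap one does after a few minutes of throttling.
case1 = migration_minute(loss_per_minute=10.0, migration_cost=5000.0, spike_minutes=85)
case2 = migration_minute(loss_per_minute=10.0, migration_cost=45.0, spike_minutes=85)
```

With these numbers the expensive option is never invoked and the cheap one is invoked at minute 5, mirroring the qualitative outcome of cases 1 and 2.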

4.3 Feasibility Test Using GPFS Prototype

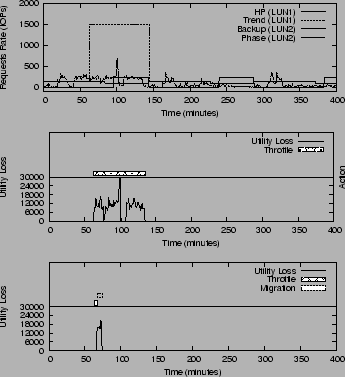

In these tests, SMART is run within the actual GPFS deployment. The tests serve two purposes: (1) to verify if the action schedule generated by SMART can actually help reduce system utility loss; (2) to examine if the utility loss predicted by SMART matches the observed utility loss. We run two tests to demonstrate the normal mode and unexpected mode operation.

Test 1: Normal Mode

The settings for the experiment are the same as those used in the sanity check tests, with two exceptions: the footprint size and the lead time of hardware addition. To reduce the running time of the experiment, the IO features are changed to run 60 times faster (every minute in the figure corresponds to one hour in the trace), the footprint size is shrunk by a factor of 60, and the lead time of adding hardware is set to 55 minutes (which maps to the original 55 hours).

SMART evaluates the information and decides that the best option is to add a new pool. Because the new pool takes 55 minutes to come into effect, SMART looks back for solutions that can reduce the utility loss during the time window [0, 55]. It chooses to migrate workload data from POOL1 to POOL2 and to throttle workloads until the new pool arrives. After POOL3 joins, the load-balancing operation decides to migrate one workload to POOL3 and to move the previously migrated data back to POOL1. In the final workload-to-component mapping, one workload is on POOL1, two are on POOL2 and one is on POOL3.

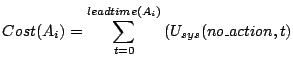

As shown in Figure 11 (a), compared to taking no action, SMART's action schedule eliminates about 80% of the original utility loss, and the remaining loss grows at a much slower rate. Before time 20, the utility loss with no action is slightly lower than with SMART because the SMART schedule pays extra utility loss for invoking the migration operation.

It can be observed from Figure 11 (b) that the utility loss is sometimes negative. This is because the maximum utility is calculated from the planned workload demands, while the observed utility value is calculated from the observed throughput. Due to the lack of precise control in task scheduling, the workload generator cannot generate I/O requests exactly as specified. For example, the workload generator for the HP traces is supposed to send out requests at a rate of 57.46 IOPS at time 33, while the observed throughput is actually 58.59 IOPS. As a result, the observed utility value is higher than the maximum value, which results in a negative utility loss. For a similar reason, the observed utility loss fluctuates frequently around zero.
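To make the arithmetic concrete, the sketch below computes the utility loss for the time-33 example, assuming the SLO is met and the utility is linear in throughput. The unit coefficient is a placeholder, not a value from the experiments.

```python
def utility_loss(planned_iops, observed_iops, coeff=1.0):
    """Utility loss = maximum utility (from planned demand) minus observed
    utility (from observed throughput), for a linear utility function."""
    max_utility = coeff * planned_iops
    observed_utility = coeff * observed_iops
    return max_utility - observed_utility


# Planned 57.46 IOPS, observed 58.59 IOPS: the observed utility exceeds the
# "maximum", so the computed loss is about -1.13 utility units.
loss_at_33 = utility_loss(planned_iops=57.46, observed_iops=58.59)
```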

SMART schedules actions and predicts the utility loss to be close to zero. However, the observed utility loss (shown in Figure 11 (b)) has non-zero values. To understand the cause, we filter out the observed utility loss due to imprecise workload generation (described above) and plot the remaining utility loss in Figure 11 (c). As we can see, the predicted and observed values match most of the time, except for two spikes at time 2 and time 58. Examining the run-time performance log, we found several high-latency spikes (+60 ms compared to the normal 10 ms) on the migrated workload during migration. This is because the migration process locks 256 KB blocks for consistency purposes; if the workload tries to access these blocks, it is delayed until the lock is released. The performance models fail to capture this scenario, so we observe a mismatch between the predicted and observed utility values. However, these are only transient behaviors in the system and do not affect the overall quality of SMART's decisions.

Test 2: Unexpected Case

Similar to the sanity check test (Figure 10 (a)), we intentionally create a load surge from time 10 to time 50. SMART invokes throttling immediately and waits for about 3 minutes (until time 13), at which point the utility loss of invoking migration is lower than the loss due to throttling. The migration operation executes from time 13 to time 17, and the system experiences no utility loss once it is done. As in the previous test, the temporarily lower utility loss without any action (shown in Figure 12) is due to the extra utility loss incurred by data movement. We omit the other figures because they offer no new observations about the predicted and observed utility values.

4.4 Sensitivity Test

We test the sensitivity of SMART to errors in performance prediction and future prediction under various configurations. This test is based on a simulator because it provides a more controlled and scalable environment, allowing us to test various system settings in a shorter time. We developed a simulator that takes the original system state, the future workload forecast, the performance models and the utility functions as input and simulates the execution of SMART's decisions. We vary the number of workloads in the system from 10 to 100. For each workload setting, 50 scenarios are automatically generated as follows:

Workload features: The sending rate and footprint size of each workload are generated using a Gaussian mixture distribution: with a high probability, the sending rate (or footprint size) is drawn from a normal distribution with a lower mean value, and with a low probability, it is drawn from another normal distribution with a larger mean value. The Gaussian mixture distribution mimics real-world behavior, in which a small number of applications contribute the majority of the system load and access the majority of the data. Other workload characteristics are randomly generated.

Initial data placement: The number of components is proportional to the number of flows. For our tests, it is chosen to be 1/10 of the number of flows. In addition, we intentionally create an unbalanced system (60% of the workloads go to one component and the rest are distributed randomly among the other components). This design introduces SLO violations, and therefore utility loss, so that corrective actions are needed.

Workload trending: To mimic workload changes, 30% of the workloads increase and 30% decrease. In particular, the daily growth step size is generated using a random distribution with a mean of 1/10 of the original load, and the decrease step size is randomly distributed with a mean of 1/20 of the original load.

Utility functions: The utility function for meeting the SLO requirement of each workload is assumed to be a linear curve whose coefficients are randomly generated according to a uniform distribution ranging from 5 to 50. The utility function for violating the SLO requirement is assumed to be zero for all workloads. The SLO goals are also randomly generated, taking the workload demands and performance into consideration.

For a three month optimization window, the 500 scenarios experienced utility loss in various degrees ranging from 0.02% to 83% of the maximum system utility (the CDF is shown in Figure 13).
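A scenario generator along the lines described above might look like the following sketch. The mixture probability, the means and standard deviations, and the choice of component 0 as the overloaded one are illustrative assumptions; only the 60% imbalance and the 1/10 component ratio come from the description.

```python
import random


def sample_rate(rng, p_heavy=0.1, light=(50.0, 10.0), heavy=(1000.0, 200.0)):
    """Two-component Gaussian mixture: mostly light workloads, a few heavy
    ones. All parameter values are illustrative assumptions."""
    mean, std = heavy if rng.random() < p_heavy else light
    return max(0.0, rng.gauss(mean, std))    # clamp: a rate cannot be negative


def place_workloads(rng, n_workloads):
    """Unbalanced placement: 60% of the workloads go to component 0 and the
    rest are spread randomly over the other components."""
    # One component per ten workloads; at least two so "other" components exist.
    n_components = max(2, n_workloads // 10)
    n_hot = (6 * n_workloads) // 10
    placement = [0] * n_hot
    placement += [rng.randrange(1, n_components)
                  for _ in range(n_workloads - n_hot)]
    return placement, n_components


rng = random.Random(42)                      # fixed seed for repeatability
rates = [sample_rate(rng) for _ in range(100)]
placement, n_components = place_workloads(rng, 100)
```

Seeding the generator makes each generated scenario reproducible, which matters when the same scenario is replayed with and without corrective actions.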

Test 1: With Accurate Performance Models and Future Prediction

For this test, we assume that both the performance prediction and the future prediction are accurate. The Cumulative Distribution Functions of the percentage of utility loss, both with and without corrective actions, are shown in Figure 13. Comparing the two curves, with the actions selected by Action Advisor, SMART is very close to the maximum utility. More than 80% of the scenarios have a utility loss ratio of less than 2%, and more than 93% have a ratio of less than 4%.

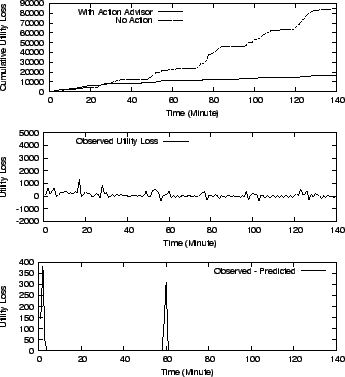

Test 2: With Performance Model Errors

Our previous analysis is based on the assumption that perfectly accurate component models are available for extrapolating the latency of a given workload. However, this is not always true in real-world systems. To understand how performance prediction errors affect the quality of the decision, we perform the following experiments: (1) We generate a set of synthetic models and make decisions based on them. The latency calculated using these models is used as the "predicted latency", and the corresponding utility loss is the "predicted utility loss". (2) For the same settings and action parameters, the "real latency" is simulated by adding errors on top of the "predicted latency" to mimic real system latency variations. Because the residual normally grows with the real value, we generate a random scaling factor rather than an absolute error. For example, if the predicted latency is 10 ms and the random error is 0.2, the real latency is simulated as 10*(1+0.2) = 12 ms. The "real utility" is estimated based on this.
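The error injection in step (2) can be sketched as below. The scaling rule follows the worked example; drawing the factor uniformly from [-e, +e] for a model error e is an assumption, since the distribution of the random error is not stated.

```python
import random


def simulate_real_latency(predicted_ms, error_factor):
    """Scale the predicted latency by (1 + error_factor)."""
    return predicted_ms * (1.0 + error_factor)


def random_error_factor(rng, model_error):
    """Draw a relative error for a given model error level. The uniform
    distribution is an assumption made for this sketch."""
    return rng.uniform(-model_error, model_error)


# The worked example from the text: 10 ms predicted, error factor 0.2.
example = simulate_real_latency(10.0, 0.2)

# A randomly perturbed latency for a 20% model error.
rng = random.Random(0)
noisy = simulate_real_latency(10.0, random_error_factor(rng, 0.2))
```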

Figure 14 (a) shows the ratio of the observed utility loss to the predicted utility loss, which reflects the degree of mismatch between the two due to performance prediction errors. As we can see, there is a significant difference between them: for a 20% model error, the average observed utility loss is 6 times the predicted value. Next, we examine how this difference affects the quality of the decision. Figure 14(b) plots the CDF of the remaining percentage of utility loss. It grows as the model error increases. But even with a 100% model error, on average, the action selected by Action Advisor removes nearly 88% of the utility loss.

Test 3: With Time Series Prediction Errors

The future forecasting error is introduced in a similar fashion. The workload demand forecast is generated first. Based on that, the "real" workload demands are generated by scaling the forecast with a random factor that follows a normal distribution, with the future forecasting error as the standard deviation. The predicted utility loss is calculated from the "forecasted" demands, and the observed utility loss is calculated from the "real" workload demands. Note that, for this set of tests, we restrict the Action Advisor to operating only in the normal mode, because otherwise it would automatically switch to the unexpected mode.
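A minimal sketch of this perturbation is shown below. It assumes the normal distribution is centered at 1 (so the forecast is unbiased), draws an independent factor for each time step, and clamps negative demands to zero; none of these details is stated explicitly, and the demand values are made up.

```python
import random


def simulate_real_demands(forecast_iops, forecast_error, rng):
    """Scale each forecasted demand by a factor drawn from a normal
    distribution with mean 1 (assumed) and std dev `forecast_error`."""
    real = []
    for demand in forecast_iops:
        factor = rng.gauss(1.0, forecast_error)
        real.append(max(0.0, demand * factor))   # demand cannot be negative
    return real


forecast = [250.0, 300.0, 350.0, 400.0]          # hypothetical forecast (IOPS)
rng = random.Random(7)
exact = simulate_real_demands(forecast, 0.0, rng)    # zero error: unchanged
noisy = simulate_real_demands(forecast, 0.2, rng)    # 20% forecasting error
```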

Figure 15 (a) shows the ratio of the observed utility loss to the predicted utility loss. It is much bigger than that of the model error: 560 vs 75 with 100% error. This is because the future forecasting error has a larger scale. For example, under-estimating the future demands by 10% may lead to an under-estimate of 100 IOPS, which may throw off the performance prediction even more than model errors do directly. Figure 15 (b) shows the CDF of the remaining utility loss after invoking Action Advisor's action schedule. It shows that as the future forecasting error grows, the probability that the Action Advisor's decision is helpful drops very quickly. The decision can even result in a utility loss higher than doing nothing. This confirms our design choice: when the difference between the predicted and observed values is high, we should apply a defensive strategy rather than operate in the normal mode.

Comparing the results for model errors and future forecasting errors, the quality of the decision is more sensitive to future forecasting accuracy than to model accuracy. In addition, we have used the ARIMA algorithm to perform time-series analysis on HP's Cello99 real-world trace, and the results show that more than 60% of the predictions fall within 15% of the real value and more than 80% fall within 20%.

5 Related Work

Storage virtualization can be host based [7], network based [23] or storage controller based [28]. It can operate at the file-level abstraction [13] or the block-level abstraction [14]. These storage virtualization solutions provide support for automatically extending volume size. However, they do not offer multi-action SLO enforcement mechanisms, and only recently have single-action (workload throttling) SLO enforcement mechanisms been combined with storage virtualization solutions [9]. SLO enforcement can also be performed by storage resource management software such as ControlCenter from EMC [1] and TotalStorage Productivity Center from IBM [2]. These management frameworks provide sensor and actuator frameworks, and they have also started to provide problem analysis and solution planning functionality. However, the current versions of these products do not have the ability to combine multiple SLO enforcement mechanisms.

Research prototypes that provide single-action solutions for handling SLO violations exist for a range of storage management actions. The Chameleon [25], SLEDRunner [9] and Facade [17] prototypes provide SLO enforcement based on workload throttling. QoSMig [10] and Aqueduct [16] provide SLO enforcement based on data migration. Ergastulum [6], Appia [27] and Minerva [3] are capacity planning tools that can be used either to design a new infrastructure or to extend an existing deployment in order to satisfy SLOs.

Hippodrome [5] is a feedback based storage management framework from HP that monitors system behavior and comes up with a strategy to move the system from its current state to the desired state. It focuses on migrating data and re-configuring the system to carry out that transformation.

6 Conclusion and Future Work

SMART generates a combination of corrective actions. Its action selection algorithm considers a variety of information, including the forecasted system state, the estimated cost-benefit effect of each action, and business constraints, and generates an action schedule that reduces the system utility loss. It can also generate action plans for different optimization windows and react to both expected and unexpected load surges. SMART's prototype has been implemented in a file system. Our experiments show that the system utility value improves as predicted and that SMART's action decisions can result in less than 4% utility loss. Finally, it is important to note that this framework can also be deployed as part of storage resource management software or storage virtualization software. Currently, we are designing a robust feedback mechanism to handle various uncertainties in production systems. We are also developing pruning techniques to reduce the decision making overhead, making SMART applicable in large data center and scientific deployments.

Acknowledgments

We want to thank John Palmer for his insights and comments on earlier versions of this paper. We also want to thank the anonymous reviewers for their valuable comments. Finally, we thank the HP Labs Storage Systems Department for making their traces available to the general public.

Bibliography

- [1] EMC ControlCenter family of storage resource management (SRM). https://www.emc.com/products/storage_management/controlcenter.jsp.

- [2] IBM TotalStorage. https://www-1.ibm.com/servers/storage.

- [3] ALVAREZ, G. A., BOROWSKY, E., GO, S., ROMER, T. H., BECKER-SZENDY, R., GOLDING, R., MERCHANT, A., SPASOJEVIC, M., VEITCH, A., AND WILKES, J. Minerva: An automated resource provisioning tool for large-scale storage systems. ACM Transactions on Computer Systems 19, 4 (2001), 483-518.

- [4] ANDERSON, E. Simple table-based modeling of storage devices. Tech. Rep. HPL-SSP-2001-4, HP Laboratories, July 2001.

- [5] ANDERSON, E., HOBBS, M., KEETON, K., SPENCE, S., UYSAL, M., AND VEITCH, A. Hippodrome: Running circles around storage administration. Proceedings of Conference on File and Storage Technologies (FAST) (Jan. 2002), 175-188.

- [6] ANDERSON, E., KALLAHALLA, M., SPENCE, S., SWAMINATHAN, R., AND WANG, Q. Ergastulum: An approach to solving the workload and device configuration problem. Tech. Rep. HPL-SSP-2001-5, HP Laboratories, July 2001.

- [7] ANONYMOUS. Features of Veritas Volume Manager for UNIX and Veritas File System. https://www.veritas.com/us/products/volumemanager/whitepaper-02.html (2005).

- [8] AZOFF, M. E. Neural network time series forecasting of financial markets.

- [9] CHAMBLISS, D., ALVAREZ, G. A., PANDEY, P., JADAV, D., XU, J., MENON, R., AND LEE, T. Performance virtualization for large-scale storage systems. Proceedings of the 22nd Symposium on Reliable Distributed Systems (Oct. 2003), 109-118.

- [10] DASGUPTA, K., GHOSAL, S., JAIN, R., SHARMA, U., AND VERMA, A. QoSMig: Adaptive rate-controlled migration of bulk data in storage systems. ICDE (2005).

- [11] DIEBOLD, F. X., SCHUERMANN, T., AND STROUGHAIR, J. Pitfalls and opportunities in the use of extreme value theory in risk management.

- [12] GANGER, G. R., WORTHINGTON, B. L., AND PATT, Y. N. The DiskSim simulation environment version 1.0 reference manual. Tech. Rep. CSE-TR-358-98, 27 1998.

- [13] GHEMAWAT, S., GOBIOFF, H., AND LEUNG, S.-T. Google file system. Proceedings of SOSP (2003).

- [14] GLIDER, J., FUENTE, F., AND SCALES, W. The software architecture of a SAN storage control system. IBM Systems Journal 42, 2 (2003), 232-249.

- [15] KARP, R. On-line algorithms versus off-line algorithms: How much is it worth to know the future? In Proceedings of IFIP 12th World Computer Congress (1992), vol. 1, pp. 416-429.

- [16] LU, C., ALVAREZ, G. A., AND WILKES, J. Aqueduct: Online data migration with performance guarantees. Proceedings of Conference on File and Storage Technologies (FAST) (Jan. 2002), 175-188.

- [17] LUMB, C., MERCHANT, A., AND ALVAREZ, G. Facade: Virtual storage devices with performance guarantees. Proceedings of 2nd Conference on File and Storage Technologies (FAST) (Apr. 2003), 131-144.

- [18] RUEMMLER, C., AND WILKES, J. A trace-driven analysis of disk working set sizes. Tech. Rep. HPL-OSR-93-23, Palo Alto, CA, USA, May 1993.

- [19] RUEMMLER, C., AND WILKES, J. An introduction to disk drive modeling. Computer 27, 3 (1994), 17-28.

- [20] RUSSELL, S., AND NORVIG, P. Artificial Intelligence: A Modern Approach. Prentice Hall, 2003.

- [21] SCHMUCK, F., AND HASKIN, R. GPFS: A shared disk file system for large computing clusters, 2002.

- [22] SHRIVER, E., MERCHANT, A., AND WILKES, J. An analytic behavior model for disk drives with readahead caches and request reordering. In Proceedings of the 1998 ACM SIGMETRICS joint international conference on Measurement and modeling of computer systems (New York, NY, USA, 1998), ACM Press, pp. 182-191.

- [23] TATE, J., BOGARD, N., AND JAHN, T. Implementing the IBM TotalStorage SAN Volume Controller software on the Cisco MDS 9000. IBM Redbook SG24-7059-00 (2004).

- [24] TRAN, N., AND REED, D. A. ARIMA time series modeling and forecasting for adaptive I/O prefetching. Proceedings of the 15th international conference on Supercomputing (2001), 473-485.

- [25] UTTAMCHANDANI, S., YIN, L., ALVAREZ, G., PALMER, J., AND AGHA, G. Chameleon: A self-evolving, fully-adaptive resource arbitrator for storage systems. Proceedings of the USENIX Annual Technical Conference (USENIX) (June 2005).

- [26] WANG, M., AU, K., AILAMAKI, A., BROCKWELL, A., FALOUTSOS, C., AND GANGER, G. R. Storage device performance prediction with CART models. SIGMETRICS Perform. Eval. Rev. 32, 1 (2004), 412-413.

- [27] WARD, J., O'SULLIVAN, M., SHAHOURNIAN, T., WILKES, J., WU, R., AND BEYER, D. Appia and the HP SAN Designer: Automatic storage area network fabric design. In HP Technical Conference (Apr. 2003).

- [28] WARRICK, C., ALLUIS, O., ET AL. The IBM TotalStorage DS8000 series: Concepts and architecture. IBM Redbook SG24-6452-00 (2005).

- [29] WILKES, J. The Pantheon storage-system simulator. Tech. Rep. HPL-SSP-95-14, HP Laboratories, Dec. 1995.

About this document ...

SMART: An Integrated Multi-Action Advisor for Storage Systems

This document was generated using the LaTeX2HTML translator Version 2002-2-1 (1.70).


The translation was initiated by Li Yin on 2006-04-17


Last changed: 9 May 2006 ch